2025

Journal Articles

-

HAIFAI: Human-AI Interaction for Mental Face Reconstruction

Florian Strohm, Mihai Bâce, Andreas Bulling

ACM Transactions on Interactive Intelligent Systems (TiiS), 2025.

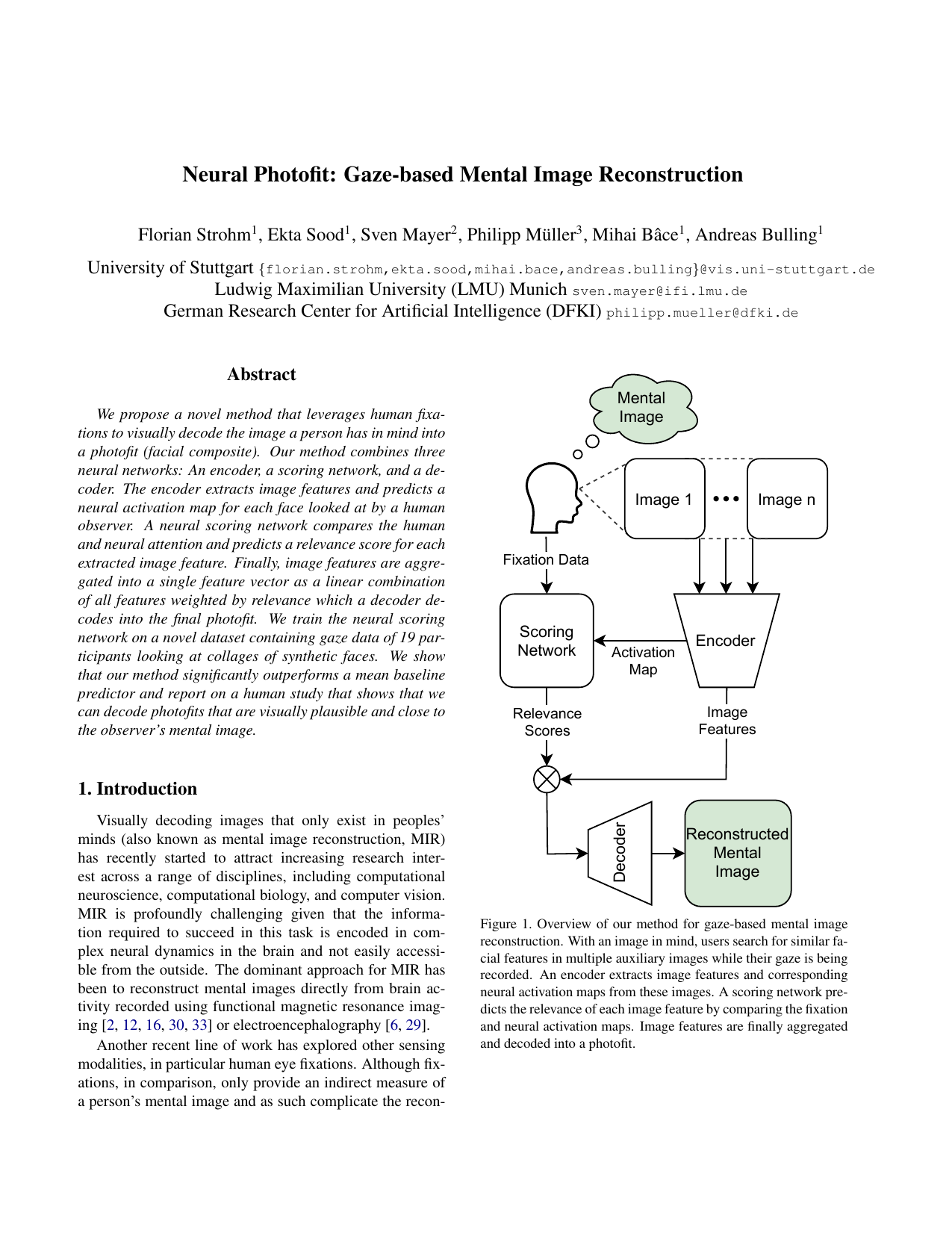

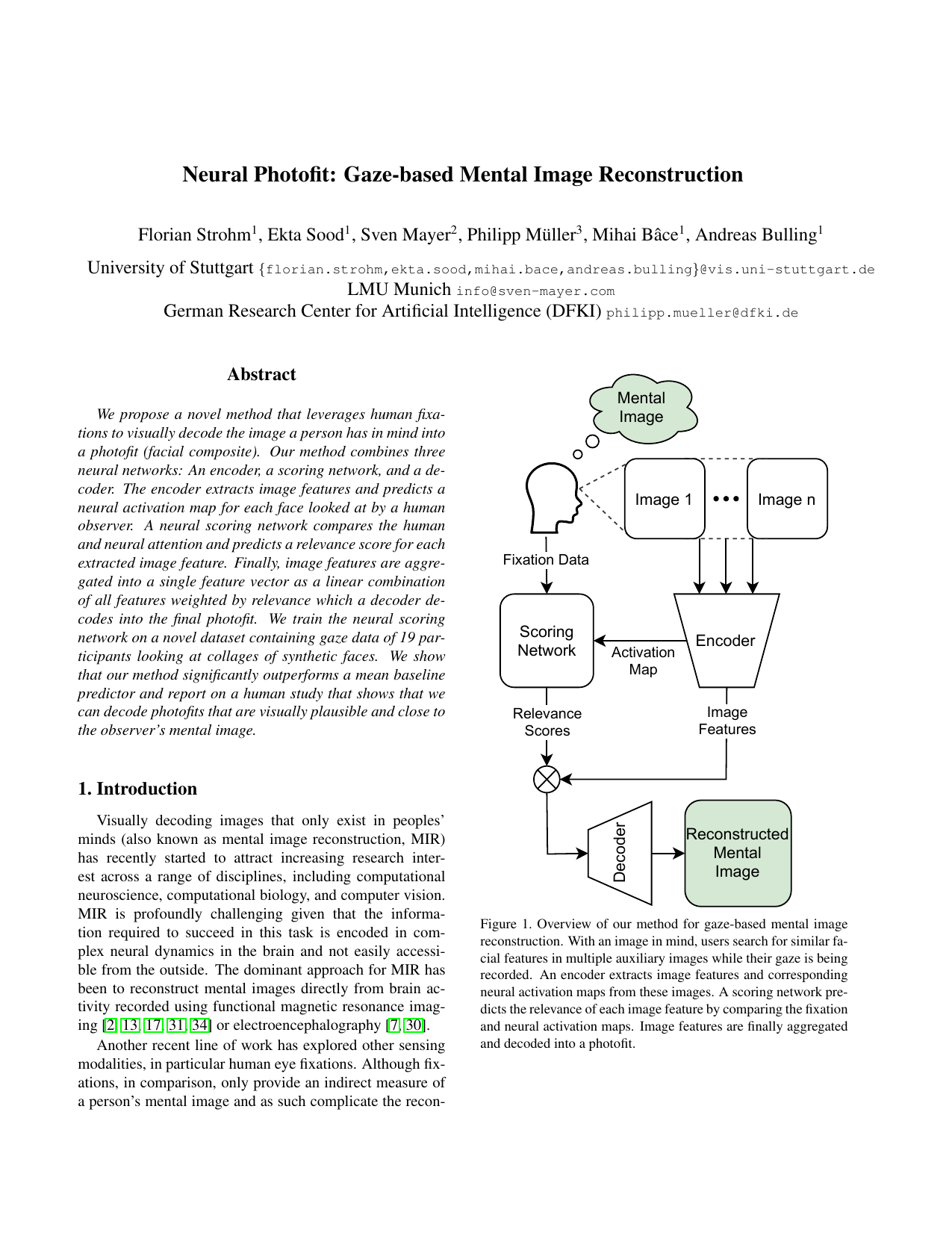

We present HAIFAI - a novel two-stage system where humans and AI interact to tackle the challenging task of reconstructing a visual representation of a face that exists only in a person’s mind. In the first stage, users iteratively rank images our reconstruction system presents based on their resemblance to a mental image. These rankings, in turn, allow the system to extract relevant image features, fuse them into a unified feature vector, and use a generative model to produce an initial reconstruction of the mental image. The second stage leverages an existing face editing method, allowing users to manually refine and further improve this reconstruction using an easy-to-use slider interface for face shape manipulation. To avoid the need for tedious human data collection for training the reconstruction system, we introduce a computational user model of human ranking behaviour. For this, we collected a small face ranking dataset through an online crowd-sourcing study containing data from 275 participants. We evaluate HAIFAI and an ablated version in a 12-participant user study and demonstrate that our approach outperforms the previous state of the art regarding reconstruction quality, usability, perceived workload, and reconstruction speed. We further validate the reconstructions in a subsequent face ranking study with 18 participants and show that HAIFAI achieves a new state-of-the-art identification rate of 60.6%. These findings represent a significant advancement towards developing new interactive intelligent systems capable of reliably and effortlessly reconstructing a user’s mental image.

Paper Access: http://arxiv.org/abs/2412.06323

@article{strohm25_tiis, title = {{HAIFAI}: {Human}-{AI} {Interaction} for {Mental} {Face} {Reconstruction}}, shorttitle = {{HAIFAI}}, url = {http://arxiv.org/abs/2412.06323}, doi = {10.48550/arXiv.2412.06323}, journal = {ACM Transactions on Interactive Intelligent Systems (TiiS)}, author = {Strohm, Florian and Bâce, Mihai and Bulling, Andreas}, year = {2025}, keywords = {Computer Science - Computer Vision and Pattern Recognition} }

Conference Papers

-

iAssistADL: Intelligent assistive device for patients with neuro-degenerative movement disorder: Concepts and first implementations

Winfried Ilg, Isabell Wochner, Jhon Paul Feliciano Charaja, Veronika Hofmann, Ole Strenge, Melanie Adam, Regine Lendway, Jan Kerner, Bhavya Deep Vashisht, Marko Ackermann, Friedemann Bunjes, Urs Schneider, Martin Giese, Andreas Bulling, Syn Schmitt, Christophe Maufroy, Daniel Florian Benedict Haeufle

Proc. International Consortium for Rehabilitation Robotics (ICORR), pp. 1–6, 2025.

Paper: ilg25_icorr.pdf

@inproceedings{ilg25_icorr, title = {iAssistADL: Intelligent assistive device for patients with neuro-degenerative movement disorder: Concepts and first implementations}, author = {Ilg, Winfried and Wochner, Isabell and Charaja, Jhon Paul Feliciano and Hofmann, Veronika and Strenge, Ole and Adam, Melanie and Lendway, Regine and Kerner, Jan and Vashisht, Bhavya Deep and Ackermann, Marko and Bunjes, Friedemann and Schneider, Urs and Giese, Martin and Bulling, Andreas and Schmitt, Syn and Maufroy, Christophe and Haeufle, Daniel Florian Benedict}, year = {2025}, pages = {1--6}, booktitle = {Proc. International Consortium for Rehabilitation Robotics (ICORR)}, doi = {} }

-

SummAct: Uncovering User Intentions Through Interactive Behaviour Summarisation

Guanhua Zhang, Mohamed Ahmed, Zhiming Hu, Andreas Bulling

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–23, 2025.

Recent work has highlighted the potential of modelling interactive behaviour analogously to natural language. We propose interactive behaviour summarisation as a novel computational task and demonstrate its usefulness for automatically uncovering latent user intentions while interacting with graphical user interfaces. To tackle this task, we introduce SummAct – a novel hierarchical method to summarise low-level input actions into high-level intentions. SummAct first identifies sub-goals from user actions using a large language model and in-context learning. High-level intentions are then obtained by fine-tuning the model using a novel UI element attention to preserve detailed context information embedded within UI elements during summarisation. Through a series of evaluations, we demonstrate that SummAct significantly outperforms baselines across desktop and mobile interfaces as well as interactive tasks by up to 21.9%. We further show three exciting interactive applications that benefit from SummAct: interactive behaviour forecasting, automatic behaviour synonym identification, and language-based behaviour retrieval.

@inproceedings{zhang25_chi, title = {SummAct: Uncovering User Intentions Through Interactive Behaviour Summarisation}, author = {Zhang, Guanhua and Ahmed, Mohamed and Hu, Zhiming and Bulling, Andreas}, year = {2025}, pages = {1--23}, booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)}, doi = {} }

-

Chartist: Task-driven Eye Movement Control for Chart Reading

Danqing Shi, Yao Wang, Yunpeng Bai, Andreas Bulling, Antti Oulasvirta

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2025.

To design data visualizations that are easy to comprehend, we need to understand how people with different interests read them. Computational models of scanpaths on charts could complement empirical studies by offering estimates of user performance inexpensively; however, previous models have been limited to gaze patterns and overlooked the effects of tasks. Here, we contribute a model that simulates how users move their eyes to extract information from the chart in order to solve analytical tasks, including retrieve value, filter, and find extreme. Our insight is a two-level hierarchical control structure. At the high level, the model uses an LLM to comprehend information gained so far and uses this representation to select a goal for the lower-level controllers, which in turn move the eyes according to a sampling policy learned via reinforcement learning. The model can accurately predict task-driven scanpaths and reproduce the human-like statistical summary across tasks.

Paper: shi25_chi.pdf

@inproceedings{shi25_chi, title = {Chartist: Task-driven Eye Movement Control for Chart Reading}, author = {Shi, Danqing and Wang, Yao and Bai, Yunpeng and Bulling, Andreas and Oulasvirta, Antti}, year = {2025}, pages = {1--14}, booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)}, doi = {10.1145/3706598.3713128} }

-

ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos

Lei Shi, Paul-Christian Bürkner, Andreas Bulling

Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025.

We present ActionDiffusion - a novel diffusion model for procedure planning in instructional videos that is the first to take temporal inter-dependencies between actions into account. Our approach is in stark contrast to existing methods that fail to exploit the rich information content available in the particular order in which actions are performed. Our method unifies the learning of temporal dependencies between actions and denoising of the action plan in the diffusion process by projecting the action information into the noise space. This is achieved 1) by adding action embeddings in the noise masks in the noise-adding phase and 2) by introducing an attention mechanism in the noise prediction network to learn the correlations between different action steps. We report extensive experiments on three instructional video benchmark datasets (CrossTask, Coin, and NIV) and show that our method outperforms previous state-of-the-art methods on all metrics on CrossTask and NIV and on all metrics except accuracy on the Coin dataset. We show that by adding action embeddings into the noise mask the diffusion model can better learn temporal dependencies between actions and improve performance on procedure planning.

@inproceedings{shi25_wacv, author = {Shi, Lei and Bürkner, Paul-Christian and Bulling, Andreas}, title = {ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos}, booktitle = {Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, year = {2025}, pages = {} }

2024

Journal Articles

-

HOIMotion: Forecasting Human Motion During Human-Object Interactions Using Egocentric 3D Object Bounding Boxes

Zhiming Hu, Zheming Yin, Daniel Haeufle, Syn Schmitt, Andreas Bulling

IEEE Transactions on Visualization and Computer Graphics (TVCG), pp. 1–11, 2024.

Best Journal Paper Award

We present HOIMotion – a novel approach for human motion forecasting during human-object interactions that integrates information about past body poses and egocentric 3D object bounding boxes. Human motion forecasting is important in many augmented reality applications but most existing methods have only used past body poses to predict future motion. HOIMotion first uses an encoder-residual graph convolutional network (GCN) and multi-layer perceptrons to extract features from body poses and egocentric 3D object bounding boxes, respectively. Our method then fuses pose and object features into a novel pose-object graph and uses a residual-decoder GCN to forecast future body motion. We extensively evaluate our method on the Aria digital twin (ADT) and MoGaze datasets and show that HOIMotion consistently outperforms state-of-the-art methods by a large margin of up to 8.7% on ADT and 7.2% on MoGaze in terms of mean per joint position error. Complementing these evaluations, we report a human study (N=20) that shows that the improvements achieved by our method result in forecasted poses being perceived as both more precise and more realistic than those of existing methods. Taken together, these results reveal the significant information content available in egocentric 3D object bounding boxes for human motion forecasting and the effectiveness of our method in exploiting this information.

Paper: hu24_ismar.pdf

@article{hu24_ismar, author = {Hu, Zhiming and Yin, Zheming and Haeufle, Daniel and Schmitt, Syn and Bulling, Andreas}, title = {HOIMotion: Forecasting Human Motion During Human-Object Interactions Using Egocentric 3D Object Bounding Boxes}, journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)}, year = {2024}, pages = {1--11}, doi = {10.1109/TVCG.2024.3456161} }

-

Pose2Gaze: Eye-body Coordination during Daily Activities for Gaze Prediction from Full-body Poses

Zhiming Hu, Jiahui Xu, Syn Schmitt, Andreas Bulling

IEEE Transactions on Visualization and Computer Graphics (TVCG), pp. 1–12, 2024.

Human eye gaze plays a significant role in many virtual and augmented reality (VR/AR) applications, such as gaze-contingent rendering, gaze-based interaction, or eye-based activity recognition. However, prior works on gaze analysis and prediction have only explored eye-head coordination and were limited to human-object interactions. We first report a comprehensive analysis of eye-body coordination in various human-object and human-human interaction activities based on four public datasets collected in real-world (MoGaze), VR (ADT), as well as AR (GIMO and EgoBody) environments. We show that in human-object interactions, e.g. pick and place, eye gaze exhibits strong correlations with full-body motion while in human-human interactions, e.g. chat and teach, a person’s gaze direction is correlated with the body orientation towards the interaction partner. Informed by these analyses we then present Pose2Gaze – a novel eye-body coordination model that uses a convolutional neural network and a spatio-temporal graph convolutional neural network to extract features from head direction and full-body poses, respectively, and then uses a convolutional neural network to predict eye gaze. We compare our method with state-of-the-art methods that predict eye gaze only from head movements and show that Pose2Gaze outperforms these baselines with an average improvement of 24.0% on MoGaze, 10.1% on ADT, 21.3% on GIMO, and 28.6% on EgoBody in mean angular error, respectively. We also show that our method significantly outperforms prior methods in the sample downstream task of eye-based activity recognition. These results underline the significant information content available in eye-body coordination during daily activities and open up a new direction for gaze prediction.

Paper: hu24_tvcg.pdf

@article{hu24_tvcg, author = {Hu, Zhiming and Xu, Jiahui and Schmitt, Syn and Bulling, Andreas}, title = {Pose2Gaze: Eye-body Coordination during Daily Activities for Gaze Prediction from Full-body Poses}, journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)}, year = {2024}, pages = {1--12}, doi = {10.1109/TVCG.2024.3412190} }

-

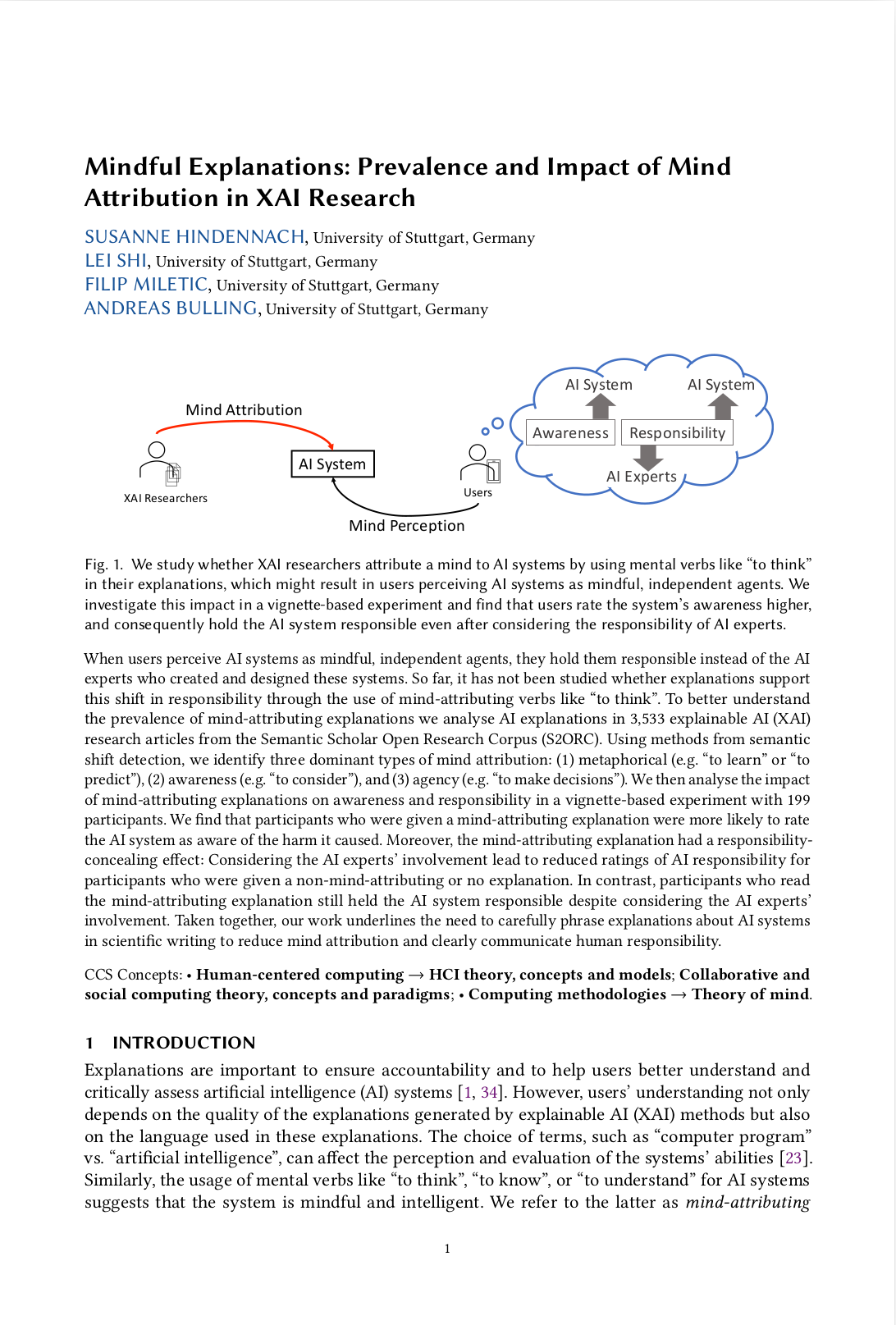

Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research

Susanne Hindennach, Lei Shi, Filip Miletic, Andreas Bulling

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (CSCW), pp. 1–42, 2024.

Best Paper Honourable Mention Award

When users perceive AI systems as mindful, independent agents, they hold them responsible instead of the AI experts who created and designed these systems. So far, it has not been studied whether explanations support this shift in responsibility through the use of mind-attributing verbs like "to think". To better understand the prevalence of mind-attributing explanations we analyse AI explanations in 3,533 explainable AI (XAI) research articles from the Semantic Scholar Open Research Corpus (S2ORC). Using methods from semantic shift detection, we identify three dominant types of mind attribution: (1) metaphorical (e.g. "to learn" or "to predict"), (2) awareness (e.g. "to consider"), and (3) agency (e.g. "to make decisions"). We then analyse the impact of mind-attributing explanations on awareness and responsibility in a vignette-based experiment with 199 participants. We find that participants who were given a mind-attributing explanation were more likely to rate the AI system as aware of the harm it caused. Moreover, the mind-attributing explanation had a responsibility-concealing effect: Considering the AI experts’ involvement led to reduced ratings of AI responsibility for participants who were given a non-mind-attributing or no explanation. In contrast, participants who read the mind-attributing explanation still held the AI system responsible despite considering the AI experts’ involvement. Taken together, our work underlines the need to carefully phrase explanations about AI systems in scientific writing to reduce mind attribution and clearly communicate human responsibility.

@article{hindennach24_pacm, title = {Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research}, author = {Hindennach, Susanne and Shi, Lei and Miletic, Filip and Bulling, Andreas}, year = {2024}, pages = {1--42}, volume = {8}, number = {CSCW}, doi = {10.1145/3641009}, journal = {Proc. ACM on Human-Computer Interaction (PACM HCI)}, url = {https://medium.com/acm-cscw/be-mindful-when-using-mindful-descriptions-in-explanations-about-ai-bfc7666885c6} }

-

Individual differences in visuo-spatial working memory capacity and prior knowledge during interrupted reading

Francesca Zermiani, Prajit Dhar, Florian Strohm, Sibylle Baumbach, Andreas Bulling, Maria Wirzberger

Frontiers in Cognition, 3, pp. 1–9, 2024.

Interruptions are often pervasive and require attentional shifts from the primary task. Limited data are available on the factors influencing individuals’ efficiency in resuming from interruptions during digital reading. The reported investigation, conducted using the InteRead dataset, examined whether individual differences in visuo-spatial working memory capacity (vsWMC) and prior knowledge could influence resumption lag times during interrupted reading. Participants’ vsWMC was assessed using the symmetry span (SSPAN) task, while a pre-test questionnaire targeted their background knowledge about the text. While reading an extract from a Sherlock Holmes story, they were interrupted six times and asked to answer an opinion question. Our analyses revealed that the interaction between vsWMC and prior knowledge significantly predicted the time needed to resume reading following an interruption. The results from our analyses are discussed in relation to theoretical frameworks of task resumption and current research in the field.

Paper: zermiani24_fic.pdf

@article{zermiani24_fic, title = {Individual differences in visuo-spatial working memory capacity and prior knowledge during interrupted reading}, author = {Zermiani, Francesca and Dhar, Prajit and Strohm, Florian and Baumbach, Sibylle and Bulling, Andreas and Wirzberger, Maria}, year = {2024}, doi = {10.3389/fcogn.2024.1434642}, pages = {1--9}, volume = {3}, journal = {Frontiers in Cognition} }

-

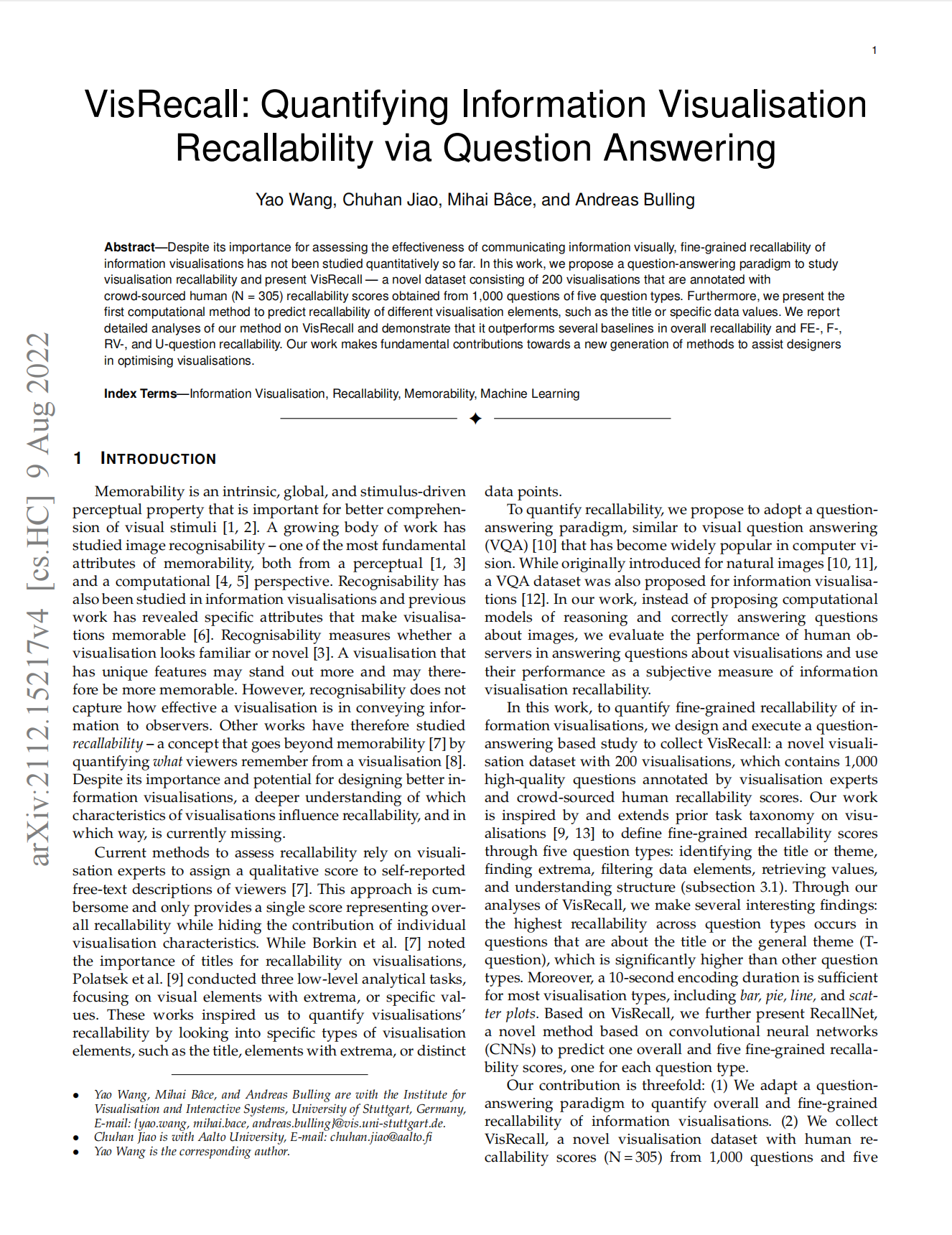

VisRecall++: Analysing and Predicting Visualisation Recallability from Gaze Behaviour

Yao Wang, Yue Jiang, Zhiming Hu, Constantin Ruhdorfer, Mihai Bâce, Andreas Bulling

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (ETRA), pp. 1–18, 2024.

Question answering has recently been proposed as a promising means to assess the recallability of information visualisations. However, prior works are yet to study the link between visually encoding a visualisation in memory and recall performance. To fill this gap, we propose VisRecall++ – a novel 40-participant recallability dataset that contains gaze data on 200 visualisations and five question types, such as identifying the title and finding extreme values. We measured recallability by asking participants questions after they observed the visualisation for 10 seconds. Our analyses reveal several insights, for example that saccade amplitude, number of fixations, and fixation duration differ significantly between high and low recallability groups. Finally, we propose GazeRecallNet – a novel computational method to predict recallability from gaze behaviour that outperforms several baselines on this task. Taken together, our results shed light on assessing recallability from gaze behaviour and inform future work on recallability-based visualisation optimisation.

@article{wang24_etra, title = {VisRecall++: Analysing and Predicting Visualisation Recallability from Gaze Behaviour}, author = {Wang, Yao and Jiang, Yue and Hu, Zhiming and Ruhdorfer, Constantin and Bâce, Mihai and Bulling, Andreas}, year = {2024}, journal = {Proc. ACM on Human-Computer Interaction (PACM HCI)}, pages = {1--18}, volume = {8}, number = {ETRA}, doi = {10.1145/3655613} }

-

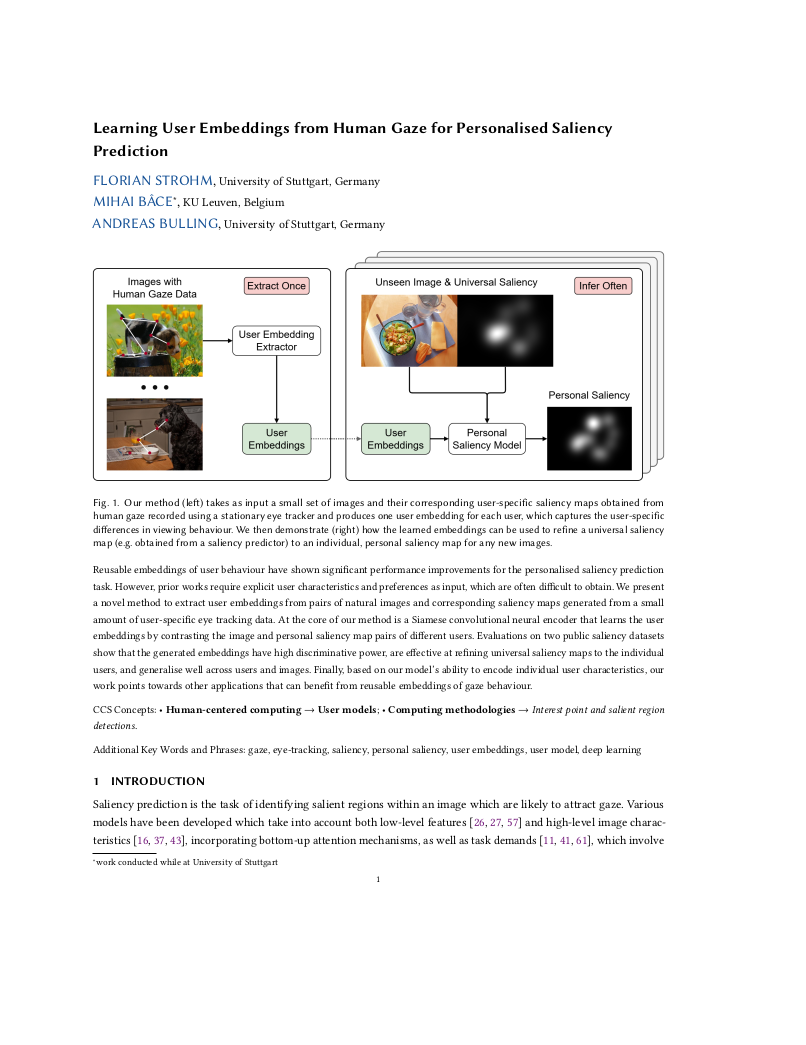

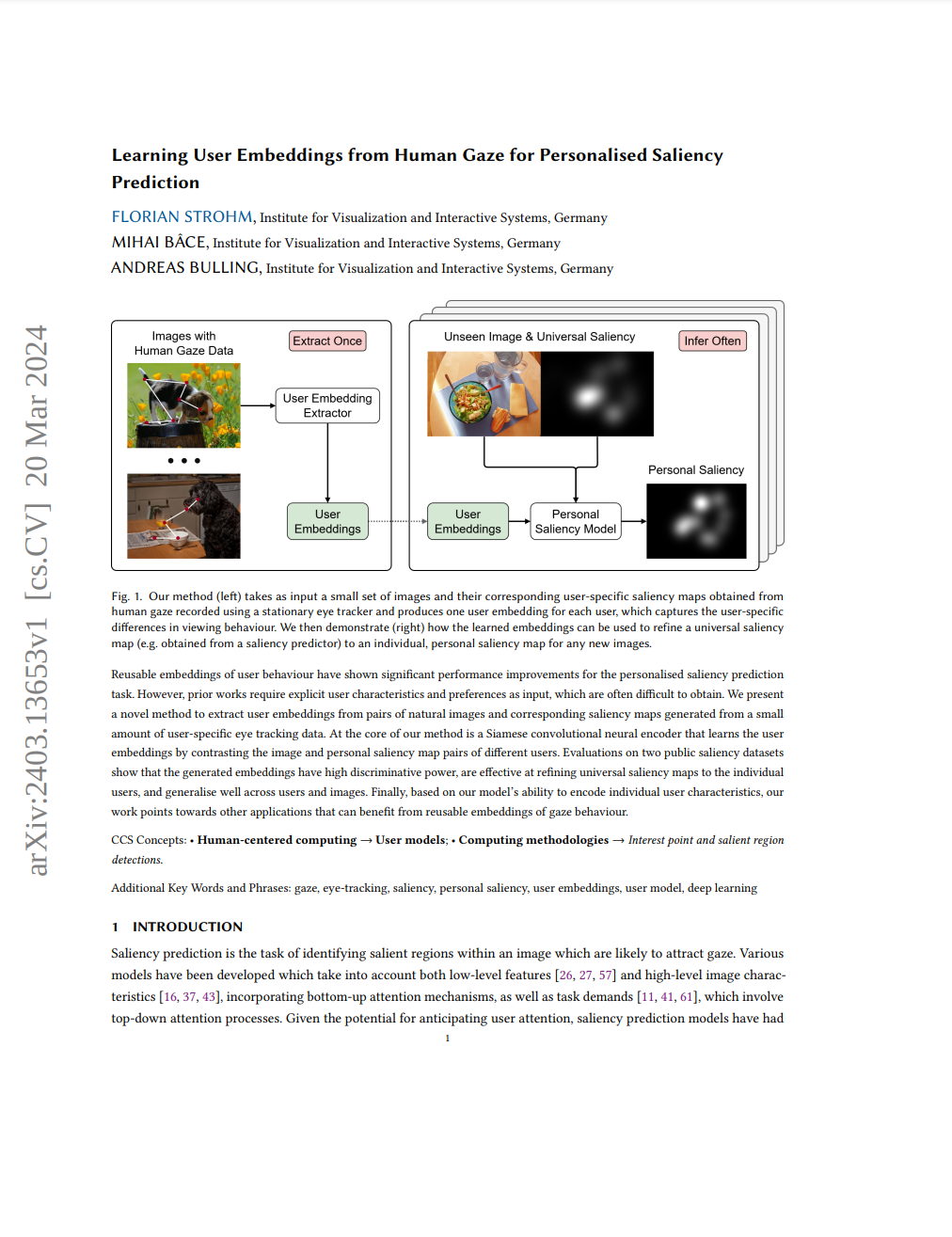

Learning User Embeddings from Human Gaze for Personalised Saliency Prediction

Florian Strohm, Mihai Bâce, Andreas Bulling

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (ETRA), pp. 1–18, 2024.

Reusable embeddings of user behaviour have shown significant performance improvements for the personalised saliency prediction task. However, prior works require explicit user characteristics and preferences as input, which are often difficult to obtain. We present a novel method to extract user embeddings from pairs of natural images and corresponding saliency maps generated from a small amount of user-specific eye tracking data. At the core of our method is a Siamese convolutional neural encoder that learns the user embeddings by contrasting the image and personal saliency map pairs of different users. Evaluations on two saliency datasets show that the generated embeddings have high discriminative power, are effective at refining universal saliency maps to the individual users, and generalise well across users and images. Finally, based on our model’s ability to encode individual user characteristics, our work points towards other applications that can benefit from reusable embeddings of gaze behaviour.

doi: 10.1145/3655603

Paper: strohm24_etra.pdf

@article{strohm24_etra, title = {Learning User Embeddings from Human Gaze for Personalised Saliency Prediction}, author = {Strohm, Florian and Bâce, Mihai and Bulling, Andreas}, year = {2024}, journal = {Proc. ACM on Human-Computer Interaction (PACM HCI)}, pages = {1--18}, volume = {8}, number = {ETRA}, doi = {10.1145/3655603} }

-

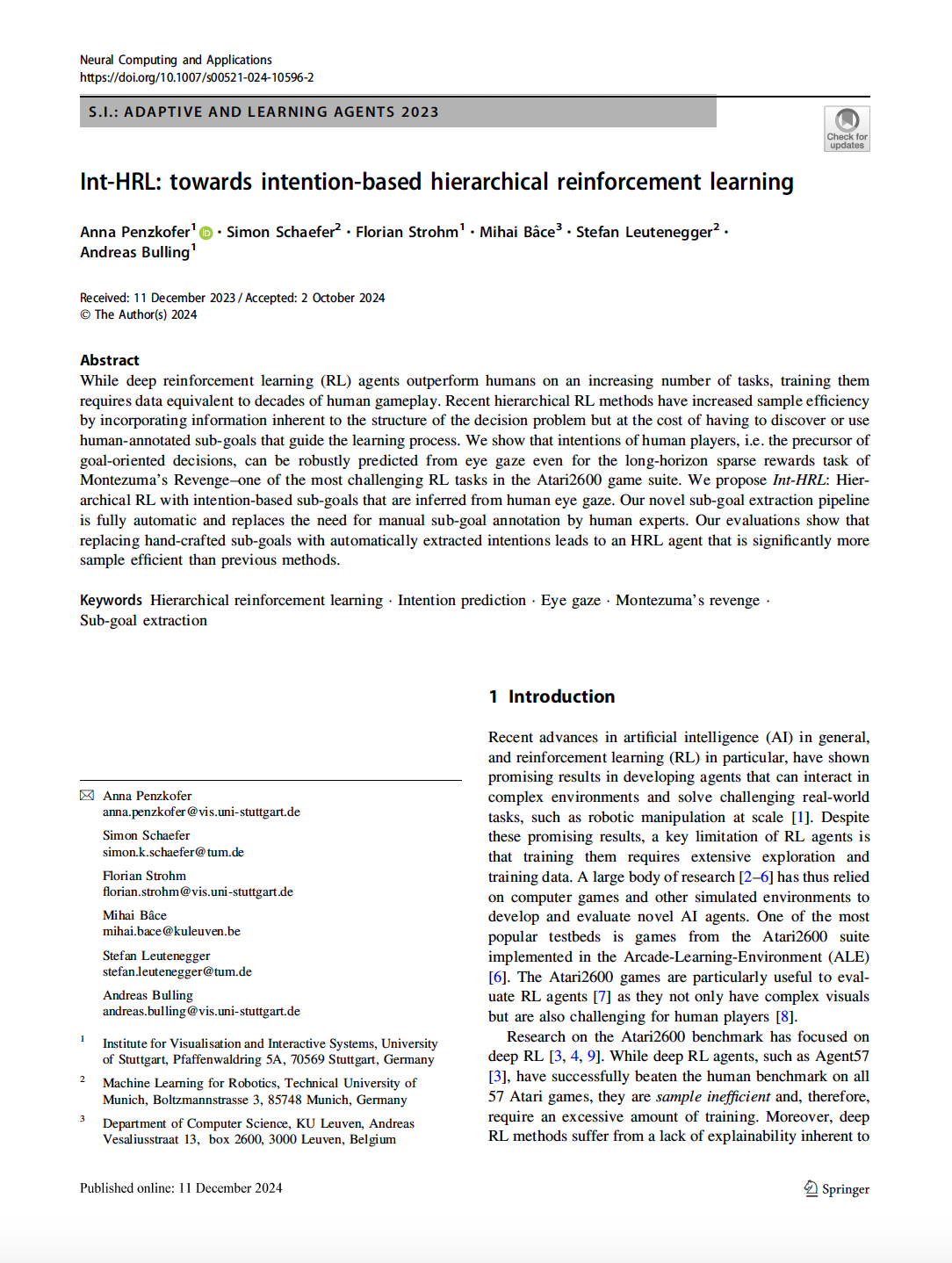

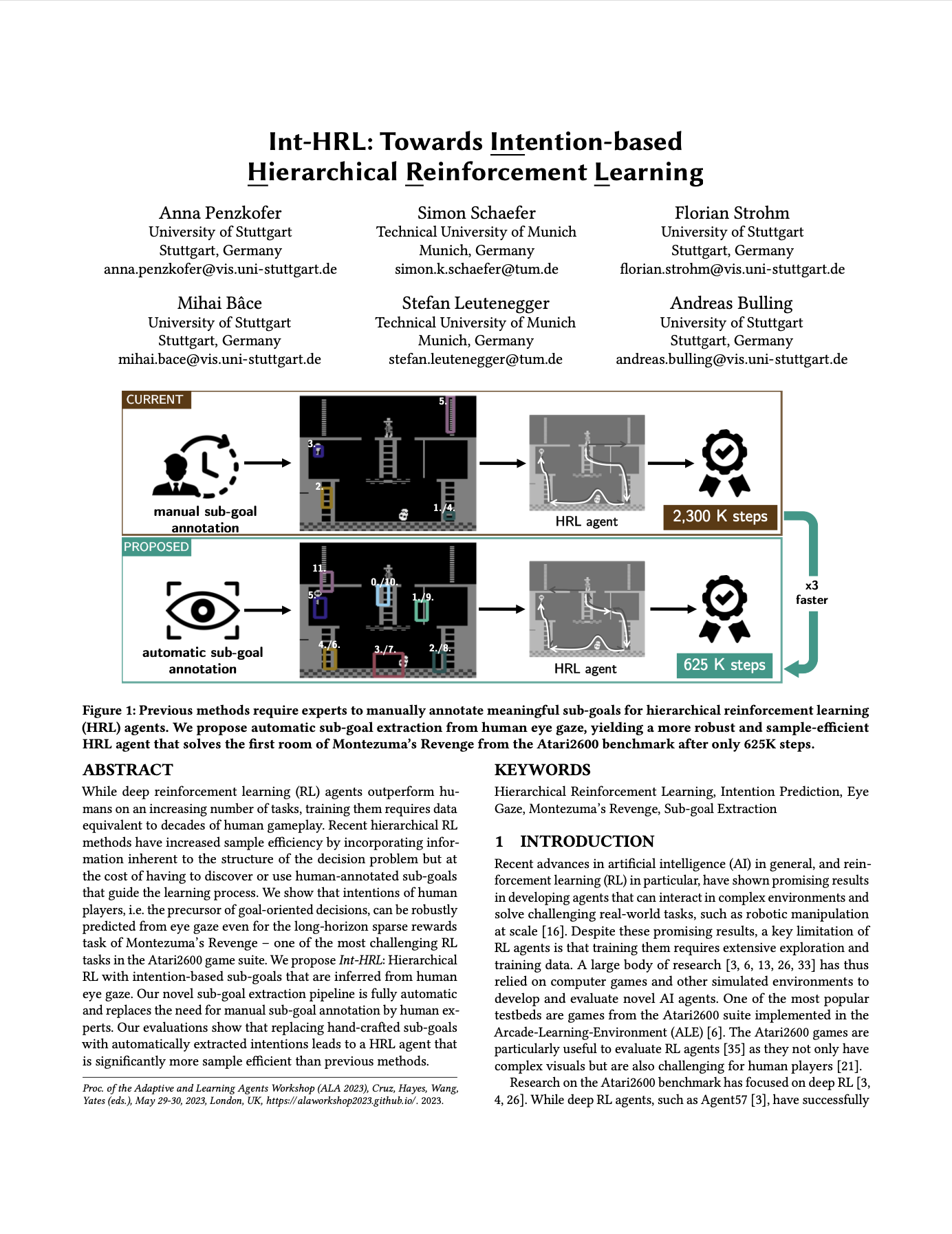

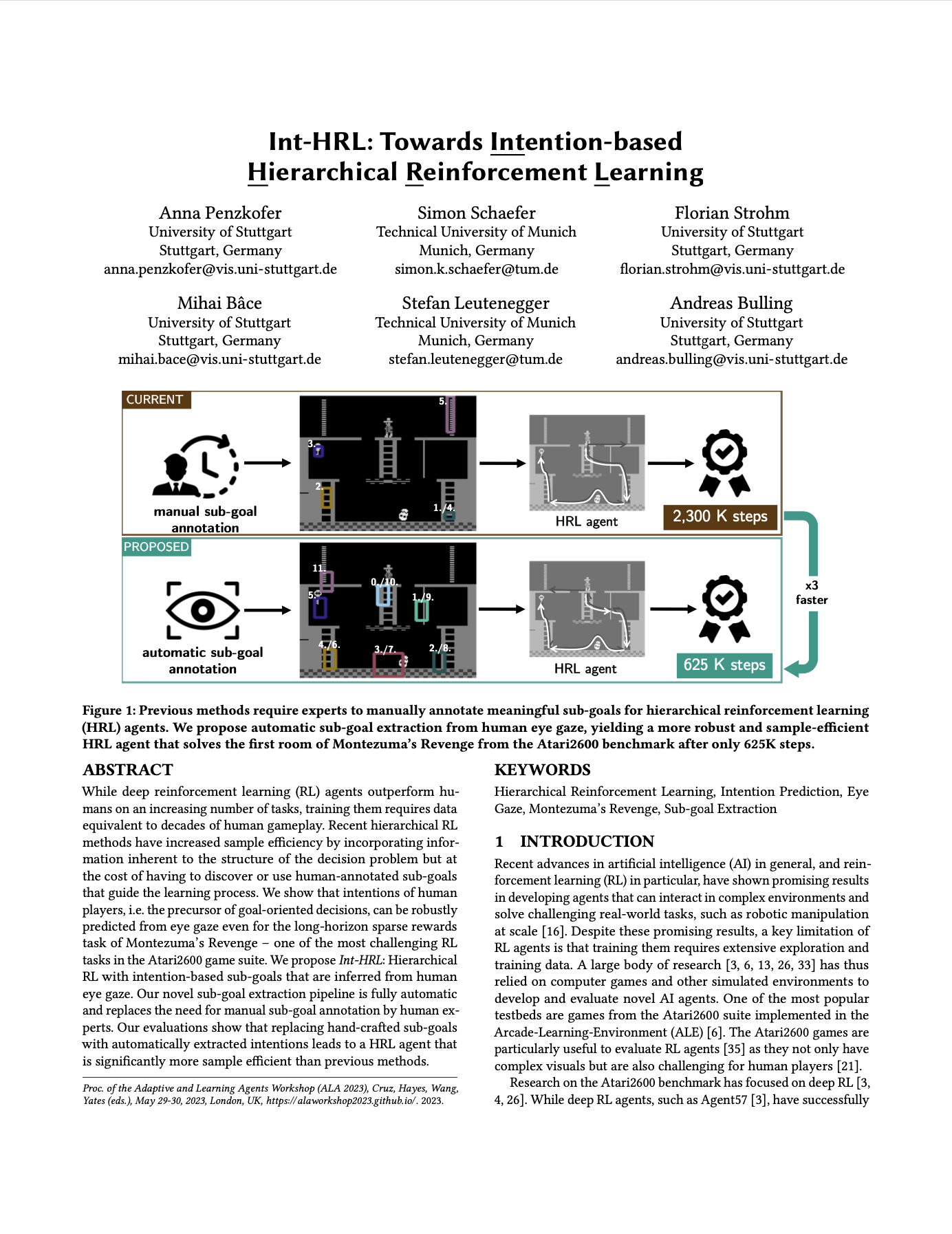

Int-HRL: Towards Intention-based Hierarchical Reinforcement Learning

Anna Penzkofer, Simon Schaefer, Florian Strohm, Mihai Bâce, Stefan Leutenegger, Andreas Bulling

Neural Computing and Applications (NCAA), 36, pp. 1–7, 2024.

While deep reinforcement learning (RL) agents outperform humans on an increasing number of tasks, training them requires data equivalent to decades of human gameplay. Recent hierarchical RL methods have increased sample efficiency by incorporating information inherent to the structure of the decision problem but at the cost of having to discover or use human-annotated sub-goals that guide the learning process. We show that intentions of human players, i.e., the precursor of goal-oriented decisions, can be robustly predicted from eye gaze even for the long-horizon sparse rewards task of Montezuma’s Revenge – one of the most challenging RL tasks in the Atari2600 game suite. We propose Int-HRL: Hierarchical RL with intention-based sub-goals that are inferred from human eye gaze. Our novel sub-goal extraction pipeline is fully automatic and replaces the need for manual sub-goal annotation by human experts. Our evaluations show that replacing hand-crafted sub-goals with automatically extracted intentions leads to an HRL agent that is significantly more sample efficient than previous methods.

Paper: penzkofer24_ncaa.pdf

@article{penzkofer24_ncaa, author = {Penzkofer, Anna and Schaefer, Simon and Strohm, Florian and Bâce, Mihai and Leutenegger, Stefan and Bulling, Andreas}, title = {Int-HRL: Towards Intention-based Hierarchical Reinforcement Learning}, journal = {Neural Computing and Applications (NCAA)}, year = {2024}, pages = {1--7}, doi = {10.1007/s00521-024-10596-2}, volume = {36}, issue = {} }

-

PrivatEyes: Appearance-based Gaze Estimation Using Federated Secure Multi-Party Computation

Mayar Elfares, Pascal Reisert, Zhiming Hu, Wenwu Tang, Ralf Küsters, Andreas Bulling

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (ETRA), pp. 1–23, 2024.

Latest gaze estimation methods require large-scale training data but their collection and exchange pose significant privacy risks. We propose PrivatEyes - the first privacy-enhancing training approach for appearance-based gaze estimation based on federated learning (FL) and secure multi-party computation (MPC). PrivatEyes enables training gaze estimators on multiple local datasets across different users and server-based secure aggregation of the individual estimators’ updates. PrivatEyes guarantees that individual gaze data remains private even if a majority of the aggregating servers is malicious. We also introduce a new data leakage attack DualView that shows that PrivatEyes limits the leakage of private training data more effectively than previous approaches. Evaluations on the MPIIGaze, MPIIFaceGaze, GazeCapture, and NVGaze datasets further show that the improved privacy does not lead to a lower gaze estimation accuracy or substantially higher computational costs - both of which are on par with its non-secure counterparts.

doi: 10.1145/3655606

Paper: elfares24_etra.pdf

@article{elfares24_etra, title = {PrivatEyes: Appearance-based Gaze Estimation Using Federated Secure Multi-Party Computation}, author = {Elfares, Mayar and Reisert, Pascal and Hu, Zhiming and Tang, Wenwu and Küsters, Ralf and Bulling, Andreas}, year = {2024}, journal = {Proc. ACM on Human-Computer Interaction (PACM HCI)}, pages = {1--23}, volume = {8}, number = {ETRA}, doi = {10.1145/3655606} }

Conference Papers

-

DisMouse: Disentangling Information from Mouse Movement Data

Guanhua Zhang, Zhiming Hu, Andreas Bulling

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 1–13, 2024.

Mouse movement data contain rich information about users, performed tasks, and user interfaces, but separating the respective components remains challenging and unexplored. As a first step to address this challenge, we propose DisMouse – the first method to disentangle user-specific and user-independent information and stochastic variations from mouse movement data. At the core of our method is an autoencoder trained in a semi-supervised fashion, consisting of a self-supervised denoising diffusion process and a supervised contrastive user identification module. Through evaluations on three datasets, we show that DisMouse 1) captures complementary information of mouse input, hence providing an interpretable framework for modelling mouse movements, 2) can be used to produce refined features, thus enabling various applications such as personalised and variable mouse data generation, and 3) generalises across different datasets. Taken together, our results underline the significant potential of disentangled representation learning for explainable, controllable, and generalised mouse behaviour modelling.

@inproceedings{zhang24_uist, title = {DisMouse: Disentangling Information from Mouse Movement Data}, author = {Zhang, Guanhua and Hu, Zhiming and Bulling, Andreas}, year = {2024}, pages = {1--13}, booktitle = {Proc. ACM Symposium on User Interface Software and Technology (UIST)}, doi = {10.1145/3654777.3676411} }

-

Mouse2Vec: Learning Reusable Semantic Representations of Mouse Behaviour

Guanhua Zhang, Zhiming Hu, Mihai Bâce, Andreas Bulling

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–17, 2024.

The mouse is a pervasive input device used for a wide range of interactive applications. However, computational modelling of mouse behaviour typically requires time-consuming design and extraction of handcrafted features, or approaches that are application-specific. We instead propose Mouse2Vec – a novel self-supervised method designed to learn semantic representations of mouse behaviour that are reusable across users and applications. Mouse2Vec uses a Transformer-based encoder-decoder architecture, which is specifically geared for mouse data: During pretraining, the encoder learns an embedding of input mouse trajectories while the decoder reconstructs the input and simultaneously detects mouse click events. We show that the representations learned by our method can identify interpretable mouse behaviour clusters and retrieve similar mouse trajectories. We also demonstrate on three sample downstream tasks that the representations can be practically used to augment mouse data for training supervised methods and serve as an effective feature extractor.

@inproceedings{zhang24_chi, title = {Mouse2Vec: Learning Reusable Semantic Representations of Mouse Behaviour}, author = {Zhang, Guanhua and Hu, Zhiming and B{\^a}ce, Mihai and Bulling, Andreas}, year = {2024}, pages = {1--17}, booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)}, doi = {10.1145/3613904.3642141} }

-

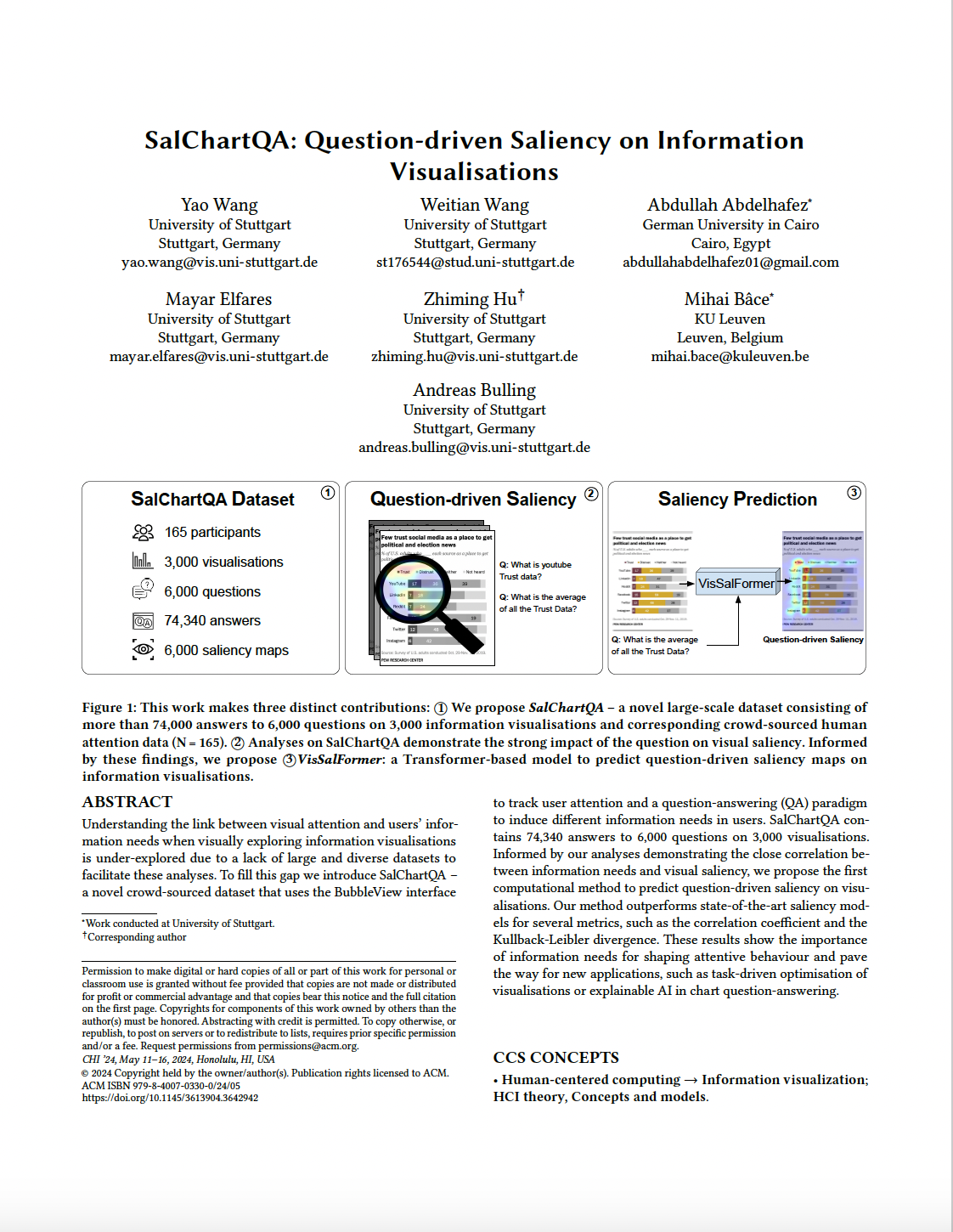

SalChartQA: Question-driven Saliency on Information Visualisations

Yao Wang, Weitian Wang, Abdullah Abdelhafez, Mayar Elfares, Zhiming Hu, Mihai Bâce, Andreas Bulling

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2024.

The link between visual attention and users’ needs when visually exploring information visualisations is under-explored due to a lack of large and diverse datasets to facilitate these analyses. To fill this gap, we introduce SalChartQA – a novel crowd-sourced dataset that uses the BubbleView interface as a proxy for human gaze and a question-answering (QA) paradigm to induce different information needs in users. SalChartQA contains 74,340 answers to 6,000 questions on 3,000 visualisations. Informed by our analyses demonstrating the tight correlation between the question and visual saliency, we propose the first computational method to predict question-driven saliency on information visualisations. Our method outperforms state-of-the-art saliency models, improving several metrics, such as the correlation coefficient and the Kullback-Leibler divergence. These results show the importance of information needs for shaping attention behaviour and pave the way for new applications, such as task-driven optimisation of visualisations or explainable AI in chart question-answering.

Paper: wang24_chi.pdf

Supplementary Material: wang24_chi_sup.pdf

@inproceedings{wang24_chi, title = {SalChartQA: Question-driven Saliency on Information Visualisations}, author = {Wang, Yao and Wang, Weitian and Abdelhafez, Abdullah and Elfares, Mayar and Hu, Zhiming and B{\^a}ce, Mihai and Bulling, Andreas}, year = {2024}, pages = {1--14}, booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)}, doi = {10.1145/3613904.3642942} }

-

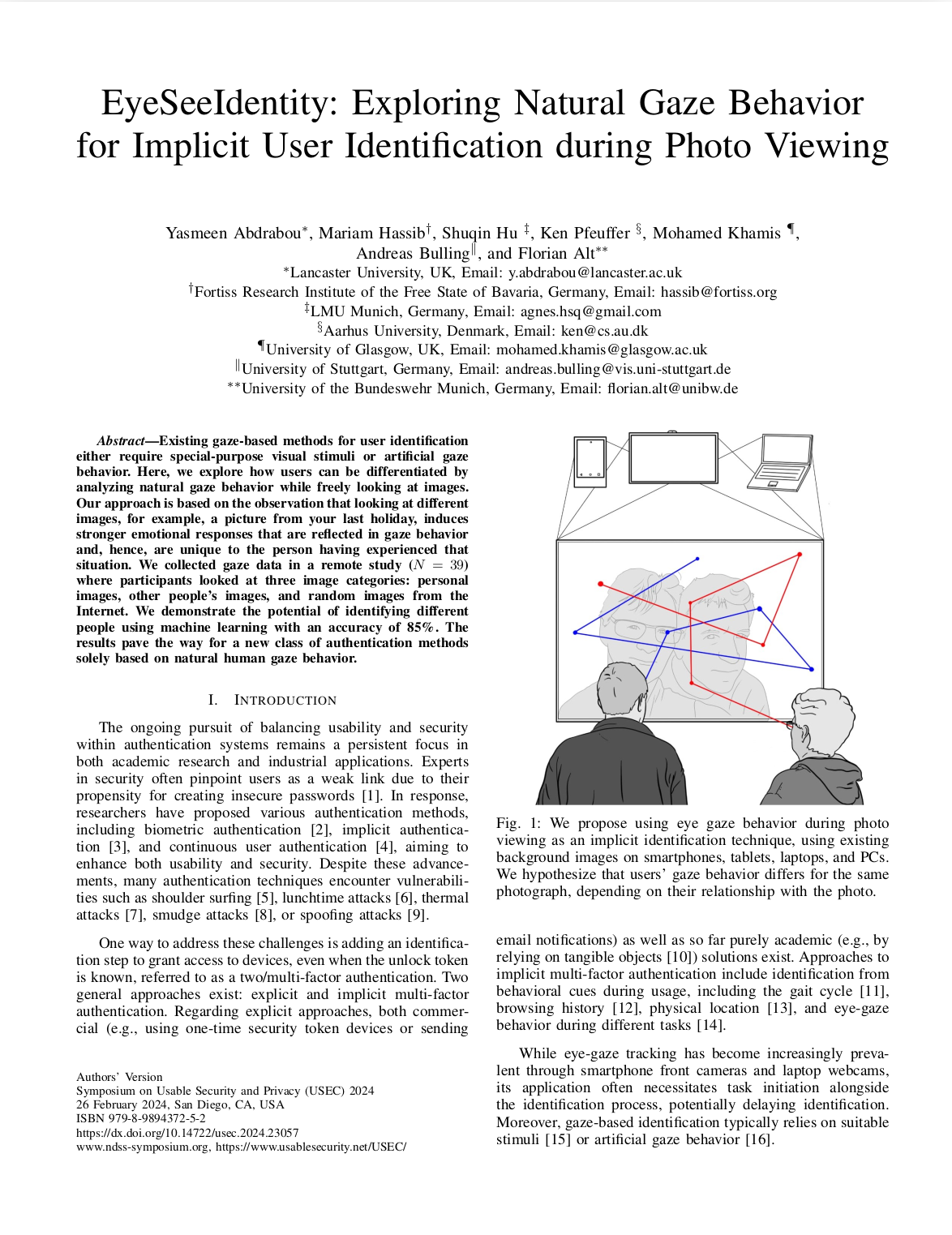

EyeSeeIdentity: Exploring Natural Gaze Behaviour for Implicit User Identification during Photo Viewing

Yasmeen Abdrabou, Mariam Hassib, Shuqin Hu, Ken Pfeuffer, Mohamed Khamis, Andreas Bulling, Florian Alt

Proc. Symposium on Usable Security and Privacy (USEC), pp. 1–12, 2024.

Existing gaze-based methods for user identification either require special-purpose visual stimuli or artificial gaze behaviour. Here, we explore how users can be differentiated by analysing natural gaze behaviour while freely looking at images. Our approach is based on the observation that looking at different images, for example, a picture from your last holiday, induces stronger emotional responses that are reflected in gaze behaviour and, hence, is unique to the person having experienced that situation. We collected gaze data in a remote study (N = 39) where participants looked at three image categories: personal images, other people’s images, and random images from the Internet. We demonstrate the potential of identifying different people using machine learning with an accuracy of 85%. The results pave the way towards a new class of authentication methods solely based on natural human gaze behaviour.

Paper: abdrabou24_usec.pdf

@inproceedings{abdrabou24_usec, author = {Abdrabou, Yasmeen and Hassib, Mariam and Hu, Shuqin and Pfeuffer, Ken and Khamis, Mohamed and Bulling, Andreas and Alt, Florian}, title = {EyeSeeIdentity: Exploring Natural Gaze Behaviour for Implicit User Identification during Photo Viewing}, booktitle = {Proc. Symposium on Usable Security and Privacy (USEC)}, year = {2024}, pages = {1--12} }

-

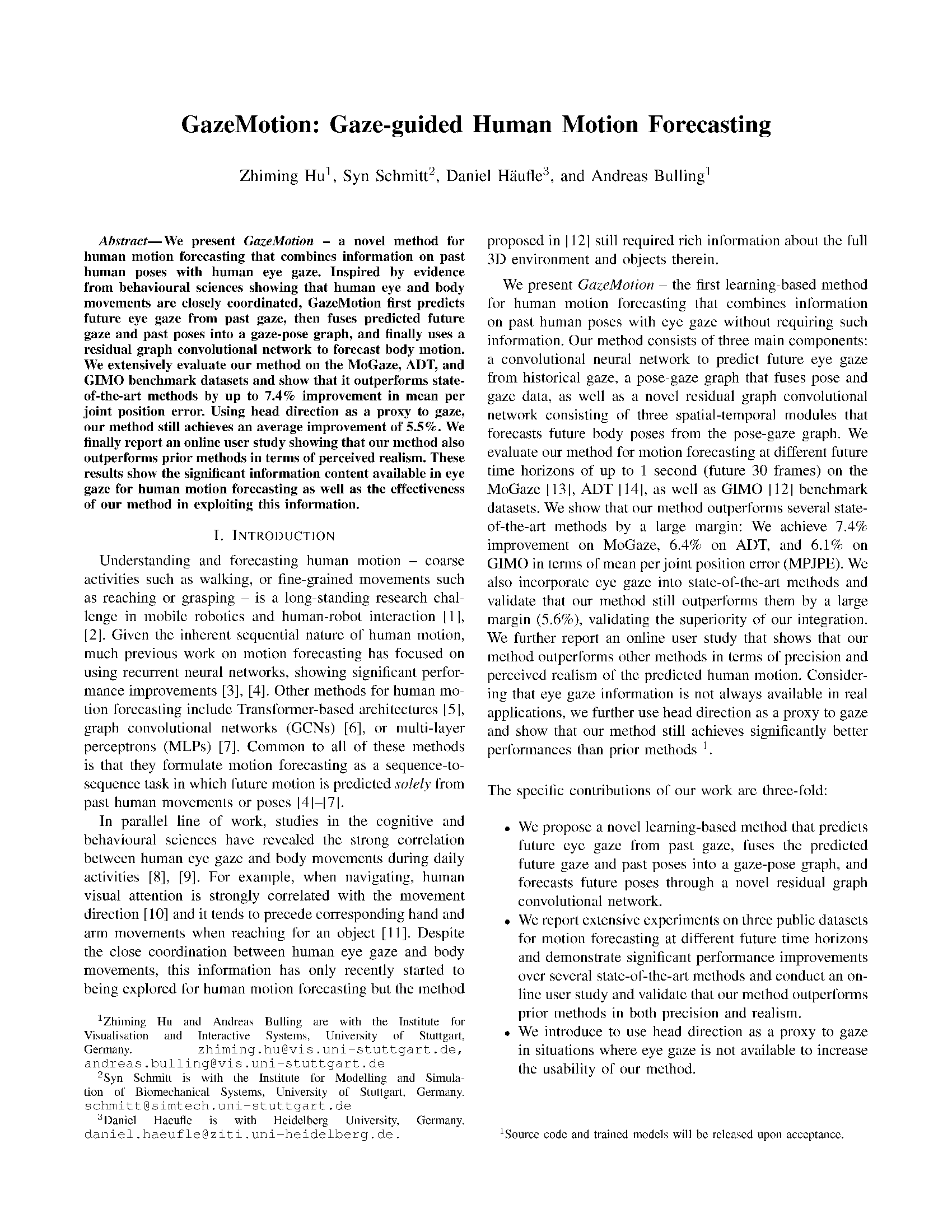

GazeMotion: Gaze-guided Human Motion Forecasting

Zhiming Hu, Syn Schmitt, Daniel Häufle, Andreas Bulling

Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1–6, 2024.

Oral Presentation

We present GazeMotion, a novel method for human motion forecasting that combines information on past human poses with human eye gaze. Inspired by evidence from behavioural sciences showing that human eye and body movements are closely coordinated, GazeMotion first predicts future eye gaze from past gaze, then fuses predicted future gaze and past poses into a gaze-pose graph, and finally uses a residual graph convolutional network to forecast body motion. We extensively evaluate our method on the MoGaze, ADT, and GIMO benchmark datasets and show that it outperforms state-of-the-art methods by up to 7.4% in mean per joint position error. Using head direction as a proxy for gaze, our method still achieves an average improvement of 5.5%. We finally report an online user study showing that our method also outperforms prior methods in terms of perceived realism. These results show the significant information content available in eye gaze for human motion forecasting as well as the effectiveness of our method in exploiting this information.

@inproceedings{hu24_iros, author = {Hu, Zhiming and Schmitt, Syn and Häufle, Daniel and Bulling, Andreas}, title = {GazeMotion: Gaze-guided Human Motion Forecasting}, year = {2024}, pages = {1--6}, booktitle = {Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, video = {https://youtu.be/I-ecIvRqOCY?si=kK8SE0r-JadwOKLt} }

-

Inferring Human Intentions from Predicted Action Probabilities

Lei Shi, Paul-Christian Bürkner, Andreas Bulling

Proc. Workshop on Theory of Mind in Human-AI Interaction at CHI 2024, pp. 1–7, 2024.

Inferring human intentions is a core challenge in human-AI collaboration, but while Bayesian methods struggle with complex visual input, deep neural network (DNN) based methods do not provide uncertainty quantifications. In this work we combine both approaches for the first time and show that the predicted next action probabilities contain information that can be used to infer the underlying user intention. We propose a two-step approach to human intention prediction: While a DNN predicts the probabilities of the next action, MCMC-based Bayesian inference is used to infer the underlying intention from these predictions. This approach not only allows for the independent design of the DNN architecture but also for the subsequent fast, design-independent inference of human intentions. We evaluate our method using a series of experiments on the Watch-And-Help (WAH) dataset and a keyboard and mouse interaction dataset. Our results show that our approach can accurately predict human intentions from observed actions and the implicit information contained in next action probabilities. Furthermore, we show that our approach can predict the correct intention even if only a few actions have been observed.

Paper: shi24_chiw.pdf

@inproceedings{shi24_chiw, author = {Shi, Lei and Bürkner, Paul-Christian and Bulling, Andreas}, title = {Inferring Human Intentions from Predicted Action Probabilities}, booktitle = {Proc. Workshop on Theory of Mind in Human-AI Interaction at CHI 2024}, year = {2024}, pages = {1--7} }

-

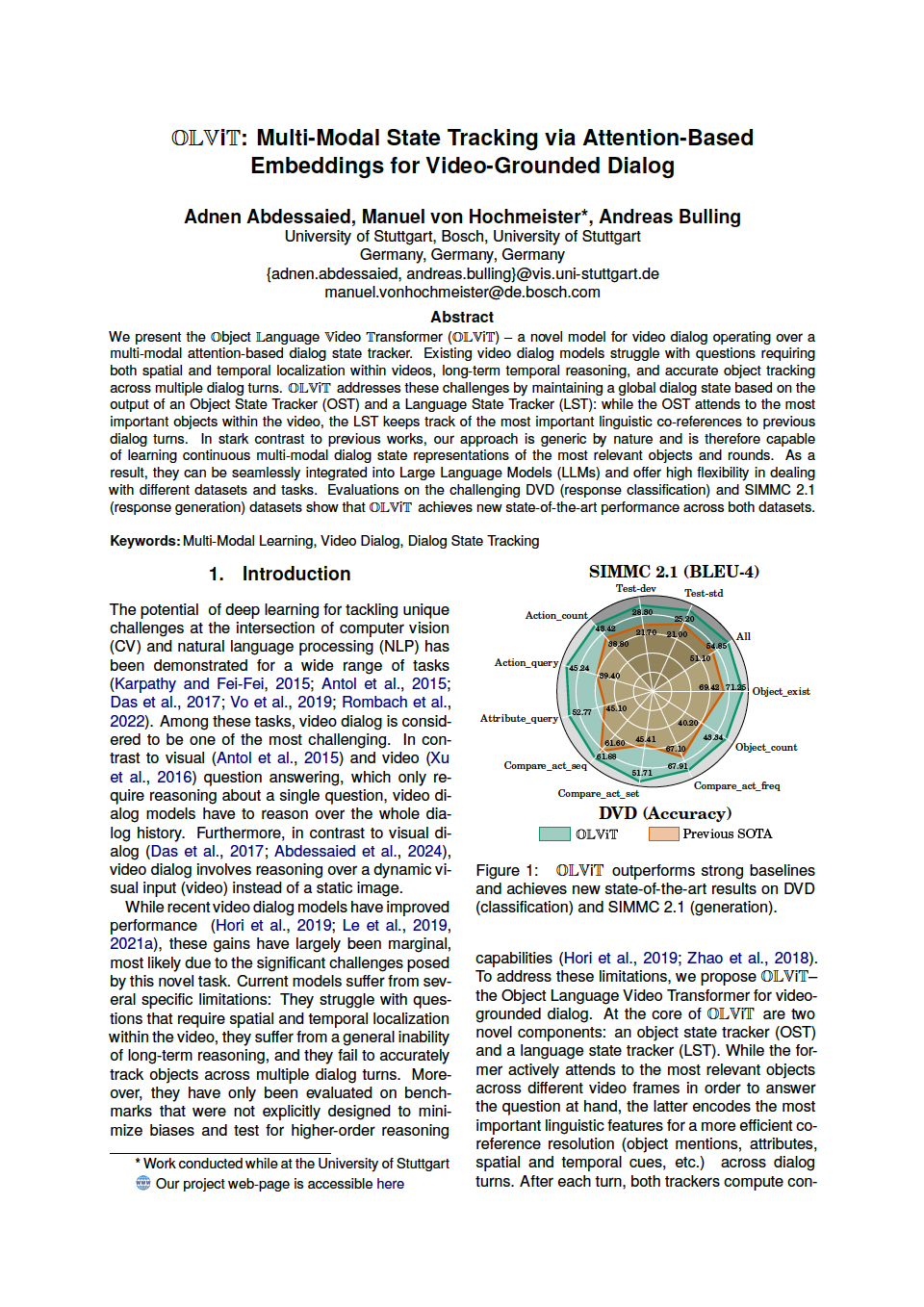

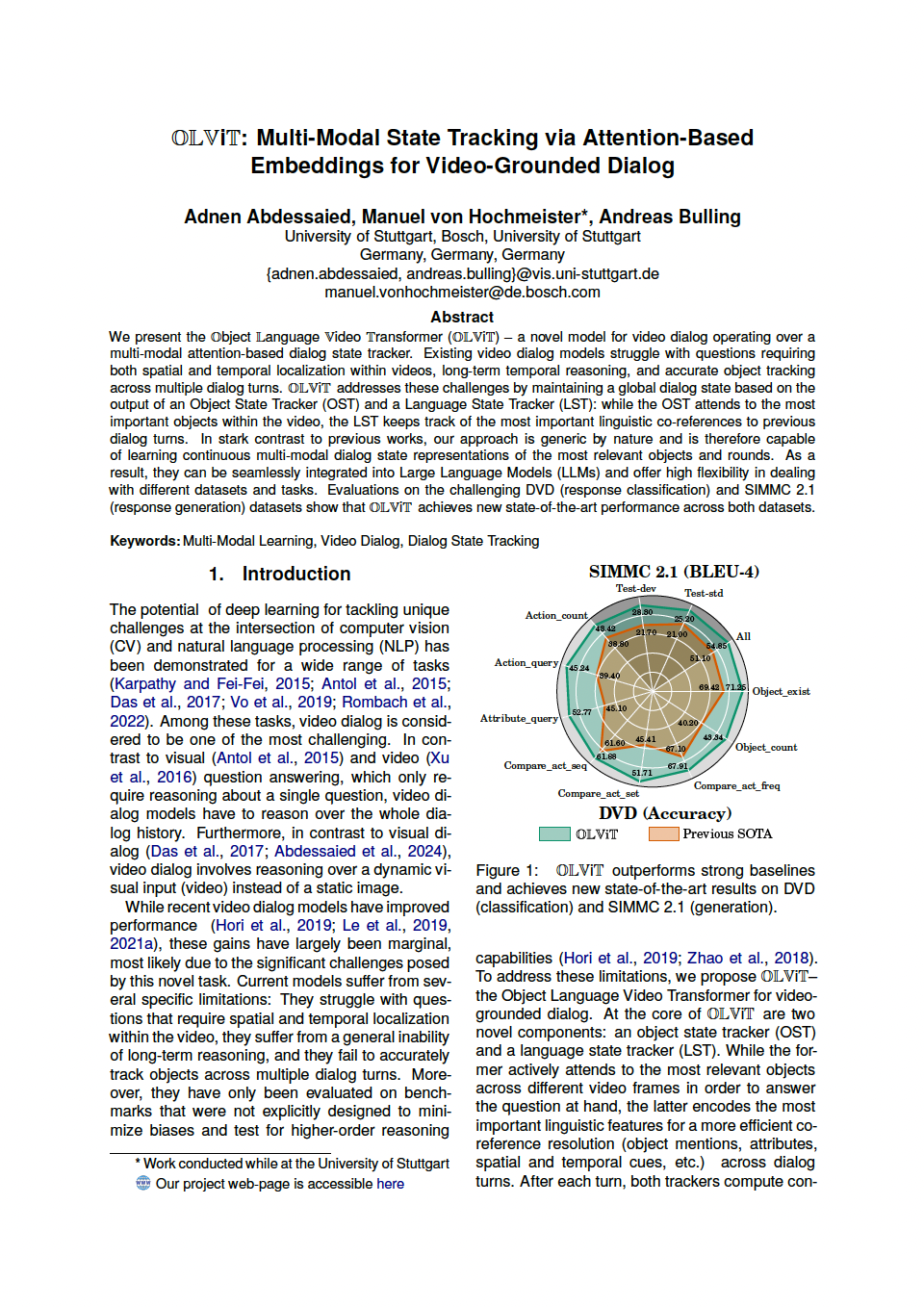

OLViT: Multi-Modal State Tracking via Attention-Based Embeddings for Video-Grounded Dialog

Adnen Abdessaied, Manuel von Hochmeister, Andreas Bulling

Proc. 31st Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING), pp. 1–11, 2024.

We present the Object Language Video Transformer (OLViT) – a novel model for video dialog operating over a multi-modal attention-based dialog state tracker. Existing video dialog models struggle with questions requiring both spatial and temporal localization within videos, long-term temporal reasoning, and accurate object tracking across multiple dialog turns. OLViT addresses these challenges by maintaining a global dialog state based on the output of an Object State Tracker (OST) and a Language State Tracker (LST): while the OST attends to the most important objects within the video, the LST keeps track of the most important linguistic co-references to previous dialog turns. In stark contrast to previous works, our approach is generic by nature and is therefore capable of learning continuous multi-modal dialog state representations of the most relevant objects and rounds. As a result, they can be seamlessly integrated into Large Language Models (LLMs) and offer high flexibility in dealing with different datasets and tasks. Evaluations on the challenging DVD (response classification) and SIMMC 2.1 (response generation) datasets show that OLViT achieves new state-of-the-art performance across both datasets.@inproceedings{abdessaied24_coling, author = {Abdessaied, Adnen and von Hochmeister, Manuel and Bulling, Andreas}, title = {OLViT: Multi-Modal State Tracking via Attention-Based Embeddings for Video-Grounded Dialog}, booktitle = {Proc. 31st Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING)}, year = {2024}, pages = {1--11} } -

Neural Reasoning About Agents’ Goals, Preferences, and Actions

Matteo Bortoletto, Lei Shi, Andreas Bulling

Proc. 38th AAAI Conference on Artificial Intelligence (AAAI), pp. 456–464, 2024.

We propose the Intuitive Reasoning Network (IRENE) – a novel neural model for intuitive psychological reasoning about agents’ goals, preferences, and actions that can generalise previous experiences to new situations. IRENE combines a graph neural network for learning agent and world state representations with a transformer to encode the task context. When evaluated on the challenging Baby Intuitions Benchmark, IRENE achieves new state-of-the-art performance on three out of its five tasks – with up to 48.9 % improvement. In contrast to existing methods, IRENE is able to bind preferences to specific agents, to better distinguish between rational and irrational agents, and to better understand the role of blocking obstacles. We also investigate, for the first time, the influence of the training tasks on test performance. Our analyses demonstrate the effectiveness of IRENE in combining prior knowledge gained during training for unseen evaluation tasks.@inproceedings{bortoletto24_aaai, author = {Bortoletto, Matteo and Shi, Lei and Bulling, Andreas}, title = {Neural Reasoning About Agents’ Goals, Preferences, and Actions}, booktitle = {Proc. 38th AAAI Conference on Artificial Intelligence (AAAI)}, year = {2024}, volume = {38}, number = {1}, pages = {456--464}, doi = {10.1609/aaai.v38i1.27800} } -

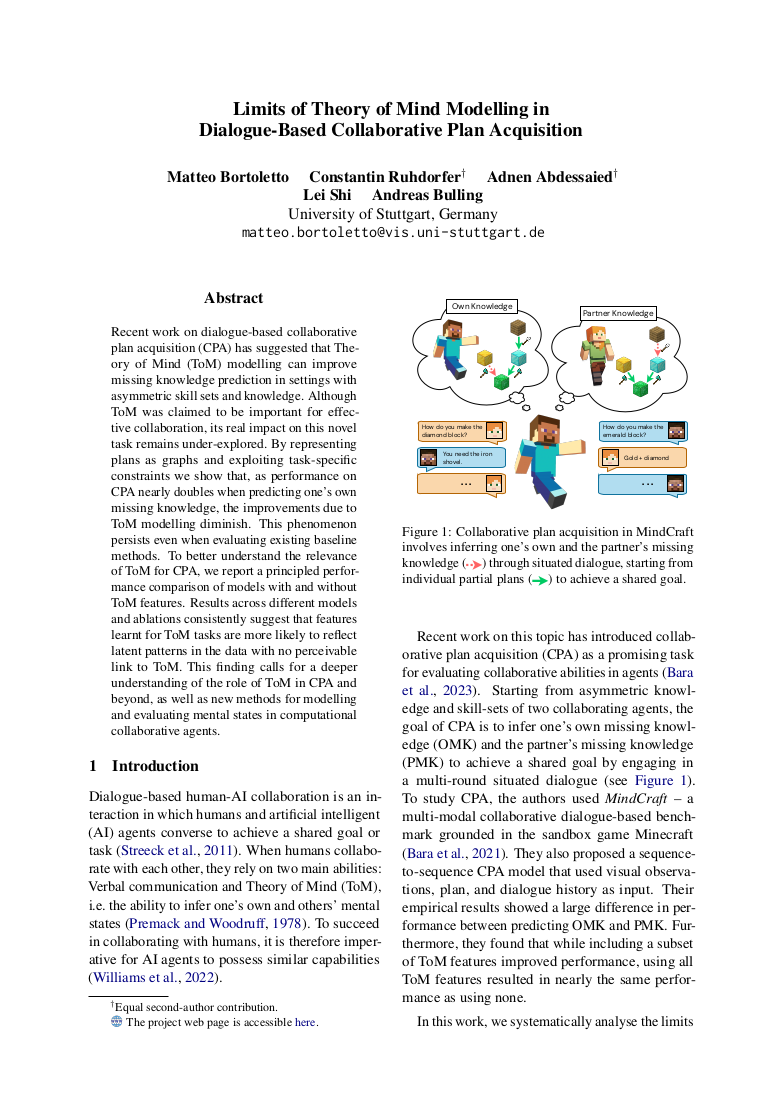

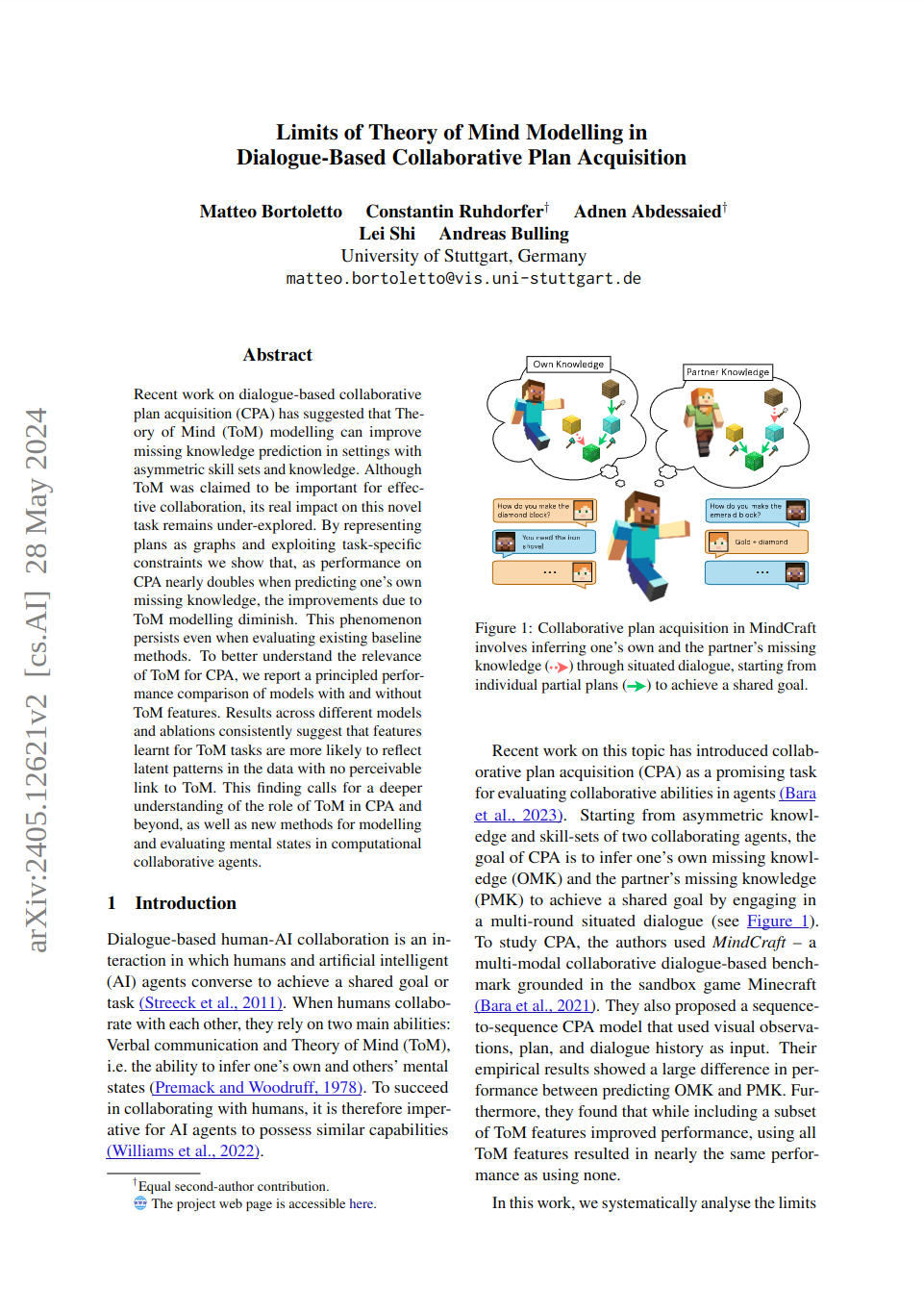

Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition

Matteo Bortoletto, Constantin Ruhdorfer, Adnen Abdessaied, Lei Shi, Andreas Bulling

Proc. 62nd Annual Meeting of the Association for Computational Linguistics (ACL), pp. 1–16, 2024.

Recent work on dialogue-based collaborative plan acquisition (CPA) has suggested that Theory of Mind (ToM) modelling can improve missing knowledge prediction in settings with asymmetric skill-sets and knowledge. Although ToM was claimed to be important for effective collaboration, its real impact on this novel task remains under-explored. By representing plans as graphs and by exploiting task-specific constraints we show that, as performance on CPA nearly doubles when predicting one’s own missing knowledge, the improvements due to ToM modelling diminish. This phenomenon persists even when evaluating existing baseline methods. To better understand the relevance of ToM for CPA, we report a principled performance comparison of models with and without ToM features. Results across different models and ablations consistently suggest that learned ToM features are indeed more likely to reflect latent patterns in the data with no perceivable link to ToM. This finding calls for a deeper understanding of the role of ToM in CPA and beyond, as well as new methods for modelling and evaluating mental states in computational collaborative agents.@inproceedings{bortoletto24_acl, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Abdessaied, Adnen and Shi, Lei and Bulling, Andreas}, title = {Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition}, booktitle = {Proc. 62nd Annual Meeting of the Association for Computational Linguistics (ACL)}, year = {2024}, pages = {1--16}, doi = {} } -

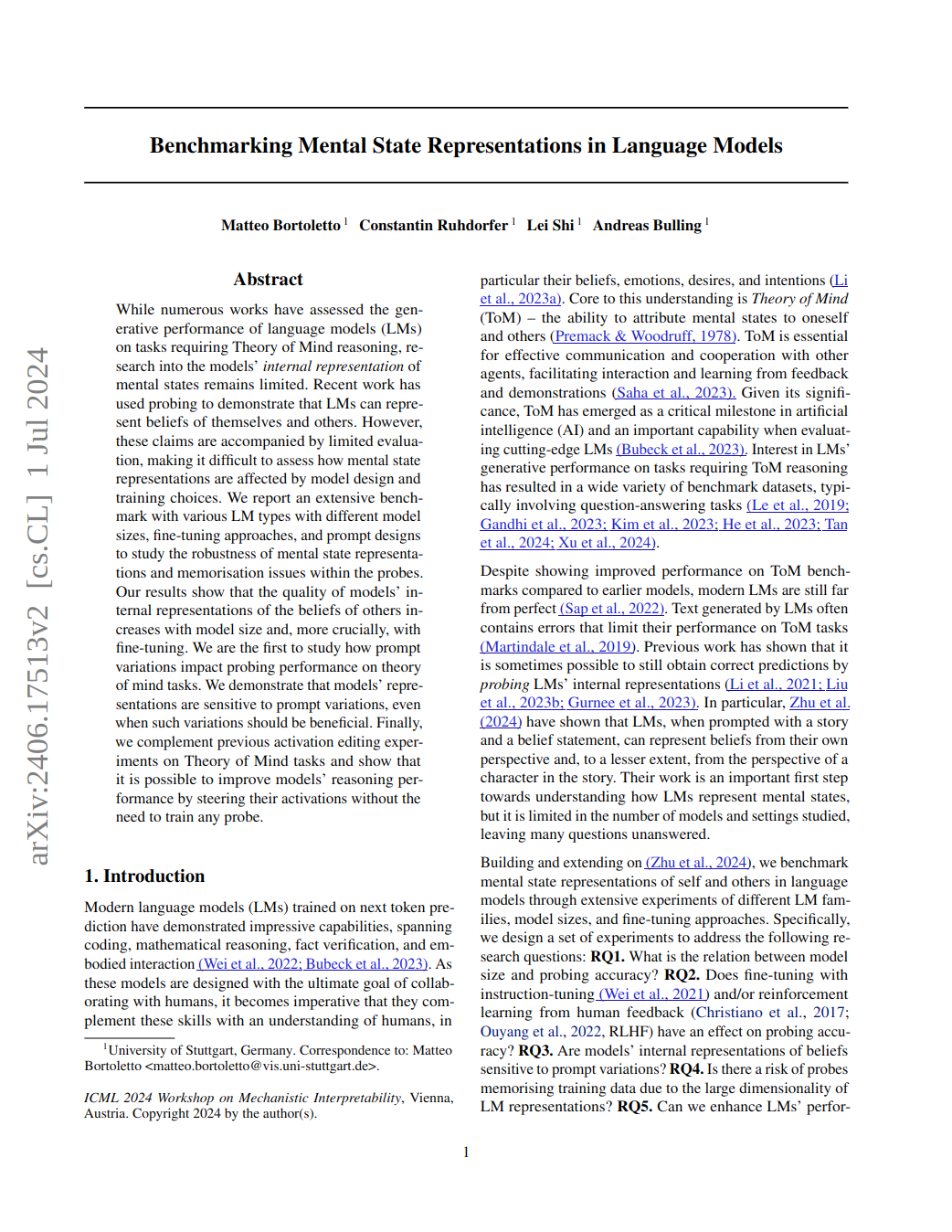

Benchmarking Mental State Representations in Language Models

Matteo Bortoletto, Constantin Ruhdorfer, Lei Shi, Andreas Bulling

Proc. ICML 2024 Workshop on Mechanistic Interpretability, pp. 1–21, 2024.

While numerous works have assessed the generative performance of language models (LMs) on tasks requiring Theory of Mind reasoning, research into the models’ internal representation of mental states remains limited. Recent work has used probing to demonstrate that LMs can represent beliefs of themselves and others. However, these claims are accompanied by limited evaluation, making it difficult to assess how mental state representations are affected by model design and training choices. We report an extensive benchmark with various LM types with different model sizes, fine-tuning approaches, and prompt designs to study the robustness of mental state representations and memorisation issues within the probes. Our results show that the quality of models’ internal representations of the beliefs of others increases with model size and, more crucially, with fine-tuning. We are the first to study how prompt variations impact probing performance on theory of mind tasks. We demonstrate that models’ representations are sensitive to prompt variations, even when such variations should be beneficial. Finally, we complement previous activation editing experiments on Theory of Mind tasks and show that it is possible to improve models’ reasoning performance by steering their activations without the need to train any probe.@inproceedings{bortoletto24_icmlw, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Shi, Lei and Bulling, Andreas}, title = {Benchmarking Mental State Representations in Language Models}, booktitle = {Proc. ICML 2024 Workshop on Mechanistic Interpretability}, year = {2024}, pages = {1--21}, doi = {}, url = {https://openreview.net/forum?id=yEwEVoH9Be} } -

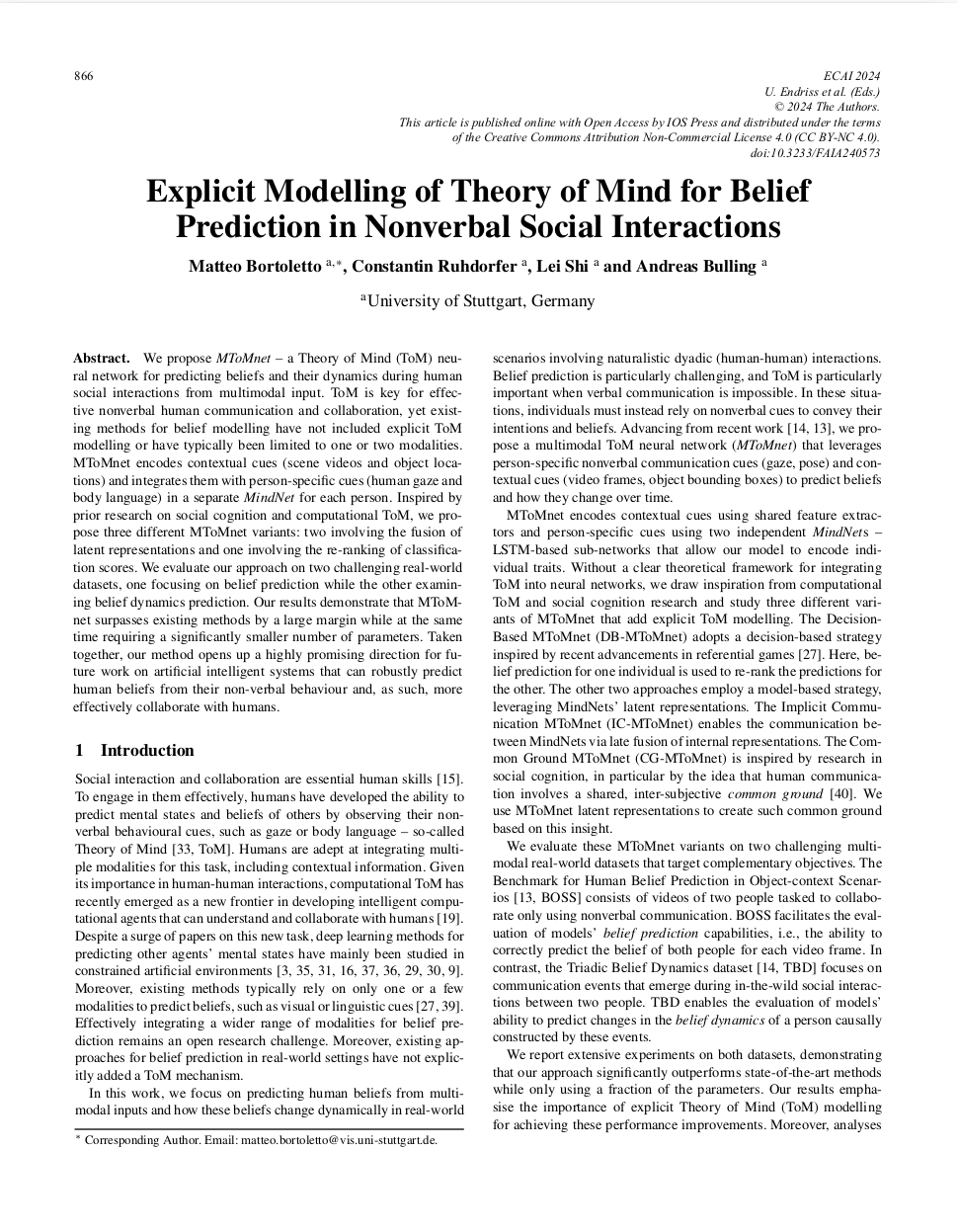

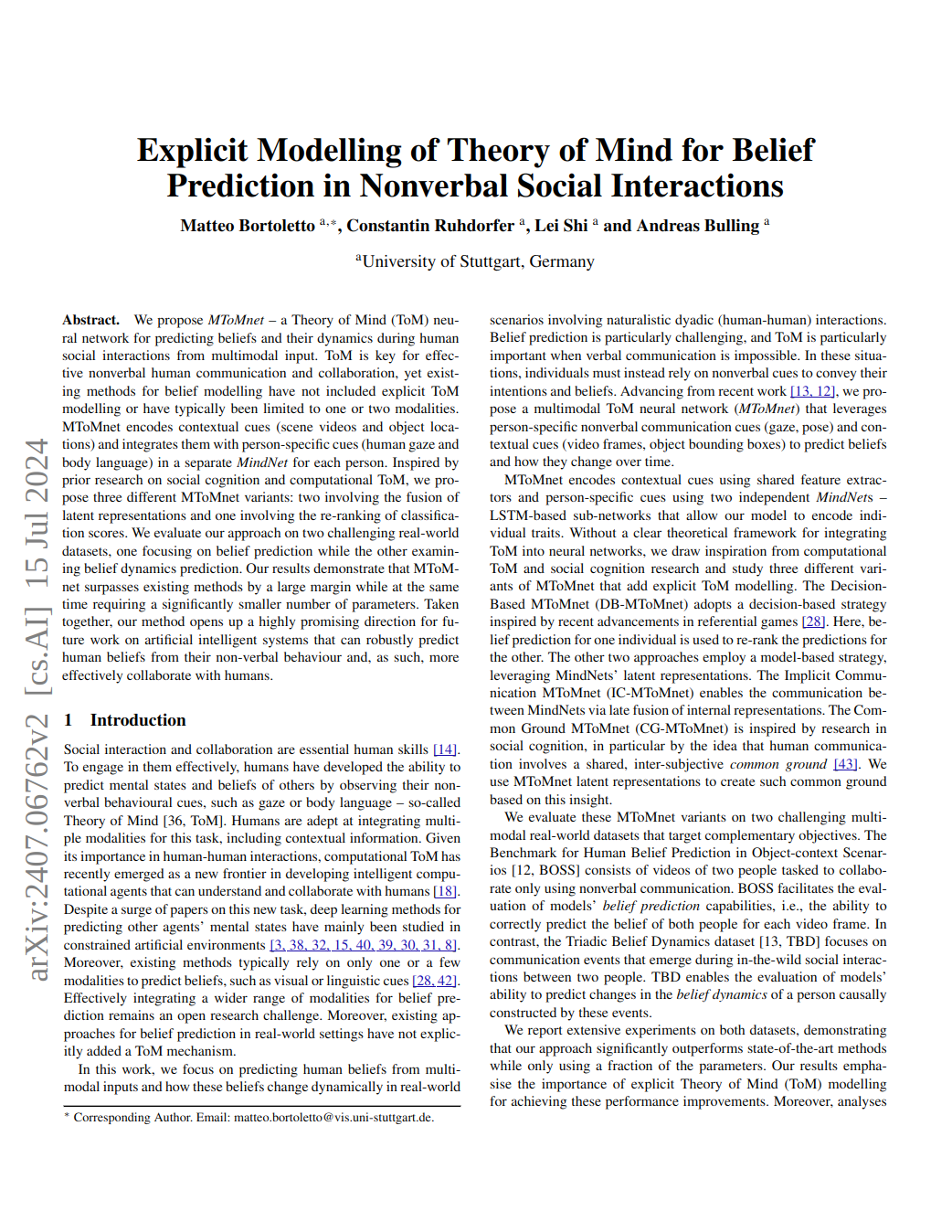

Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions

Matteo Bortoletto, Constantin Ruhdorfer, Lei Shi, Andreas Bulling

Proc. 27th European Conference on Artificial Intelligence (ECAI), pp. 866–873, 2024.

We propose MToMnet - a Theory of Mind (ToM) neural network for predicting beliefs and their dynamics during human social interactions from multimodal input. ToM is key for effective nonverbal human communication and collaboration, yet existing methods for belief modelling have not included explicit ToM modelling or have typically been limited to one or two modalities. MToMnet encodes contextual cues (scene videos and object locations) and integrates them with person-specific cues (human gaze and body language) in a separate MindNet for each person. Inspired by prior research on social cognition and computational ToM, we propose three different MToMnet variants: two involving fusion of latent representations and one involving re-ranking of classification scores. We evaluate our approach on two challenging real-world datasets, one focusing on belief prediction and the other on belief dynamics prediction. Our results demonstrate that MToMnet surpasses existing methods by a large margin while at the same time requiring a significantly smaller number of parameters. Taken together, our method opens up a highly promising direction for future work on artificial intelligent systems that can robustly predict human beliefs from their non-verbal behaviour and, as such, more effectively collaborate with humans.

doi: 10.3233/FAIA240573

Paper: bortoletto24_ecai.pdf

@inproceedings{bortoletto24_ecai, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Shi, Lei and Bulling, Andreas}, title = {Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions}, booktitle = {Proc. 27th European Conference on Artificial Intelligence (ECAI)}, year = {2024}, pages = {866--873}, doi = {10.3233/FAIA240573} }

-

InteRead: An Eye Tracking Dataset of Interrupted Reading

Francesca Zermiani, Prajit Dhar, Ekta Sood, Fabian Kögel, Andreas Bulling, Maria Wirzberger

Proc. 31st Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING), pp. 9154–9169, 2024.

Eye movements during reading offer a window into cognitive processes and language comprehension, but the scarcity of reading data with interruptions – which learners frequently encounter in their everyday learning environments – hampers advances in the development of intelligent learning technologies. We introduce InteRead – a novel 50-participant dataset of gaze data recorded during self-paced reading of real-world text. InteRead further offers fine-grained annotations of interruptions interspersed throughout the text as well as resumption lags incurred by these interruptions. Interruptions were triggered automatically once readers reached predefined target words. We validate our dataset by reporting interdisciplinary analyses on different measures of gaze behavior. In line with prior research, our analyses show that the interruptions as well as word length and word frequency effects significantly impact eye movements during reading. We also explore individual differences within our dataset, shedding light on the potential for tailored educational solutions. InteRead is accessible from our datasets web-page: https://www.ife.uni-stuttgart.de/en/llis/research/datasets/.@inproceedings{zermiani24_coling, title = {InteRead: An Eye Tracking Dataset of Interrupted Reading}, author = {Zermiani, Francesca and Dhar, Prajit and Sood, Ekta and Kögel, Fabian and Bulling, Andreas and Wirzberger, Maria}, year = {2024}, booktitle = {Proc. 31st Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING)}, pages = {9154--9169}, doi = {}, url = {https://aclanthology.org/2024.lrec-main.802/} } -

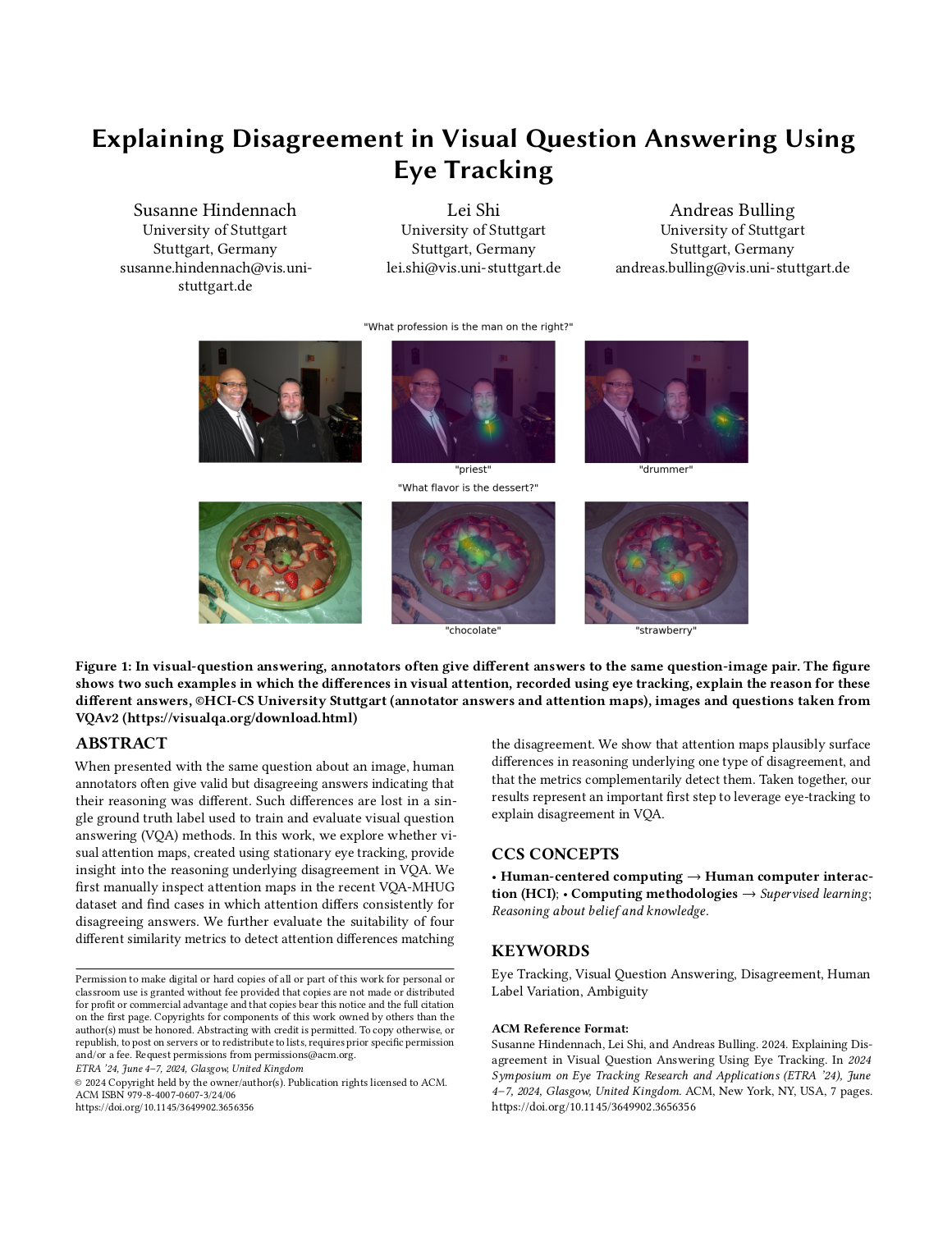

Explaining Disagreement in Visual Question Answering Using Eye Tracking

Susanne Hindennach, Lei Shi, Andreas Bulling

Proc. International Workshop on Pervasive Eye Tracking and Mobile Gaze-Based Interaction (PETMEI), pp. 1–7, 2024.

When presented with the same question about an image, human annotators often give valid but disagreeing answers indicating that their reasoning was different. Such differences are lost in a single ground truth label used to train and evaluate visual question answering (VQA) methods. In this work, we explore whether visual attention maps, created using stationary eye tracking, provide insight into the reasoning underlying disagreement in VQA. We first manually inspect attention maps in the recent VQA-MHUG dataset and find cases in which attention differs consistently for disagreeing answers. We further evaluate the suitability of four different similarity metrics to detect attention differences matching the disagreement. We show that attention maps plausibly surface differences in reasoning underlying one type of disagreement, and that the metrics complementarily detect them. Taken together, our results represent an important first step to leverage eye-tracking to explain disagreement in VQA.Paper: hindennach24_petmei.pdf@inproceedings{hindennach24_petmei, title = {Explaining Disagreement in Visual Question Answering Using Eye Tracking}, author = {Hindennach, Susanne and Shi, Lei and Bulling, Andreas}, year = {2024}, pages = {1--7}, doi = {10.1145/3649902.3656356}, booktitle = {Proc. International Workshop on Pervasive Eye Tracking and Mobile Gaze-Based Interaction (PETMEI)} } -

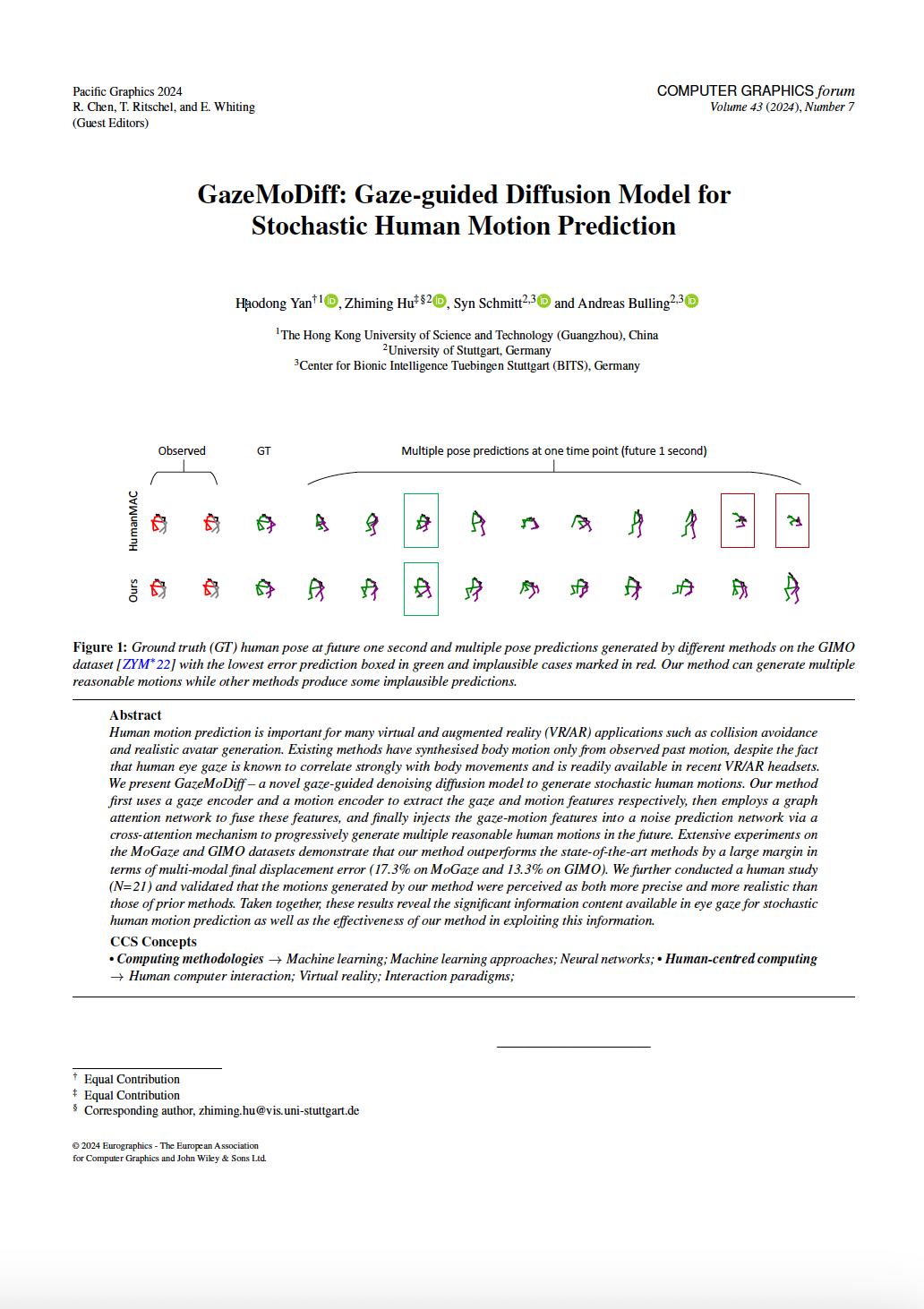

GazeMoDiff: Gaze-guided Diffusion Model for Stochastic Human Motion Prediction

Haodong Yan, Zhiming Hu, Syn Schmitt, Andreas Bulling

Proc. 32nd Pacific Conference on Computer Graphics and Applications (PG), pp. 1–10, 2024.

Human motion prediction is important for many virtual and augmented reality (VR/AR) applications such as collision avoidance and realistic avatar generation. Existing methods have synthesised body motion only from observed past motion, despite the fact that human eye gaze is known to correlate strongly with body movements and is readily available in recent VR/AR headsets. We present GazeMoDiff – a novel gaze-guided denoising diffusion model to generate stochastic human motions. Our method first uses a gaze encoder and a motion encoder to extract the gaze and motion features respectively, then employs a graph attention network to fuse these features, and finally injects the gaze-motion features into a noise prediction network via a cross-attention mechanism to progressively generate multiple reasonable human motions in the future. Extensive experiments on the MoGaze and GIMO datasets demonstrate that our method outperforms the state-of-the-art methods by a large margin in terms of multi-modal final displacement error (17.3% on MoGaze and 13.3% on GIMO). We further conducted a human study (N=21) and validated that the motions generated by our method were perceived as both more precise and more realistic than those of prior methods. Taken together, these results reveal the significant information content available in eye gaze for stochastic human motion prediction as well as the effectiveness of our method in exploiting this information.Paper: yan24_pg.pdf@inproceedings{yan24_pg, title = {GazeMoDiff: Gaze-guided Diffusion Model for Stochastic Human Motion Prediction}, author = {Yan, Haodong and Hu, Zhiming and Schmitt, Syn and Bulling, Andreas}, year = {2024}, doi = {}, pages = {1--10}, booktitle = {Proc. 32nd Pacific Conference on Computer Graphics and Application (PG)} } -

Saliency3D: a 3D Saliency Dataset Collected on Screen

Yao Wang, Qi Dai, Mihai Bâce, Karsten Klein, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2024.

While visual saliency has recently been studied in 3D, the experimental setup for collecting 3D saliency data can be expensive and cumbersome. To address this challenge, we propose a novel experimental design that utilizes an eye tracker on a screen to collect 3D saliency data. Our experimental design reduces the cost and complexity of 3D saliency dataset collection. We first collect gaze data on a screen, then we map them to 3D saliency data through perspective transformation. Using this method, we collect a 3D saliency dataset (49,276 fixations) comprising 10 participants looking at sixteen objects. Moreover, we examine the viewing preferences for objects and discuss our findings in this study. Our results indicate potential preferred viewing directions and a correlation between salient features and the variation in viewing directions.Paper: wang24_etras.pdf@inproceedings{wang24_etras, title = {Saliency3D: a 3D Saliency Dataset Collected on Screen}, author = {Wang, Yao and Dai, Qi and B{\^a}ce, Mihai and Klein, Karsten and Bulling, Andreas}, year = {2024}, pages = {1--9}, booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)}, doi = {10.1145/3649902.3653350} } -

MultiMediate’24: Multi-Domain Engagement Estimation

Philipp Müller, Michal Balazia, Tobias Baur, Michael Dietz, Alexander Heimerl, Anna Penzkofer, Dominik Schiller, François Brémond, Jan Alexandersson, Elisabeth André, Andreas Bulling

Proceedings of the 32nd ACM International Conference on Multimedia, pp. 11377–11382, 2024.

Estimating the momentary level of participants’ engagement is an important prerequisite for assistive systems that support human interactions. Previous work has addressed this task in within-domain evaluation scenarios, i.e. training and testing on the same dataset. This is in contrast to real-life scenarios where domain shifts between training and testing data frequently occur. With MultiMediate’24, we present the first challenge addressing multi-domain engagement estimation. As training data, we utilise the NOXI database of dyadic novice-expert interactions. In addition to within-domain test data, we add two new test domains. First, we introduce recordings following the NOXI protocol but covering languages that are not present in the NOXI training data. Second, we collected novel engagement annotations on the MPIIGroupInteraction dataset, which consists of group discussions between three to four people. In this way, MultiMediate’24 evaluates the ability of approaches to generalise across factors such as language and cultural background, group size, task, and screen-mediated vs. face-to-face interaction. This paper describes the MultiMediate’24 challenge and presents baseline results. In addition, we discuss selected challenge solutions.

Paper: mueller24_mm.pdf

@inproceedings{mueller24_mm, author = {M{\"{u}}ller, Philipp and Balazia, Michal and Baur, Tobias and Dietz, Michael and Heimerl, Alexander and Penzkofer, Anna and Schiller, Dominik and Brémond, François and Alexandersson, Jan and André, Elisabeth and Bulling, Andreas}, title = {MultiMediate'24: Multi-Domain Engagement Estimation}, year = {2024}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, doi = {10.1145/3664647.3689004}, booktitle = {Proceedings of the 32nd ACM International Conference on Multimedia}, pages = {11377--11382} }

-

VSA4VQA: Scaling A Vector Symbolic Architecture To Visual Question Answering on Natural Images

Anna Penzkofer, Lei Shi, Andreas Bulling

Proc. 46th Annual Meeting of the Cognitive Science Society (CogSci), 2024.

While Vector Symbolic Architectures (VSAs) are promising for modelling spatial cognition, their application is currently limited to artificially generated images and simple spatial queries. We propose VSA4VQA – a novel 4D implementation of VSAs that implements a mental representation of natural images for the challenging task of Visual Question Answering (VQA). VSA4VQA is the first model to scale a VSA to complex spatial queries. Our method is based on the Semantic Pointer Architecture (SPA) to encode objects in a hyper-dimensional vector space. To encode natural images, we extend the SPA to include dimensions for objects’ width and height in addition to their spatial location. To perform spatial queries, we further introduce learned spatial query masks and integrate a pre-trained vision-language model for answering attribute-related questions. We evaluate our method on the GQA benchmark dataset and show that it can effectively encode natural images, achieving competitive performance to state-of-the-art deep learning methods for zero-shot VQA.

@inproceedings{penzkofer24_cogsci, author = {Penzkofer, Anna and Shi, Lei and Bulling, Andreas}, title = {{VSA4VQA}: {Scaling} {A} {Vector} {Symbolic} {Architecture} {To} {Visual} {Question} {Answering} on {Natural} {Images}}, booktitle = {Proc. 46th Annual Meeting of the Cognitive Science Society (CogSci)}, year = {2024}, volume = {46}, url = {https://escholarship.org/uc/item/26j7v1nf} }

-

Quantifying Human Upper Limb Stiffness Responses Based on a Computationally Efficient Neuromusculoskeletal Arm Model

Maria Sapounaki, Pierre Schumacher, Winfried Ilg, Martin Giese, Christophe Maufroy, Andreas Bulling, Syn Schmitt, Daniel F.B. Haeufle, Isabell Wochner

Proc. 10th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, pp. 1–6, 2024.

The mimicking of human-like arm movement characteristics involves the consideration of three factors during control policy synthesis: (a) chosen task requirements, (b) inclusion of noise during movement execution and (c) chosen optimality principles. Previous studies showed that when considering these factors (a-c) individually, it is possible to synthesize arm movements that either kinematically match the experimental data or reproduce the stereotypical triphasic muscle activation pattern. However, to date no quantitative comparison has been made on how realistic the arm movement generated by each factor is, or on whether a partial or total combination of all factors results in arm movements with human-like kinematic characteristics and a triphasic muscle pattern. To investigate this, we used reinforcement learning to learn a control policy for a musculoskeletal arm model, aiming to discern which combination of factors (a-c) results in realistic arm movements according to four frequently reported stereotypical characteristics. Our findings indicate that incorporating velocity and acceleration requirements into the reaching task, employing reward terms that encourage minimization of mechanical work, hand jerk, and control effort, along with the inclusion of noise during movement, leads to the emergence of realistic human arm movements in reinforcement learning. We expect that the gained insights will help in the future to better predict desired arm movements and corrective forces in wearable assistive devices.

Paper: sapounaki24_biorob.pdf

@inproceedings{sapounaki24_biorob, title = {Quantifying Human Upper Limb Stiffness Responses Based on a Computationally Efficient Neuromusculoskeletal Arm Model}, author = {Sapounaki, Maria and Schumacher, Pierre and Ilg, Winfried and Giese, Martin and Maufroy, Christophe and Bulling, Andreas and Schmitt, Syn and Haeufle, Daniel F.B. and Wochner, Isabell}, year = {2024}, booktitle = {Proc. 10th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics}, pages = {1--6} }

-

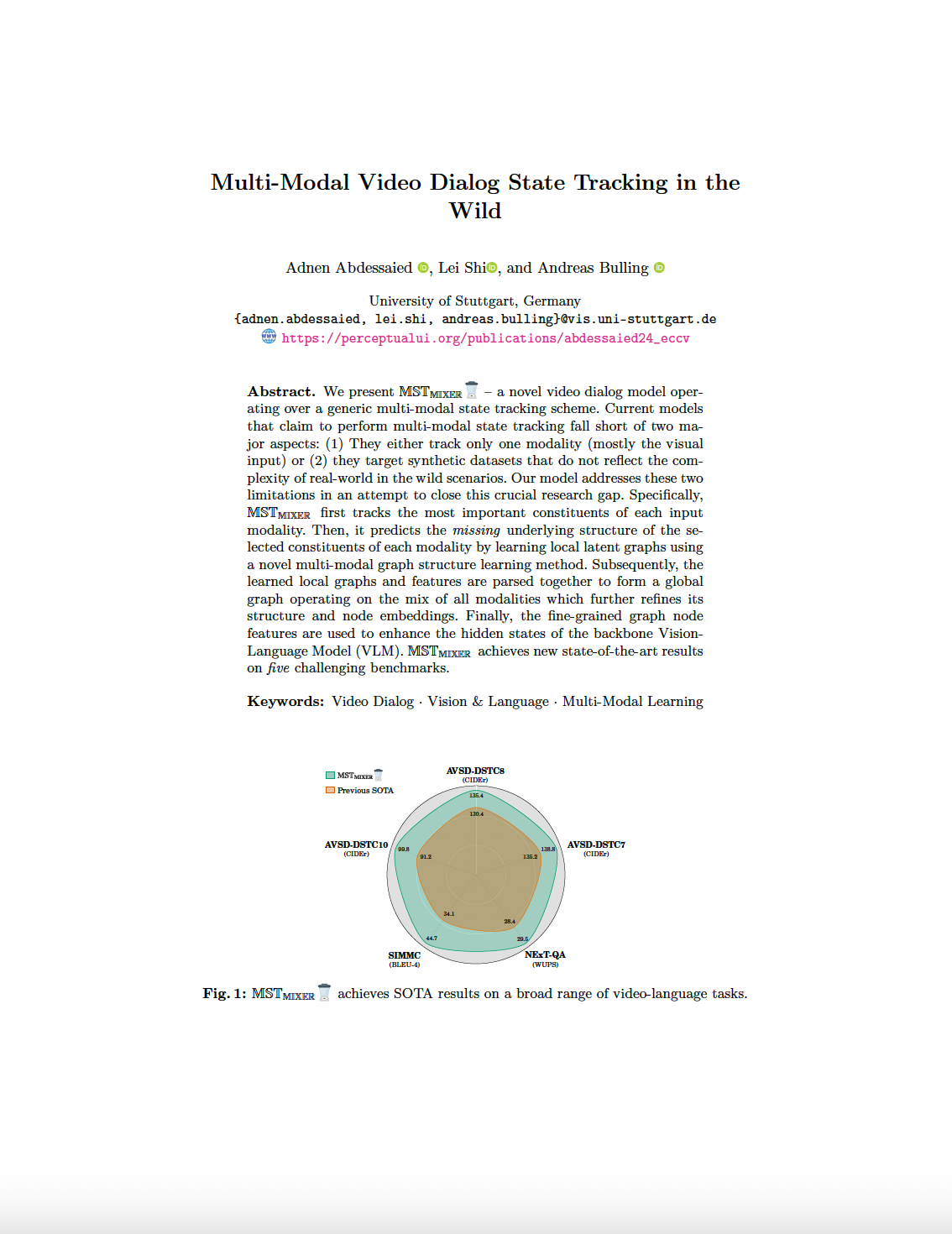

Multi-Modal Video Dialog State Tracking in the Wild

Adnen Abdessaied, Lei Shi, Andreas Bulling

Proc. 18th European Conference on Computer Vision (ECCV), pp. 1–25, 2024.

We present MST-MIXER – a novel video dialog model operating over a generic multi-modal state tracking scheme. Current models that claim to perform multi-modal state tracking fall short in two major respects: (1) they either track only one modality (mostly the visual input) or (2) they target synthetic datasets that do not reflect the complexity of real-world, in-the-wild scenarios. Our model addresses these two limitations in an attempt to close this crucial research gap. Specifically, MST-MIXER first tracks the most important constituents of each input modality. Then, it predicts the missing underlying structure of the selected constituents of each modality by learning local latent graphs using a novel multi-modal graph structure learning method. Subsequently, the learned local graphs and features are parsed together to form a global graph operating on the mix of all modalities, which further refines its structure and node embeddings. Finally, the fine-grained graph node features are used to enhance the hidden states of the backbone Vision-Language Model (VLM). MST-MIXER achieves new state-of-the-art results on five challenging benchmarks.

@inproceedings{abdessaied24_eccv, author = {Abdessaied, Adnen and Shi, Lei and Bulling, Andreas}, title = {Multi-Modal Video Dialog State Tracking in the Wild}, booktitle = {Proc. 18th European Conference on Computer Vision (ECCV)}, year = {2024}, pages = {1--25} }

-

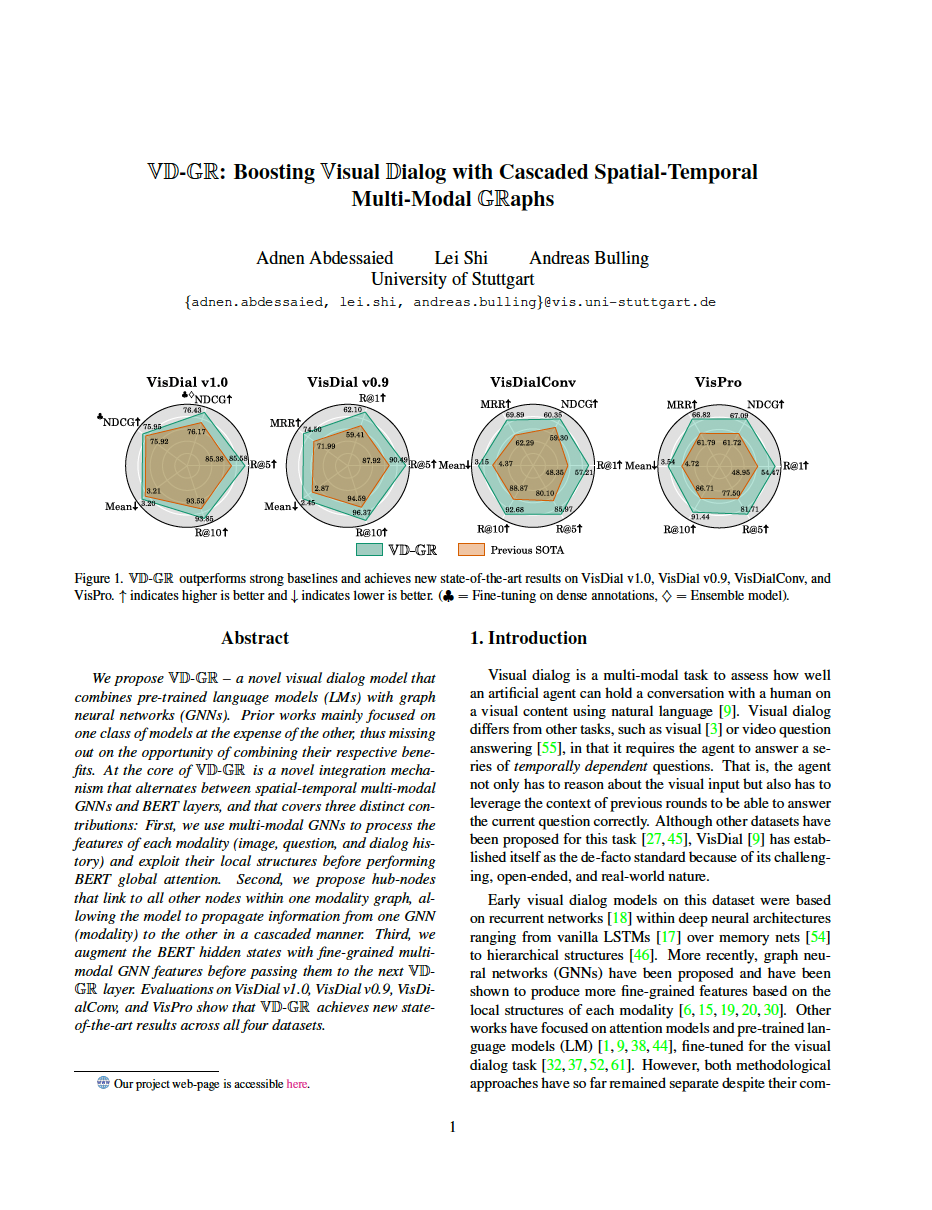

VD-GR: Boosting Visual Dialog with Cascaded Spatial-Temporal Multi-Modal GRaphs

Adnen Abdessaied, Lei Shi, Andreas Bulling

Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pp. 5805–5814, 2024.

We propose VD-GR – a novel visual dialog model that combines pre-trained language models (LMs) with graph neural networks (GNNs). Prior works mainly focused on one class of models at the expense of the other, thus missing out on the opportunity of combining their respective benefits. At the core of VD-GR is a novel integration mechanism that alternates between spatial-temporal multi-modal GNNs and BERT layers, and that covers three distinct contributions: First, we use multi-modal GNNs to process the features of each modality (image, question, and dialog history) and exploit their local structures before performing BERT global attention. Second, we propose hub-nodes that link to all other nodes within one modality graph, allowing the model to propagate information from one GNN (modality) to the other in a cascaded manner. Third, we augment the BERT hidden states with fine-grained multi-modal GNN features before passing them to the next VD-GR layer. Evaluations on VisDial v1.0, VisDial v0.9, VisDialConv, and VisPro show that VD-GR achieves new state-of-the-art results across all four datasets.

@inproceedings{abdessaied24_wacv, author = {Abdessaied, Adnen and Shi, Lei and Bulling, Andreas}, title = {VD-GR: Boosting Visual Dialog with Cascaded Spatial-Temporal Multi-Modal GRaphs}, booktitle = {Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, year = {2024}, pages = {5805--5814} }

-

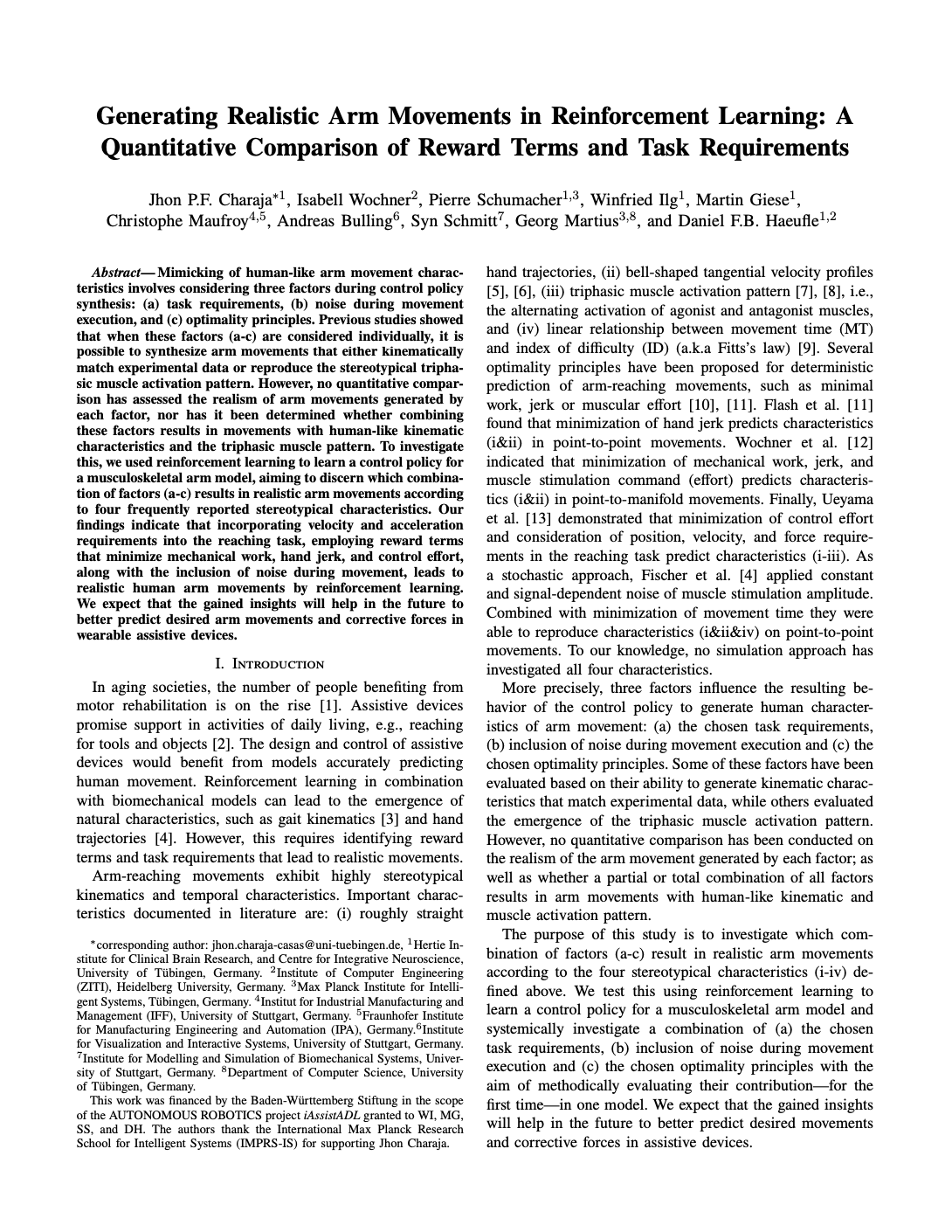

Generating Realistic Arm Movements in Reinforcement Learning: A Quantitative Comparison of Reward Terms and Task Requirements

Jhon Paul Feliciano Charaja Casas, Isabell Wochner, Pierre Schumacher, Winfried Ilg, Martin Giese, Christophe Maufroy, Andreas Bulling, Syn Schmitt, Daniel F.B. Haeufle

Proc. 10th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, pp. 1–6, 2024.

The mimicking of human-like arm movement characteristics involves the consideration of three factors during control policy synthesis: (a) chosen task requirements, (b) inclusion of noise during movement execution and (c) chosen optimality principles. Previous studies showed that when considering these factors (a-c) individually, it is possible to synthesize arm movements that either kinematically match the experimental data or reproduce the stereotypical triphasic muscle activation pattern. However, to date no quantitative comparison has been made on how realistic the arm movement generated by each factor is, or on whether a partial or total combination of all factors results in arm movements with human-like kinematic characteristics and a triphasic muscle pattern. To investigate this, we used reinforcement learning to learn a control policy for a musculoskeletal arm model, aiming to discern which combination of factors (a-c) results in realistic arm movements according to four frequently reported stereotypical characteristics. Our findings indicate that incorporating velocity and acceleration requirements into the reaching task, employing reward terms that encourage minimization of mechanical work, hand jerk, and control effort, along with the inclusion of noise during movement, leads to the emergence of realistic human arm movements in reinforcement learning. We expect that the gained insights will help in the future to better predict desired arm movements and corrective forces in wearable assistive devices.

Paper: casas24_biorob.pdf

@inproceedings{casas24_biorob, title = {Generating Realistic Arm Movements in Reinforcement Learning: A Quantitative Comparison of Reward Terms and Task Requirements}, author = {Casas, Jhon Paul Feliciano Charaja and Wochner, Isabell and Schumacher, Pierre and Ilg, Winfried and Giese, Martin and Maufroy, Christophe and Bulling, Andreas and Schmitt, Syn and Haeufle, Daniel F.B.}, year = {2024}, booktitle = {Proc. 10th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics}, pages = {1--6} }

Technical Reports

-

PrivatEyes: Appearance-based Gaze Estimation Using Federated Secure Multi-Party Computation

Mayar Elfares, Pascal Reisert, Zhiming Hu, Wenwu Tang, Ralf Küsters, Andreas Bulling

arXiv:2402.18970, pp. 1–22, 2024.

The latest gaze estimation methods require large-scale training data, but their collection and exchange pose significant privacy risks. We propose PrivatEyes - the first privacy-enhancing training approach for appearance-based gaze estimation based on federated learning (FL) and secure multi-party computation (MPC). PrivatEyes enables training gaze estimators on multiple local datasets across different users and server-based secure aggregation of the individual estimators’ updates. PrivatEyes guarantees that individual gaze data remains private even if a majority of the aggregating servers is malicious. We also introduce a new data leakage attack, DualView, that shows that PrivatEyes limits the leakage of private training data more effectively than previous approaches. Evaluations on the MPIIGaze, MPIIFaceGaze, GazeCapture, and NVGaze datasets further show that the improved privacy does not lead to a lower gaze estimation accuracy or substantially higher computational costs - both of which are on par with its non-secure counterparts.

@techreport{elfares24_arxiv, title = {PrivatEyes: Appearance-based Gaze Estimation Using Federated Secure Multi-Party Computation}, author = {Elfares, Mayar and Reisert, Pascal and Hu, Zhiming and Tang, Wenwu and Küsters, Ralf and Bulling, Andreas}, year = {2024}, doi = {10.48550/arXiv.2402.18970}, pages = {1--22} }

-

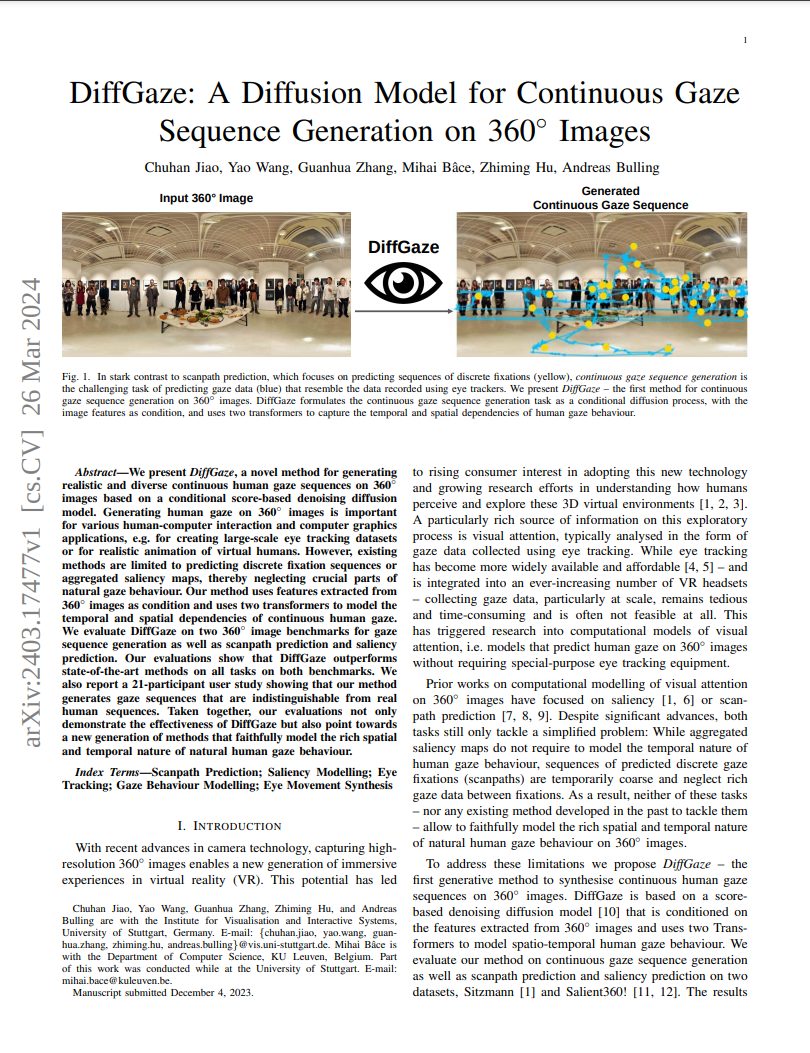

DiffGaze: A Diffusion Model for Continuous Gaze Sequence Generation on 360° Images

Chuhan Jiao, Yao Wang, Guanhua Zhang, Mihai Bâce, Zhiming Hu, Andreas Bulling

arXiv:2403.17477, pp. 1–13, 2024.

We present DiffGaze, a novel method for generating realistic and diverse continuous human gaze sequences on 360° images based on a conditional score-based denoising diffusion model. Generating human gaze on 360° images is important for various human-computer interaction and computer graphics applications, e.g. for creating large-scale eye tracking datasets or for realistic animation of virtual humans. However, existing methods are limited to predicting discrete fixation sequences or aggregated saliency maps, thereby neglecting crucial parts of natural gaze behaviour. Our method uses features extracted from 360° images as condition and uses two transformers to model the temporal and spatial dependencies of continuous human gaze. We evaluate DiffGaze on two 360° image benchmarks for gaze sequence generation as well as scanpath prediction and saliency prediction. Our evaluations show that DiffGaze outperforms state-of-the-art methods on all tasks on both benchmarks. We also report a 21-participant user study showing that our method generates gaze sequences that are indistinguishable from real human sequences. Taken together, our evaluations not only demonstrate the effectiveness of DiffGaze but also point towards a new generation of methods that faithfully model the rich spatial and temporal nature of natural human gaze behaviour.

Paper Access: https://arxiv.org/abs/2403.17477

@techreport{jiao24_arxiv, title = {DiffGaze: A Diffusion Model for Continuous Gaze Sequence Generation on 360° Images}, author = {Jiao, Chuhan and Wang, Yao and Zhang, Guanhua and B{\^a}ce, Mihai and Hu, Zhiming and Bulling, Andreas}, year = {2024}, pages = {1--13}, url = {https://arxiv.org/abs/2403.17477} }

-

GazeMotion: Gaze-guided Human Motion Forecasting

Zhiming Hu, Syn Schmitt, Daniel Häufle, Andreas Bulling

arXiv:2403.09885, pp. 1–6, 2024.

We present GazeMotion, a novel method for human motion forecasting that combines information on past human poses with human eye gaze. Inspired by evidence from behavioural sciences showing that human eye and body movements are closely coordinated, GazeMotion first predicts future eye gaze from past gaze, then fuses predicted future gaze and past poses into a gaze-pose graph, and finally uses a residual graph convolutional network to forecast body motion. We extensively evaluate our method on the MoGaze, ADT, and GIMO benchmark datasets and show that it outperforms state-of-the-art methods by up to 7.4% improvement in mean per joint position error. Using head direction as a proxy to gaze, our method still achieves an average improvement of 5.5%. We finally report an online user study showing that our method also outperforms prior methods in terms of perceived realism. These results show the significant information content available in eye gaze for human motion forecasting as well as the effectiveness of our method in exploiting this information.

Paper Access: https://arxiv.org/abs/2403.09885

@techreport{hu24_arxiv, author = {Hu, Zhiming and Schmitt, Syn and Häufle, Daniel and Bulling, Andreas}, title = {GazeMotion: Gaze-guided Human Motion Forecasting}, year = {2024}, pages = {1--6}, url = {https://arxiv.org/abs/2403.09885} }

-

ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos

Lei Shi, Paul Burkner, Andreas Bulling

arXiv:2403.08591, pp. 1–6, 2024.

We present ActionDiffusion – a novel diffusion model for procedure planning in instructional videos that is the first to take temporal inter-dependencies between actions into account in a diffusion model for procedure planning. This approach is in stark contrast to existing methods that fail to exploit the rich information content available in the particular order in which actions are performed. Our method unifies the learning of temporal dependencies between actions and denoising of the action plan in the diffusion process by projecting the action information into the noise space. This is achieved 1) by adding action embeddings in the noise masks in the noise-adding phase and 2) by introducing an attention mechanism in the noise prediction network to learn the correlations between different action steps. We report extensive experiments on three instructional video benchmark datasets (CrossTask, Coin, and NIV) and show that our method outperforms previous state-of-the-art methods on all metrics on CrossTask and NIV and all metrics except accuracy on the Coin dataset. We show that by adding action embeddings into the noise mask the diffusion model can better learn action temporal dependencies and increase performance on procedure planning.

Paper Access: https://arxiv.org/abs/2403.08591

@techreport{shi24_arxiv, title = {ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos}, author = {Shi, Lei and Burkner, Paul and Bulling, Andreas}, year = {2024}, pages = {1--6}, url = {https://arxiv.org/abs/2403.08591} }

-

Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition

Matteo Bortoletto, Constantin Ruhdorfer, Adnen Abdessaied, Lei Shi, Andreas Bulling

arXiv:2405.12621, pp. 1–16, 2024.

Recent work on dialogue-based collaborative plan acquisition (CPA) has suggested that Theory of Mind (ToM) modelling can improve missing knowledge prediction in settings with asymmetric skill-sets and knowledge. Although ToM was claimed to be important for effective collaboration, its real impact on this novel task remains under-explored. By representing plans as graphs and by exploiting task-specific constraints we show that, as performance on CPA nearly doubles when predicting one’s own missing knowledge, the improvements due to ToM modelling diminish. This phenomenon persists even when evaluating existing baseline methods. To better understand the relevance of ToM for CPA, we report a principled performance comparison of models with and without ToM features. Results across different models and ablations consistently suggest that learned ToM features are indeed more likely to reflect latent patterns in the data with no perceivable link to ToM. This finding calls for a deeper understanding of the role of ToM in CPA and beyond, as well as new methods for modelling and evaluating mental states in computational collaborative agents.

@techreport{bortoletto24_arxiv, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Abdessaied, Adnen and Shi, Lei and Bulling, Andreas}, title = {Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition}, year = {2024}, pages = {1--16}, url = {https://arxiv.org/abs/2405.12621} }

-

Benchmarking Mental State Representations in Language Models

Matteo Bortoletto, Constantin Ruhdorfer, Lei Shi, Andreas Bulling

arXiv:2406.17513, pp. 1–21, 2024.

While numerous works have assessed the generative performance of language models (LMs) on tasks requiring Theory of Mind reasoning, research into the models’ internal representation of mental states remains limited. Recent work has used probing to demonstrate that LMs can represent beliefs of themselves and others. However, these claims are accompanied by limited evaluation, making it difficult to assess how mental state representations are affected by model design and training choices. We report an extensive benchmark with various LM types with different model sizes, fine-tuning approaches, and prompt designs to study the robustness of mental state representations and memorisation issues within the probes. Our results show that the quality of models’ internal representations of the beliefs of others increases with model size and, more crucially, with fine-tuning. We are the first to study how prompt variations impact probing performance on theory of mind tasks. We demonstrate that models’ representations are sensitive to prompt variations, even when such variations should be beneficial. Finally, we complement previous activation editing experiments on Theory of Mind tasks and show that it is possible to improve models’ reasoning performance by steering their activations without the need to train any probe.

@techreport{bortoletto24_arxiv_2, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Shi, Lei and Bulling, Andreas}, title = {Benchmarking Mental State Representations in Language Models}, year = {2024}, pages = {1--21}, url = {https://arxiv.org/abs/2406.17513} }

-

Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions

Matteo Bortoletto, Constantin Ruhdorfer, Lei Shi, Andreas Bulling

arXiv:2407.06762, pp. 1–11, 2024.

We propose MToMnet - a Theory of Mind (ToM) neural network for predicting beliefs and their dynamics during human social interactions from multimodal input. ToM is key for effective nonverbal human communication and collaboration, yet existing methods for belief modelling have not included explicit ToM modelling or have typically been limited to one or two modalities. MToMnet encodes contextual cues (scene videos and object locations) and integrates them with person-specific cues (human gaze and body language) in a separate MindNet for each person. Inspired by prior research on social cognition and computational ToM, we propose three different MToMnet variants: two involving fusion of latent representations and one involving re-ranking of classification scores. We evaluate our approach on two challenging real-world datasets, one focusing on belief prediction and the other on belief dynamics prediction. Our results demonstrate that MToMnet surpasses existing methods by a large margin while at the same time requiring a significantly smaller number of parameters. Taken together, our method opens up a highly promising direction for future work on artificial intelligent systems that can robustly predict human beliefs from their non-verbal behaviour and, as such, more effectively collaborate with humans.

Paper Access: https://arxiv.org/abs/2407.06762

@techreport{bortoletto24_arxiv_3, author = {Bortoletto, Matteo and Ruhdorfer, Constantin and Shi, Lei and Bulling, Andreas}, title = {Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions}, year = {2024}, pages = {1--11}, url = {https://arxiv.org/abs/2407.06762} }

-

Learning User Embeddings from Human Gaze for Personalised Saliency Prediction

Florian Strohm, Mihai Bâce, Andreas Bulling

arXiv:2403.13653, pp. 1–15, 2024.

Reusable embeddings of user behaviour have shown significant performance improvements for the personalised saliency prediction task. However, prior works require explicit user characteristics and preferences as input, which are often difficult to obtain. We present a novel method to extract user embeddings from pairs of natural images and corresponding saliency maps generated from a small amount of user-specific eye tracking data. At the core of our method is a Siamese convolutional neural encoder that learns the user embeddings by contrasting the image and personal saliency map pairs of different users. Evaluations on two saliency datasets show that the generated embeddings have high discriminative power, are effective at refining universal saliency maps to the individual users, and generalise well across users and images. Finally, based on our model’s ability to encode individual user characteristics, our work points towards other applications that can benefit from reusable embeddings of gaze behaviour.

Paper Access: https://arxiv.org/abs/2403.13653

@techreport{strohm24_arxiv, title = {Learning User Embeddings from Human Gaze for Personalised Saliency Prediction}, author = {Strohm, Florian and Bâce, Mihai and Bulling, Andreas}, year = {2024}, pages = {1--15}, url = {https://arxiv.org/abs/2403.13653} }

-

MultiMediate’24: Multi-Domain Engagement Estimation

Philipp Müller, Michal Balazia, Tobias Baur, Michael Dietz, Alexander Heimerl, Anna Penzkofer, Dominik Schiller, François Brémond, Jan Alexandersson, Elisabeth André, Andreas Bulling

arXiv:2408.16625, pp. 1–6, 2024.

Estimating the momentary level of participant’s engagement is an important prerequisite for assistive systems that support human interactions. Previous work has addressed this task in within-domain evaluation scenarios, i.e. training and testing on the same dataset. This is in contrast to real-life scenarios where domain shifts between training and testing data frequently occur. With MultiMediate’24, we present the first challenge addressing multi-domain engagement estimation. As training data, we utilise the NOXI database of dyadic novice-expert interactions. In addition to within-domain test data, we add two new test domains. First, we introduce recordings following the NOXI protocol but covering languages that are not present in the NOXI training data. Second, we collected novel engagement annotations on the MPIIGroupInteraction dataset which consists of group discussions between three to four people. In this way, MultiMediate’24 evaluates the ability of approaches to generalise across factors such as language and cultural background, group size, task, and screen-mediated vs. face-to-face interaction. This paper describes the MultiMediate’24 challenge and presents baseline results. In addition, we discuss selected challenge solutions.

Paper: mueller24_arxiv.pdf

Paper Access: http://arxiv.org/abs/2408.16625

@techreport{mueller24_arxiv, title = {MultiMediate'24: Multi-Domain Engagement Estimation}, author = {M{\"{u}}ller, Philipp and Balazia, Michal and Baur, Tobias and Dietz, Michael and Heimerl, Alexander and Penzkofer, Anna and Schiller, Dominik and Brémond, François and Alexandersson, Jan and André, Elisabeth and Bulling, Andreas}, year = {2024}, pages = {1--6}, doi = {10.48550/arXiv.2408.16625}, url = {http://arxiv.org/abs/2408.16625} }

-

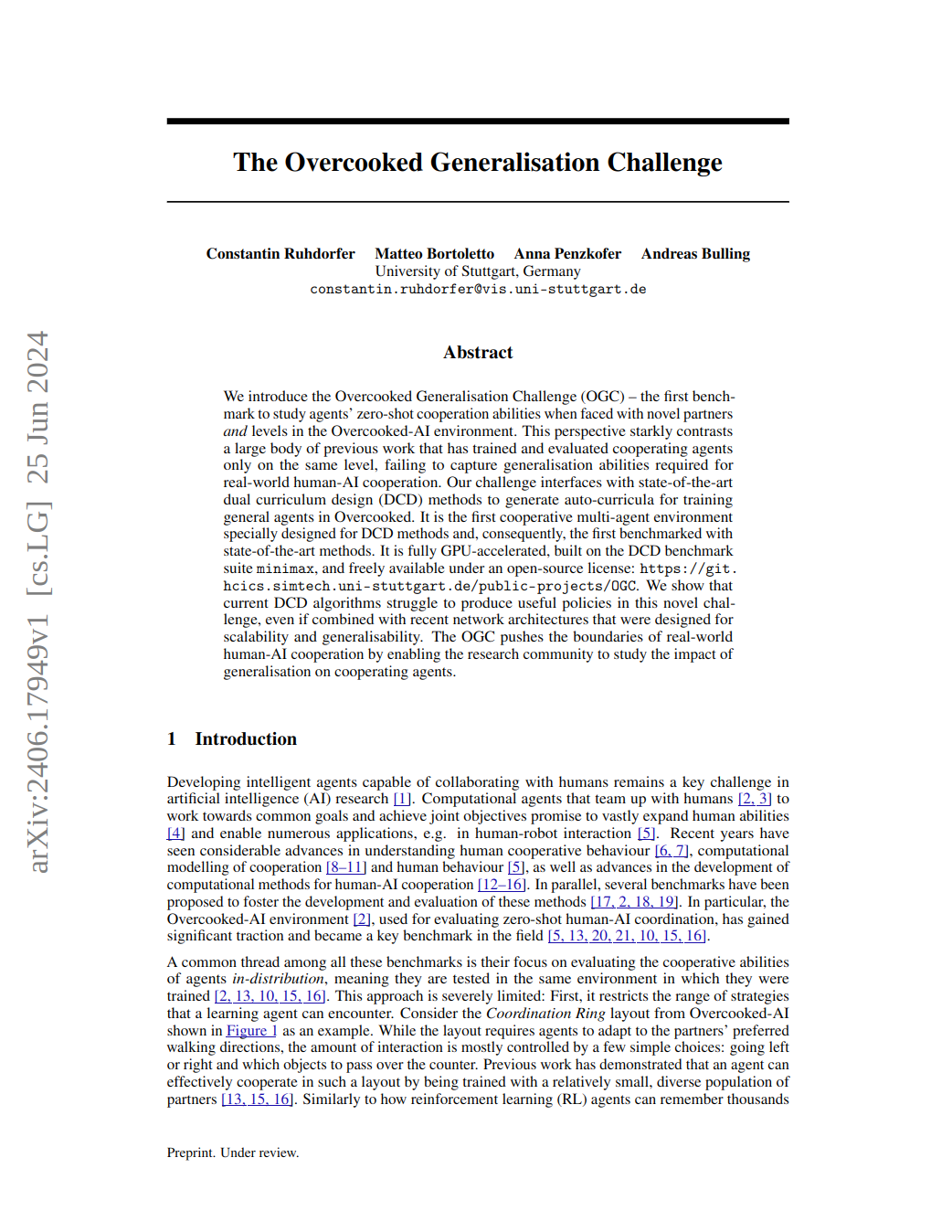

The Overcooked Generalisation Challenge

Constantin Ruhdorfer, Matteo Bortoletto, Anna Penzkofer, Andreas Bulling

arXiv:2406.17949, pp. 1–25, 2024.