How far are we from quantifying visual attention in mobile HCI?

Mihai Bâce, Sander Staal, Andreas Bulling

IEEE Pervasive Computing, 19(2), pp. 46-55, 2020.

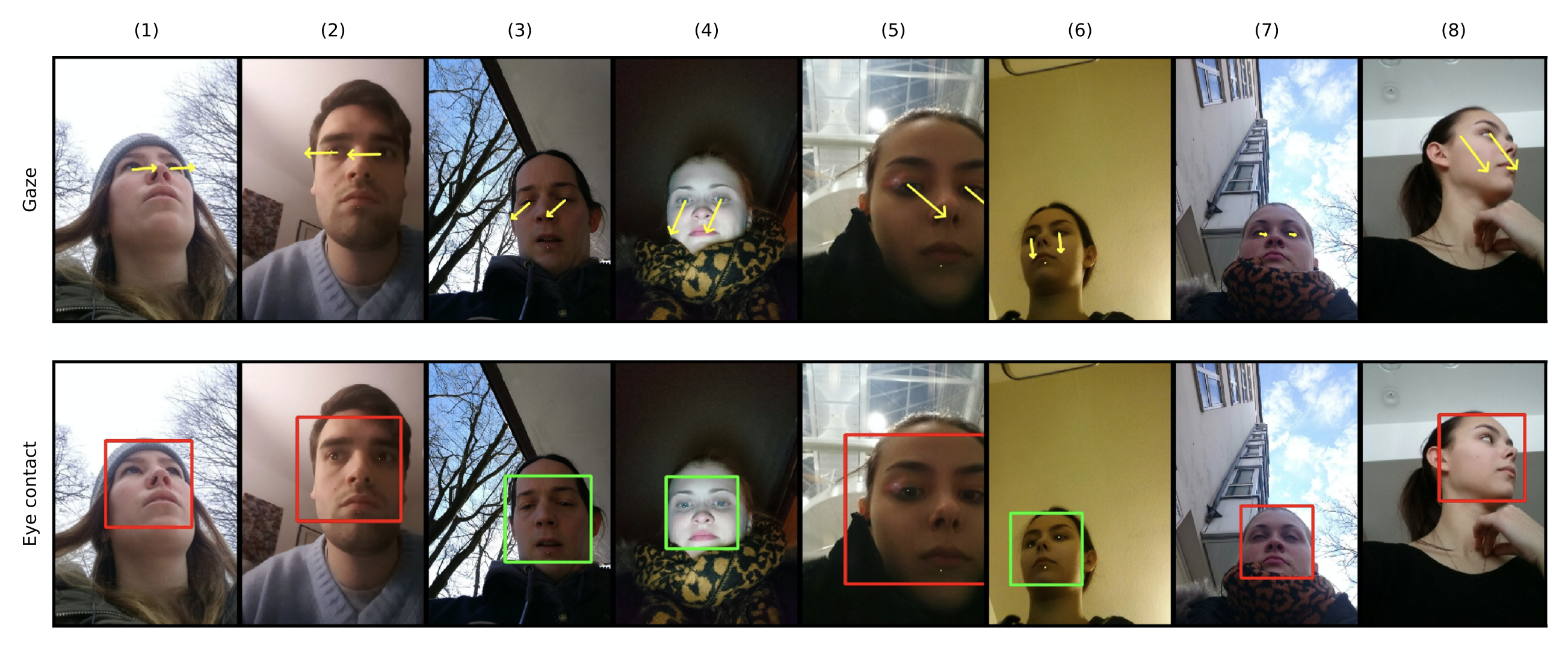

Sample images with the corresponding gaze estimates and the predicted eye contact labels (green: eye contact; red: no eye contact). Although eye contact detection is a computationally simpler task, the state-of-the-art method proposed by Zhang et al. builds on an appearance-based gaze estimator, so its performance depends on the accuracy of the underlying gaze estimates. For example, for certain head poses (column 6), incorrect gaze estimates lead to an incorrect eye contact label.
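The dependency described in the caption can be illustrated with a deliberately simplified rule: declare eye contact when the estimated gaze direction lies within a fixed angle of the direction toward the camera. This is not the method of Zhang et al., which learns the decision from data. The function names, the (pitch, yaw) convention in which (0, 0) points straight at the camera, and the 10° threshold are all hypothetical choices made for illustration. The sketch shows only that any error in the gaze estimate propagates directly to the label.

```python
import math


def angle_between_deg(gaze, target):
    """Angle in degrees between two (pitch, yaw) directions given in radians."""

    def to_vec(pitch, yaw):
        # Hypothetical convention: (0, 0) maps to the unit vector (0, 0, -1).
        return (-math.cos(pitch) * math.sin(yaw),
                -math.sin(pitch),
                -math.cos(pitch) * math.cos(yaw))

    a, b = to_vec(*gaze), to_vec(*target)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def eye_contact(gaze, camera_dir=(0.0, 0.0), threshold_deg=10.0):
    """Simplified stand-in: eye contact if gaze is within threshold_deg of the camera."""
    return angle_between_deg(gaze, camera_dir) <= threshold_deg


true_gaze = (0.0, math.radians(5))        # person actually looks 5 degrees off-camera
estimated_gaze = (0.0, math.radians(20))  # estimator adds a 15 degree error
print(eye_contact(true_gaze), eye_contact(estimated_gaze))
```

With the true gaze the rule reports eye contact. The 15° estimation error pushes the angle past the threshold and flips the label. This mirrors the failure case in column 6, although a learned classifier will not fail in exactly this way.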

Abstract

With an ever-increasing number of mobile devices competing for attention, quantifying when, how often, or for how long users look at their devices has emerged as a key challenge in mobile human-computer interaction. Encouraged by recent advances in automatic eye contact detection using machine learning and device-integrated cameras, we provide a fundamental investigation into the feasibility of quantifying overt visual attention during everyday mobile interactions. We discuss the main challenges and sources of error associated with sensing visual attention on mobile devices in the wild, including the impact of face and eye visibility, the importance of robust head pose estimation, and the need for accurate gaze estimation. Our analysis informs future research on this emerging topic and underlines the potential of eye contact detection for exciting new applications towards next-generation pervasive attentive user interfaces.
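The three quantities named in the abstract (when, how often, and for how long users look at a device) can be derived once an eye contact detector produces a per-frame label. The following is a minimal sketch that assumes a fixed frame rate and binary labels. The names `Glance`, `glances_from_labels` and `summarize`, and the `min_frames` filter that suppresses single-frame flicker, are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Glance:
    start_s: float     # onset of the glance in seconds ("when")
    duration_s: float  # length of the glance in seconds ("for how long")


def glances_from_labels(labels, fps, min_frames=1):
    """Group consecutive per-frame eye contact labels into glances."""
    glances = []
    start = None
    # Append a False sentinel so a run that reaches the last frame is closed.
    for i, looking in enumerate(list(labels) + [False]):
        if looking and start is None:
            start = i
        elif not looking and start is not None:
            length = i - start
            if length >= min_frames:  # drop runs shorter than min_frames
                glances.append(Glance(start / fps, length / fps))
            start = None
    return glances


def summarize(glances):
    """Number of glances ("how often") and total looking time in seconds."""
    return {"count": len(glances), "total_s": sum(g.duration_s for g in glances)}


labels = [False, True, True, True, False, False, True, True, False, True]
print(summarize(glances_from_labels(labels, fps=10, min_frames=2)))
```

Because every quantity is computed from the per-frame labels, errors in eye contact detection (for example, from poor face visibility or inaccurate gaze estimates) carry over directly into the attention metrics.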

doi: 10.1109/MPRV.2020.2967736

Paper: bace20_pcm.pdf

BibTeX

@article{bace20_pcm,
  title = {How far are we from quantifying visual attention in mobile HCI?},
  author = {B{\^a}ce, Mihai and Staal, Sander and Bulling, Andreas},
  journal = {IEEE Pervasive Computing},
  year = {2020},
  volume = {19},
  number = {2},
  doi = {10.1109/MPRV.2020.2967736},
  pages = {46--55}
}