VisRecall: Quantifying Information Visualisation Recallability via Question Answering

Yao Wang, Chuhan Jiao, Mihai Bâce, Andreas Bulling

arXiv:2112.15217, pp. 1–10, 2021.

Abstract

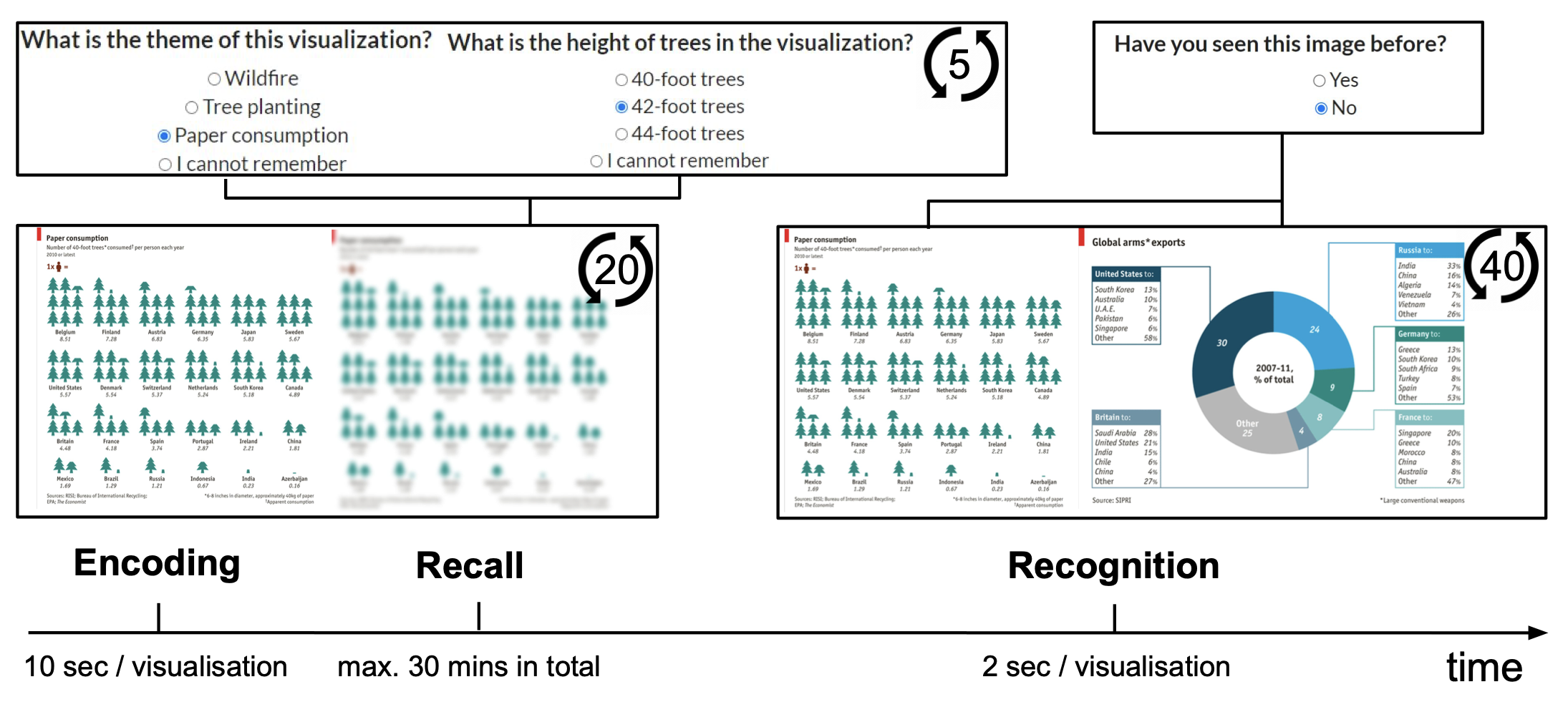

Despite its importance for assessing the effectiveness of communicating information visually, fine-grained recallability of information visualisations has not been studied quantitatively so far. In this work we propose a visual question answering (VQA) paradigm to study visualisation recallability and present VisRecall — a novel dataset consisting of 200 visualisations that are annotated with crowd-sourced human (N = 305) recallability scores obtained from 1,000 questions of five question types. Furthermore, we present the first computational method to predict recallability of different visualisation elements, such as the title or specific data values. We report detailed analyses of our method on VisRecall and demonstrate that it outperforms several baselines in overall recallability and FE-, F-, RV-, and U-question recallability. We further demonstrate one possible application of our method: recommending the visualisation type that maximises user recallability for a given data source. Taken together, our work makes fundamental contributions towards a new generation of methods to assist designers in optimising visualisations.

Links

Paper: wang21_arxiv_2.pdf

Paper Access: https://arxiv.org/abs/2112.15217

Dataset: https://darus.uni-stuttgart.de/dataset.xhtml?persistentId=doi:10.18419/darus-2826
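As a rough illustration of the VQA paradigm summarised in the abstract, the sketch below turns question-answering results into recallability scores and then picks the visualisation type with the highest score, mirroring the recommendation application. It rests on assumptions made only for illustration: a question's recallability is taken to be the fraction of participants who answered it correctly, and a visualisation's overall score is the mean over its questions. The `Answer` record, the function names, the type labels and the answers are all hypothetical and are not taken from the VisRecall dataset or the authors' code. The paper's method predicts recallability from the visualisation itself; here made-up measured answers stand in for those predictions.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Answer:
    """One hypothetical crowd-sourced answer record (illustrative layout)."""
    vis_id: str         # which visualisation was shown
    vis_type: str       # e.g. "bar" or "line" (illustrative labels)
    question_id: str    # question asked after the visualisation was hidden
    question_type: str  # e.g. "FE" or "RV"
    correct: bool       # whether the participant recalled the answer correctly


def question_recallability(answers):
    """Assumed definition: fraction of correct answers per (visualisation, question)."""
    hits = defaultdict(list)
    for a in answers:
        hits[(a.vis_id, a.question_id)].append(a.correct)
    return {key: sum(v) / len(v) for key, v in hits.items()}


def overall_recallability(answers):
    """Assumed definition: mean question recallability per visualisation."""
    per_vis = defaultdict(list)
    for (vis_id, _), score in question_recallability(answers).items():
        per_vis[vis_id].append(score)
    return {vis_id: mean(scores) for vis_id, scores in per_vis.items()}


def recommend_vis_type(answers):
    """Return the visualisation type with the highest mean overall score."""
    vis_type_of = {a.vis_id: a.vis_type for a in answers}
    per_type = defaultdict(list)
    for vis_id, score in overall_recallability(answers).items():
        per_type[vis_type_of[vis_id]].append(score)
    return max(per_type, key=lambda t: mean(per_type[t]))


# Made-up answers: the same data shown once as a bar chart and once as a line chart.
answers = [
    Answer("v1", "bar", "q1", "FE", True),
    Answer("v1", "bar", "q1", "FE", True),
    Answer("v1", "bar", "q2", "RV", False),
    Answer("v2", "line", "q1", "FE", True),
    Answer("v2", "line", "q1", "FE", False),
    Answer("v2", "line", "q2", "RV", False),
]
print(overall_recallability(answers))  # {'v1': 0.5, 'v2': 0.25}
print(recommend_vis_type(answers))     # bar
```

Averaging per question before averaging per visualisation keeps a question that happened to receive many answers from dominating the overall score; a per-question-type breakdown (for example FE versus RV) would group by `question_type` in the same way.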

BibTeX

@techreport{wang21_arxiv_2,
  title = {VisRecall: Quantifying Information Visualisation Recallability via Question Answering},
  author = {Wang, Yao and Jiao, Chuhan and B{\^a}ce, Mihai and Bulling, Andreas},
  year = {2021},
  pages = {1--10},
  url = {https://arxiv.org/abs/2112.15217}
}