Gaze+RST: Integrating Gaze and Multitouch for Remote Rotate-Scale-Translate Tasks

Jayson Turner, Jason Alexander, Andreas Bulling, Hans Gellersen

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 4179-4188, 2015.

Abstract

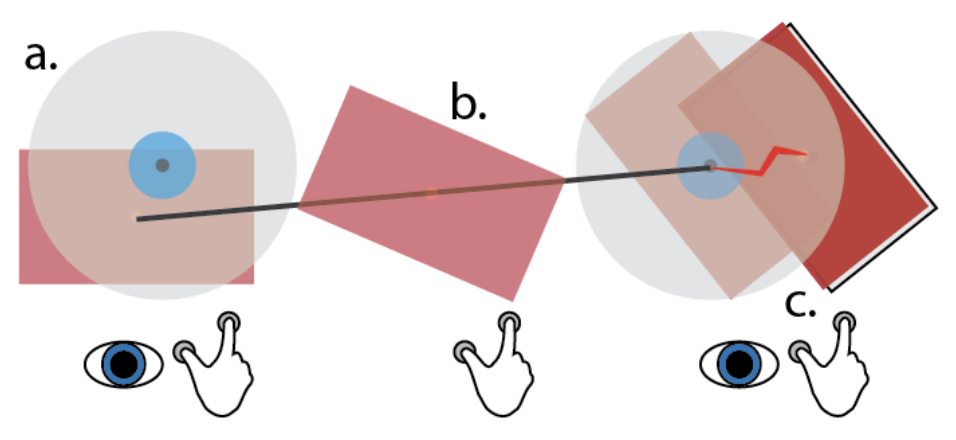

Our work investigates the use of gaze and multitouch to fluidly perform rotate-scale-translate (RST) tasks on large displays. The work specifically aims to understand if gaze can provide benefit in such a task, how task complexity affects performance, and how gaze and multitouch can be combined to create an integral input structure suited to the task of RST. We present four techniques that individually strike a different balance between gaze-based and touch-based translation while maintaining concurrent rotation and scaling operations. A 16-participant empirical evaluation revealed that three of our four techniques present viable options for this scenario, and that larger distances and rotation/scaling operations can significantly affect a gaze-based translation configuration. Furthermore, we uncover new insights regarding multimodal integrality, finding that gaze and touch can be combined into configurations that pertain to integral or separable input structures.

Links

Paper: turner15_chi.pdf

BibTeX

@inproceedings{turner15_chi,
  author = {Turner, Jayson and Alexander, Jason and Bulling, Andreas and Gellersen, Hans},
  title = {Gaze+RST: Integrating Gaze and Multitouch for Remote Rotate-Scale-Translate Tasks},
  booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)},
  year = {2015},
  pages = {4179-4188},
  doi = {10.1145/2702123.2702355}
}