Lei Shi

Institute for Visualisation and Interactive Systems, University of Stuttgart

Pfaffenwaldring 5a, 70569 Stuttgart, Germany

University of Stuttgart, SimTech Building

Biography

Lei Shi has been a postdoctoral researcher in the Perceptual User Interfaces group since March 2022. He received his PhD from the University of Antwerp, Belgium, in the InViLab research group. He received his master's degree (cum laude) in Mechatronics from Tallinn University of Technology, Estonia, and his bachelor's degree in Electrical Engineering and Automation from Shanghai Maritime University, China. His research interests include eye tracking, intention prediction, and graph neural networks.

Teaching

- 2024

- Machine Perception and Learning (Teaching Assistant) (winter), Master

- 2023

- Machine Perception and Learning (Teaching Assistant) (winter), Master

- 2022

- Machine Perception and Learning (Tutor) (summer), Master

- Machine Perception and Learning (Teaching Assistant) (winter), Master

Supervised Theses

- 2024

- Gaze-based Transformer for Improving Action Recognition in Egocentric Videos (M.Sc.) Mengze Lu

Publications

-

ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos

Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025.

-

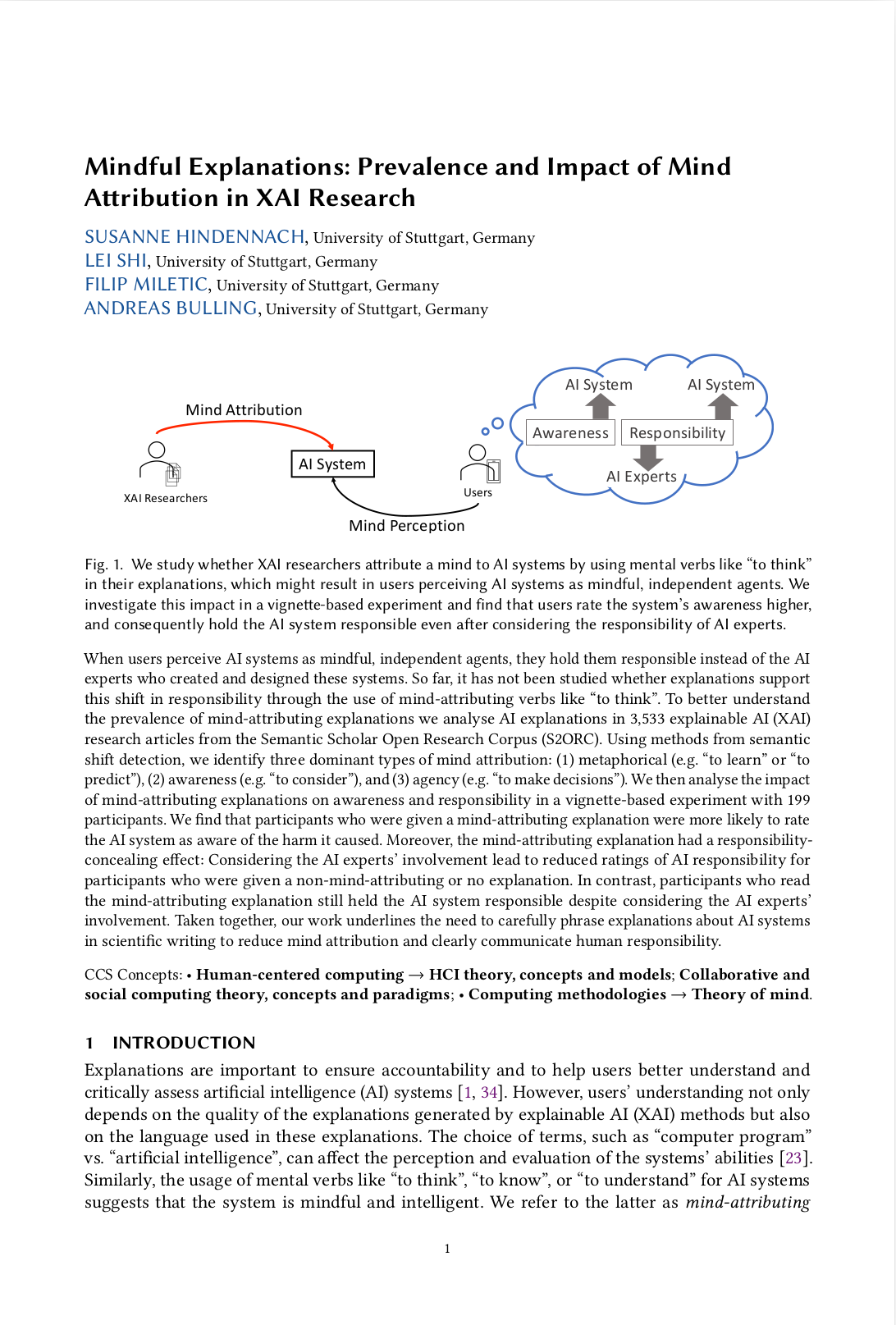

Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (CSCW), pp. 1–42, 2024.

-

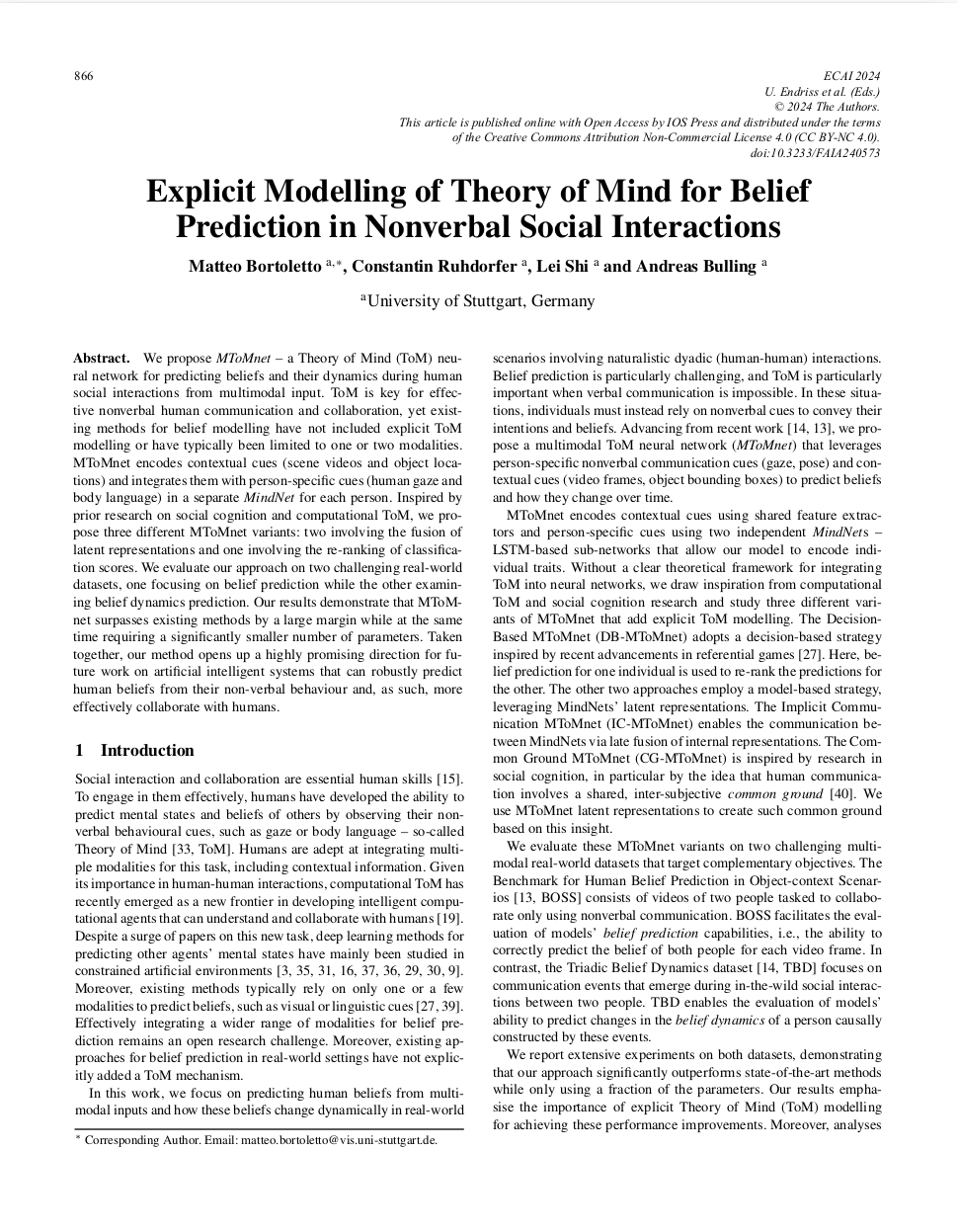

Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions

Proc. 27th European Conference on Artificial Intelligence (ECAI), pp. 866–873, 2024.

-

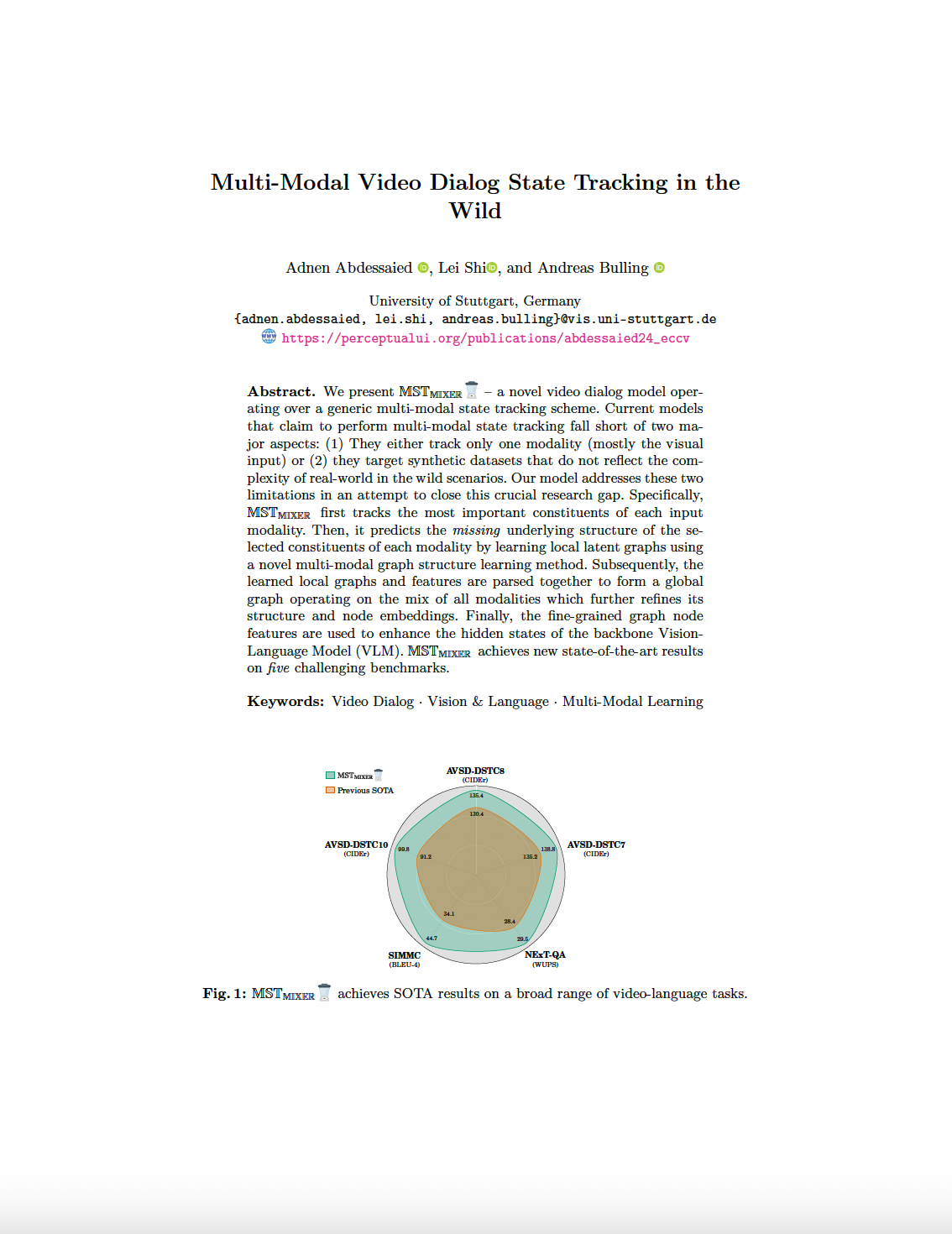

Multi-Modal Video Dialog State Tracking in the Wild

Proc. 18th European Conference on Computer Vision (ECCV), pp. 1–25, 2024.

-

Benchmarking Mental State Representations in Language Models

Proc. ICML 2024 Workshop on Mechanistic Interpretability, pp. 1–21, 2024.

-

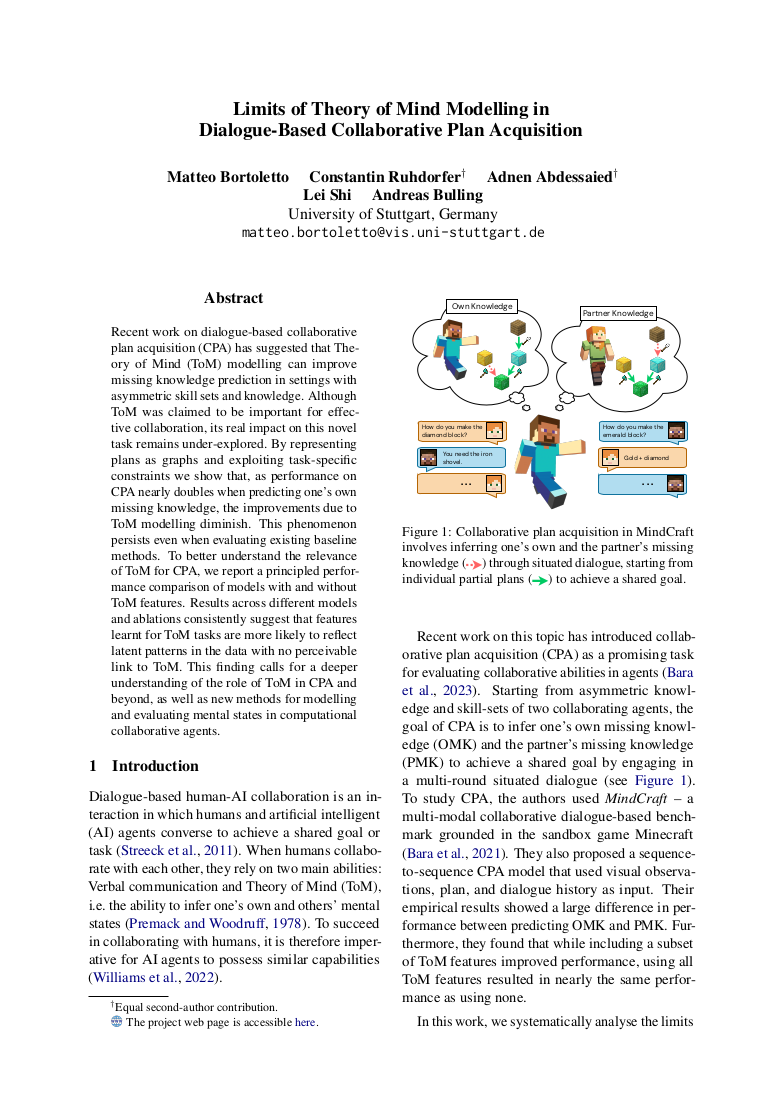

Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition

Proc. 62nd Annual Meeting of the Association for Computational Linguistics (ACL), pp. 1–16, 2024.

-

VSA4VQA: Scaling A Vector Symbolic Architecture To Visual Question Answering on Natural Images

Proc. 46th Annual Meeting of the Cognitive Science Society (CogSci), 2024.

-

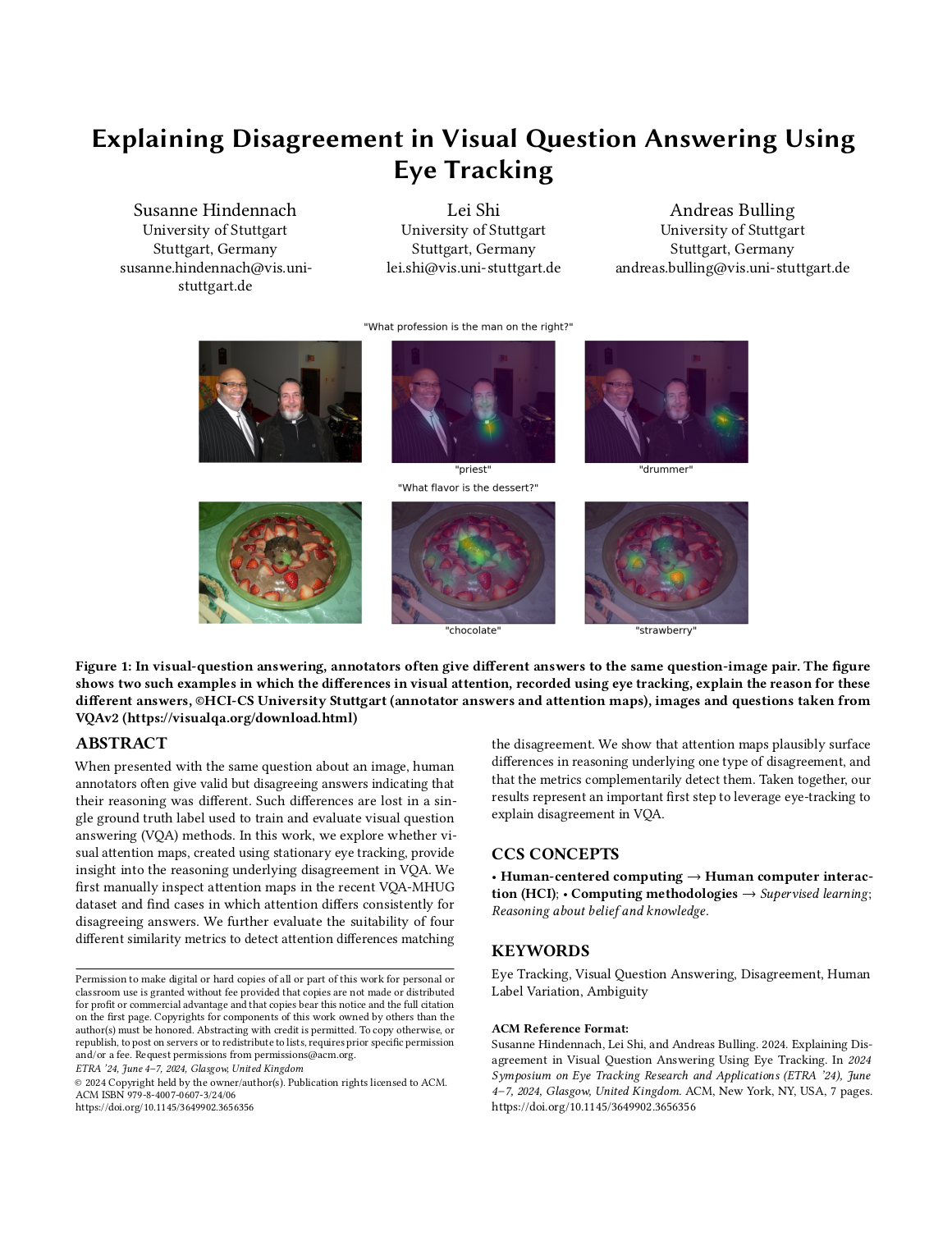

Explaining Disagreement in Visual Question Answering Using Eye Tracking

Proc. International Workshop on Pervasive Eye Tracking and Mobile Gaze-Based Interaction (PETMEI), pp. 1–7, 2024.

-

Inferring Human Intentions from Predicted Action Probabilities

Proc. Workshop on Theory of Mind in Human-AI Interaction at CHI 2024, pp. 1–7, 2024.

-

Neural Reasoning About Agents’ Goals, Preferences, and Actions

Proc. 38th AAAI Conference on Artificial Intelligence (AAAI), pp. 456–464, 2024.

-

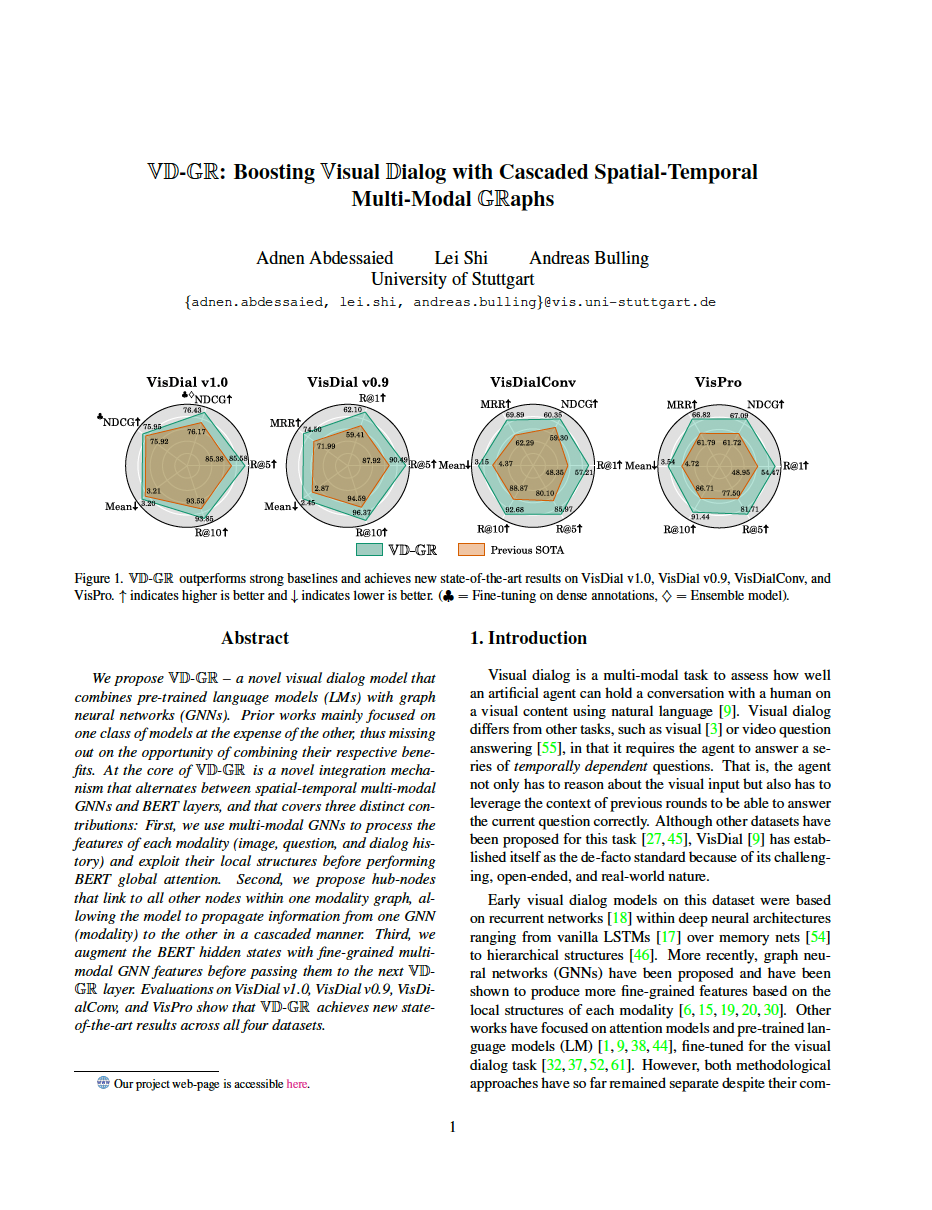

VD-GR: Boosting Visual Dialog with Cascaded Spatial-Temporal Multi-Modal GRaphs

Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pp. 5805–5814, 2024.

-

ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos

arXiv:2403.08591, pp. 1–6, 2024.

-

Improving Neural Saliency Prediction with a Cognitive Model of Human Visual Attention

Proc. 45th Annual Meeting of the Cognitive Science Society (CogSci), pp. 3639–3646, 2023.

-

Exploring Natural Language Processing Methods for Interactive Behaviour Modelling

Proc. IFIP TC13 Conference on Human-Computer Interaction (INTERACT), pp. 1–22, 2023.

-

Evaluating Dropout Placements in Bayesian Regression Resnet

Journal of Artificial Intelligence and Soft Computing Research, 12 (1), pp. 61–73, 2022.

-

Gaze Gesture Recognition by Graph Convolutional Networks

Frontiers in Robotics and AI, 8, 2021.

-

GazeEMD: Detecting Visual Intention in Gaze-Based Human-Robot Interaction

Robotics, 10 (2), pp. 1–18, 2021.

-

A Bayesian Deep Neural Network for Safe Visual Servoing in Human–Robot Interaction

Frontiers in Robotics and AI, 8, pp. 1–13, 2021.

-

Visual Intention Classification by Deep Learning for Gaze-based Human-Robot Interaction

IFAC-PapersOnLine, 53 (5), pp. 750–755, 2020.

-

A Deep Regression Model for Safety Control in Visual Servoing Applications

Proc. IEEE International Conference on Robotic Computing (IRC), pp. 360–366, 2020.

-

A Performance Analysis of Invariant Feature Descriptors in Eye Tracking based Human Robot Collaboration

Proc. International Conference on Control, Automation and Robotics (ICCAR), pp. 256–260, 2019.

-

Application of Visual Servoing and Eye Tracking Glass in Human Robot Interaction: A case study

Proc. International Conference on System Theory, Control and Computing (ICSTCC), pp. 515–520, 2019.

-

Automatic Tuning Methodology of Visual Servoing System Using Predictive Approach

Proc. IEEE International Conference on Control and Automation (ICCA), pp. 776–781, 2019.