Adversarial Attacks on Classifiers for Eye-based User Modelling

Inken Hagestedt, Michael Backes, Andreas Bulling

Adj. Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1-3, 2020.

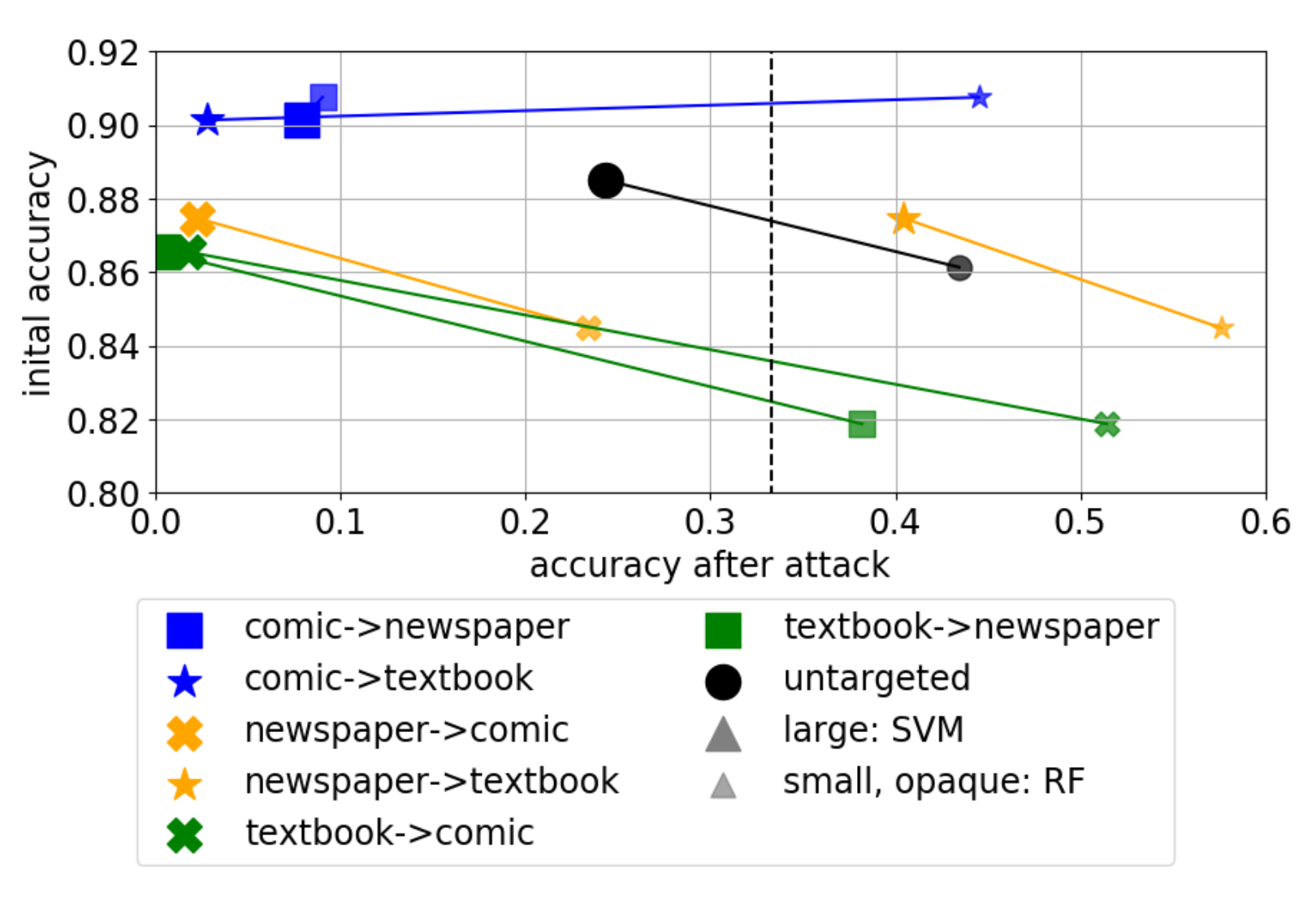

Accuracies after fast gradient sign method (FGSM) attacks on a support vector machine (SVM; larger markers) and after transferring the same adversarial examples to a random forest (RF; smaller markers). Colors and marker shapes denote the document type under attack. The dashed black line marks chance level.
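To make the attacks in the figure concrete, here is a minimal sketch of FGSM, both untargeted and targeted to a specific class, against a toy linear multiclass classifier. FGSM perturbs an input by a step of size ε in the direction of the sign of the loss gradient: x' = x + ε·sign(∇ₓJ(x, y)) to move away from the true class y, or x' = x − ε·sign(∇ₓJ(x, t)) to move toward a target class t. Everything here is an assumption for illustration, not the authors' setup: the weights, the two "gaze features", the three "document types", and the use of a softmax cross-entropy loss over linear scores as a stand-in for the SVM's own loss. The function names `predict`, `ce_grad_x` and `fgsm` are hypothetical.

```python
import numpy as np


def softmax(z):
    # Numerically stable softmax over class scores.
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def predict(W, b, x):
    # Linear multiclass decision: pick the class with the highest score W x + b.
    return int(np.argmax(W @ x + b))


def ce_grad_x(W, b, x, label):
    # Gradient of the softmax cross-entropy loss w.r.t. the input x
    # for linear scores W x + b: W^T (p - onehot(label)).
    p = softmax(W @ x + b)
    p[label] -= 1.0
    return W.T @ p


def fgsm(W, b, x, y, eps, target=None):
    if target is None:
        # Untargeted: step so as to increase the loss of the true class y.
        return x + eps * np.sign(ce_grad_x(W, b, x, y))
    # Targeted: step so as to decrease the loss of the target class
    # (y is unused here and kept only for a uniform call signature).
    return x - eps * np.sign(ce_grad_x(W, b, x, target))


# Toy example: 3 hypothetical document types, 2 hypothetical gaze features.
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
b = np.zeros(3)
x = np.array([0.5, 0.2])

x_untargeted = fgsm(W, b, x, y=0, eps=0.4)
x_targeted = fgsm(W, b, x, y=0, eps=0.6, target=2)
print(predict(W, b, x), predict(W, b, x_untargeted), predict(W, b, x_targeted))  # 0 1 2
```

Each feature moves by at most ε, yet the prediction flips: the untargeted step lands on another class, while the targeted step needs a larger ε to reach the chosen class 2. A transfer attack, as in the smaller markers of the figure, would feed the same perturbed inputs to a different model (for example a random forest) without using that model's gradients. Real gaze features would typically also need to be clipped to their valid range after the step.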

Abstract

An ever-growing body of work has demonstrated the rich information content available in eye movements for user modelling, e.g. for predicting users’ activities, cognitive processes, or even personality traits. We show that state-of-the-art classifiers for eye-based user modelling are highly vulnerable to adversarial examples: small artificial perturbations in gaze input that can dramatically change a classifier’s predictions. Using eye-based document type recognition as an example task, we study how successful adversarial attacks are, both untargeted and targeted to a specific class.

Links

Paper: hagestedt20_etra.pdf

BibTeX

@inproceedings{hagestedt20_etra,
  title = {Adversarial Attacks on Classifiers for Eye-based User Modelling},
  author = {Hagestedt, Inken and Backes, Michael and Bulling, Andreas},
  year = {2020},
  pages = {1-3},
  doi = {10.1145/3379157.3390511},
  booktitle = {Adj. Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)}
}