Learning an appearance-based gaze estimator from one million synthesised images

Erroll Wood, Tadas Baltrušaitis, Louis-Philippe Morency, Peter Robinson, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 131–138, 2016.

Emerging Investigator Award

Abstract

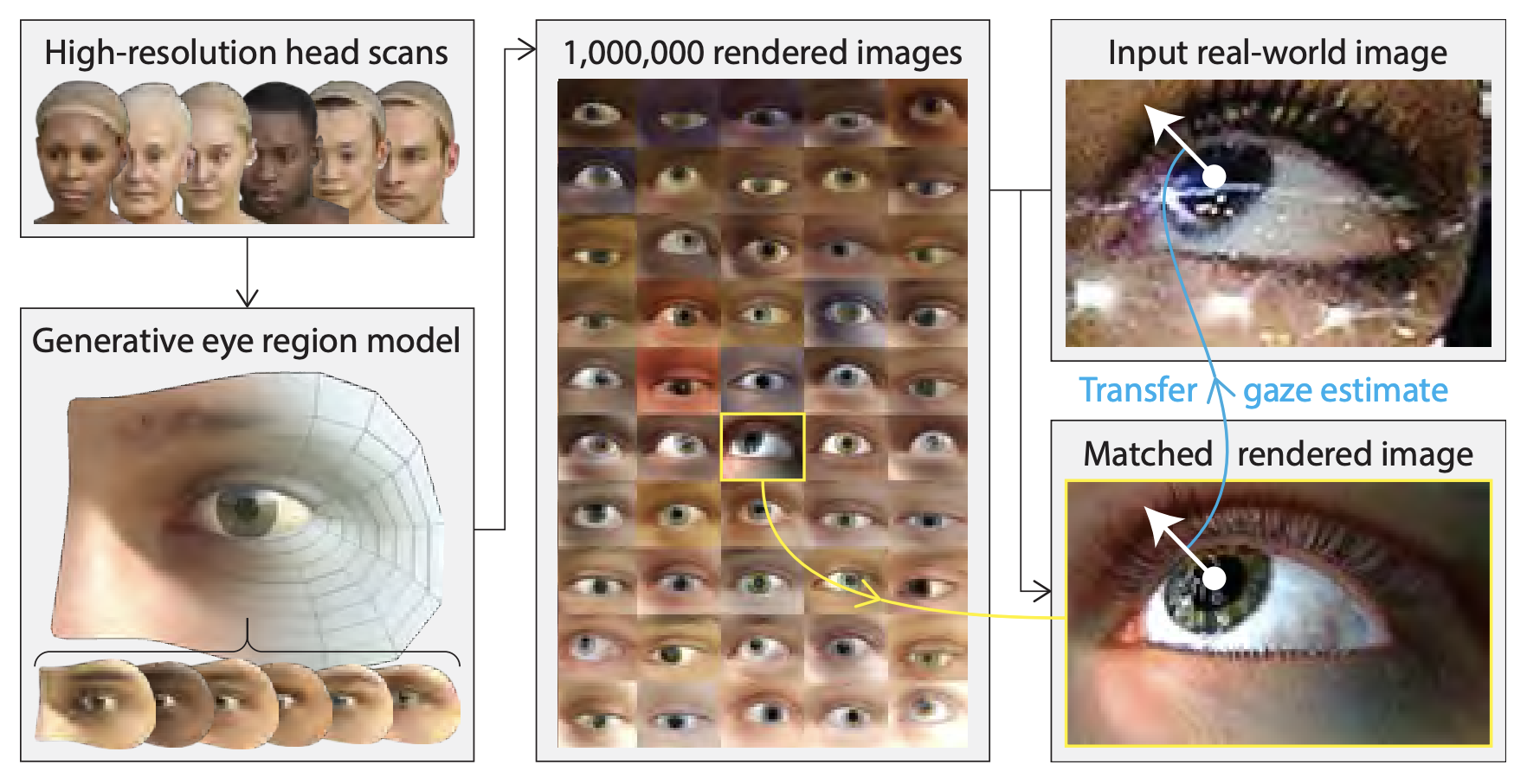

Learning-based methods for appearance-based gaze estimation achieve state-of-the-art performance in challenging real-world settings but require large amounts of labelled training data. Learning-by-synthesis was proposed as a promising solution to this problem but current methods are limited with respect to speed, the appearance variability as well as the head pose and gaze angle distribution they can synthesize. We present UnityEyes, a novel method to rapidly synthesize large amounts of variable eye region images as training data. Our method combines a novel generative 3D model of the human eye region with a real-time rendering framework. The model is based on high-resolution 3D face scans and uses real-time approximations for complex eyeball materials and structures as well as novel anatomically inspired procedural geometry methods for eyelid animation. We show that these synthesized images can be used to estimate gaze in difficult in-the-wild scenarios, even for extreme gaze angles or in cases in which the pupil is fully occluded. We also demonstrate competitive gaze estimation results on a benchmark in-the-wild dataset, despite only using a light-weight nearest-neighbor algorithm. We are making our UnityEyes synthesis framework freely available online for the benefit of the research community.

Links

Paper: wood16_etra.pdf

BibTeX

@inproceedings{wood16_etra,
  author = {Wood, Erroll and Baltru{\v{s}}aitis, Tadas and Morency, Louis-Philippe and Robinson, Peter and Bulling, Andreas},
  title = {Learning an appearance-based gaze estimator from one million synthesised images},
  booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)},
  year = {2016},
  pages = {131--138},
  doi = {10.1145/2857491.2857492}
}