TextPursuits: Using Text for Pursuits-Based Interaction and Calibration on Public Displays

Mohamed Khamis, Ozan Saltuk, Alina Hang, Katharina Stolz, Andreas Bulling, Florian Alt

Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 274-285, 2016.

Abstract

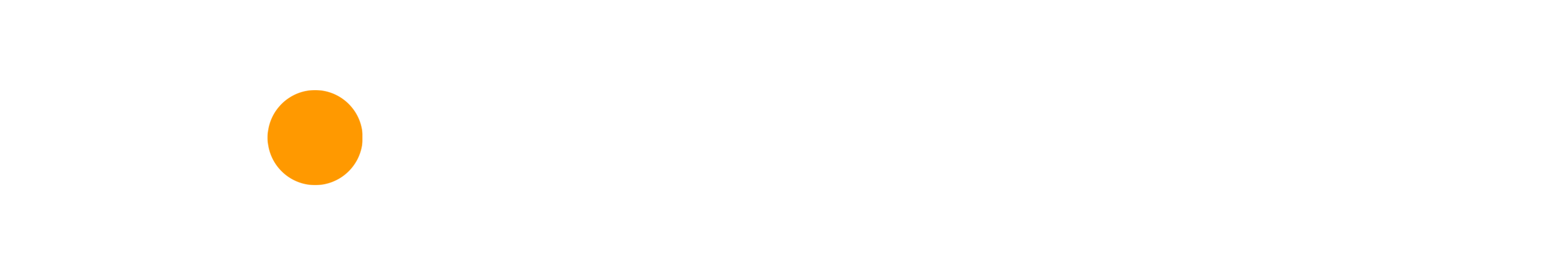

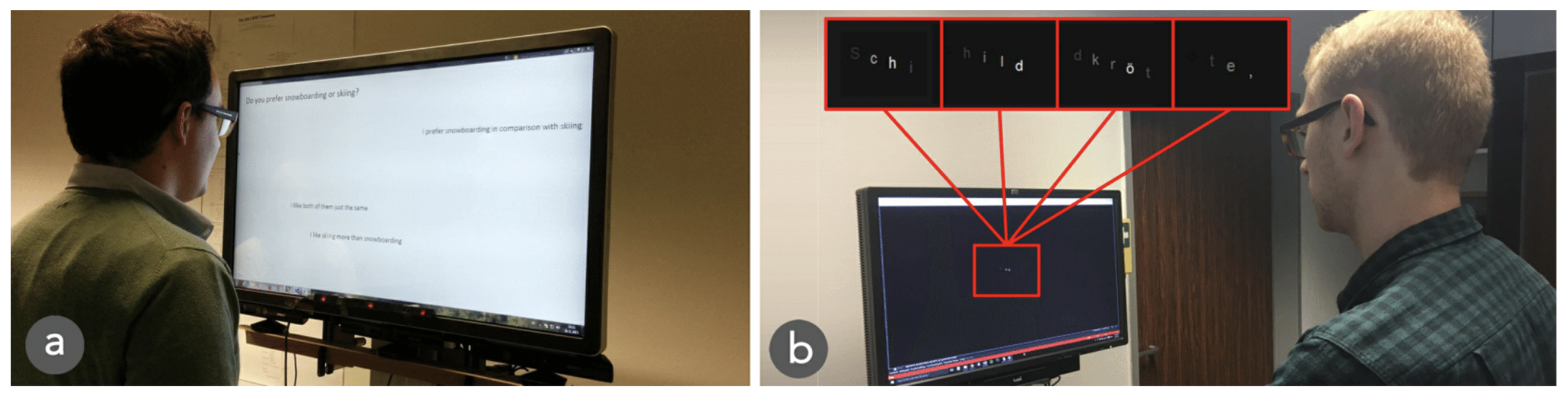

Pursuits, a technique that correlates users’ eye movements with moving on-screen targets, was recently introduced for calibration-free interaction with public displays. While prior work used abstract objects or dots as targets, we explore the use of Pursuits with text (read-and-pursue). Given that much of the content on public displays includes text, designers could greatly benefit from users being able to spontaneously interact and implicitly calibrate an eye tracker while simply reading text on a display. At the same time, using Pursuits with textual content is challenging given that the eye movements performed while reading interfere with the pursuit movements. We present two systems, EyeVote and Read2Calibrate, that enable spontaneous gaze interaction and implicit calibration by reading text. Results from two user studies (N=37) show that Pursuits with text is feasible and can achieve accuracy similar to that of non-text-based pursuit approaches. While calibration is less accurate, it integrates smoothly with reading and makes it possible to identify areas of the display the user is looking at.

Links

Paper: khamis16_ubicomp.pdf

BibTeX

@inproceedings{khamis16_ubicomp,
  author = {Khamis, Mohamed and Saltuk, Ozan and Hang, Alina and Stolz, Katharina and Bulling, Andreas and Alt, Florian},
  booktitle = {Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp)},
  title = {TextPursuits: Using Text for Pursuits-Based Interaction and Calibration on Public Displays},
  year = {2016},
  doi = {10.1145/2971648.2971679},
  pages = {274-285}
}