Realising the vision and exploiting the full potential of collaborative artificial intelligence requires significant advances on four primary research challenges: (1) computational sensing and modelling of everyday verbal and non-verbal human behaviour; (2) integrating these behaviour models with data-driven and theoretical models of human cognition and perception; (3) analysing, evaluating, and facilitating the fundamental mechanisms of effective and natural human-AI collaboration; and (4) integrating social and ethical aspects into all method development. Successfully addressing these challenges requires an interdisciplinary approach and methodological advances in multimodal machine learning, computational cognitive modelling, computer vision, and human-machine interaction.

Below is a selection of research projects through which our group has been working towards addressing these challenges. A full list of publications is available here.

Human Behaviour Sensing and Modelling

Verbal and non-verbal behaviour is the foundation of the human ability to collaborate effectively. It is through our behaviour that we successfully perform tasks with different collaboration partners, for diverse purposes, and in different everyday contexts. Alongside the rich information content available in human language, non-verbal behaviour involving body language, facial expressions, or gaze is an essential, complementary communication channel for seamless coordination, negotiation, and social signalling. It is therefore of utmost importance for collaborative artificial intelligence systems to have similar abilities, i.e., to be able to sense and make sense of human behaviour.

-

HOIMotion: Forecasting Human Motion During Human-Object Interactions Using Egocentric 3D Object Bounding Boxes

IEEE Transactions on Visualization and Computer Graphics (TVCG), pp. 1–11, 2024.

-

Mouse2Vec: Learning Reusable Semantic Representations of Mouse Behaviour

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–17, 2024.

-

Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention

Advances in Neural Information Processing Systems (NeurIPS), pp. 1–15, 2020.

-

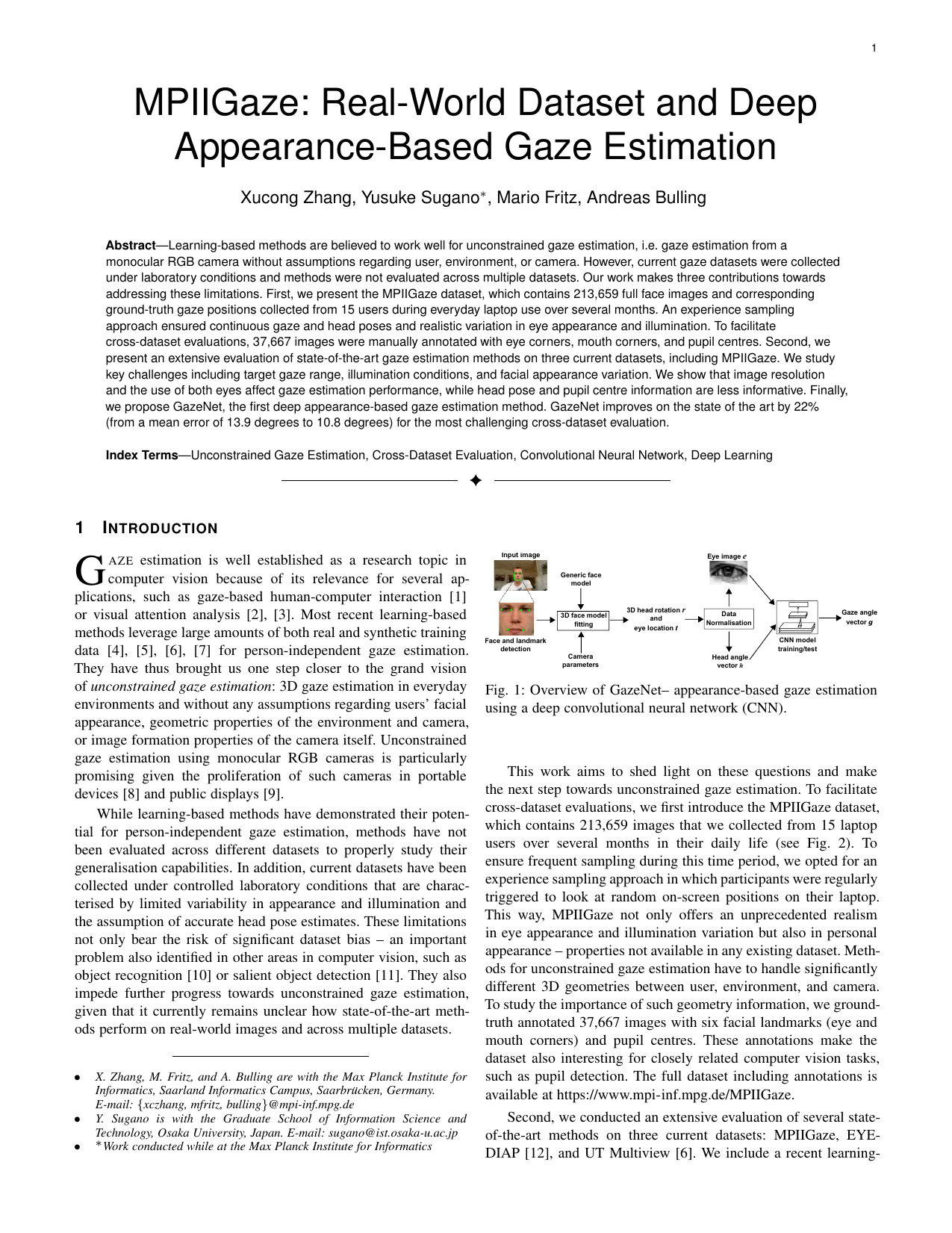

MPIIGaze: Real-World Dataset and Deep Appearance-Based Gaze Estimation

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 41 (1), pp. 162–175, 2019.

-

Eye Movement Analysis for Activity Recognition Using Electrooculography

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 33 (4), pp. 741–753, 2011.

Computational Cognitive Modelling

Successful collaboration between humans is deeply grounded in our ability to form and maintain mental models of our interaction partners, and to robustly predict their goals, intentions, and beliefs (also known as Theory of Mind). We further rely on our ability to predict what others are attending to, and what they are likely to know or remember. These abilities not only allow us to anticipate others’ behaviour, but also to behave pro-actively ourselves, greatly improving the robustness, efficiency, and seamlessness of our interactions with others. Despite their importance, however, research on instilling similar cognitive modelling capabilities in machines is still in its infancy.

-

Neural Reasoning About Agents’ Goals, Preferences, and Actions

Proc. 38th AAAI Conference on Artificial Intelligence (AAAI), pp. 456–464, 2024.

-

VSA4VQA: Scaling A Vector Symbolic Architecture To Visual Question Answering on Natural Images

Proc. 46th Annual Meeting of the Cognitive Science Society (CogSci), 2024.

-

Scanpath Prediction on Information Visualisations

IEEE Transactions on Visualization and Computer Graphics (TVCG), 30 (7), pp. 3902–3914, 2023.

-

Improving Neural Saliency Prediction with a Cognitive Model of Human Visual Attention

Proc. 45th Annual Meeting of the Cognitive Science Society (CogSci), pp. 3639–3646, 2023.

-

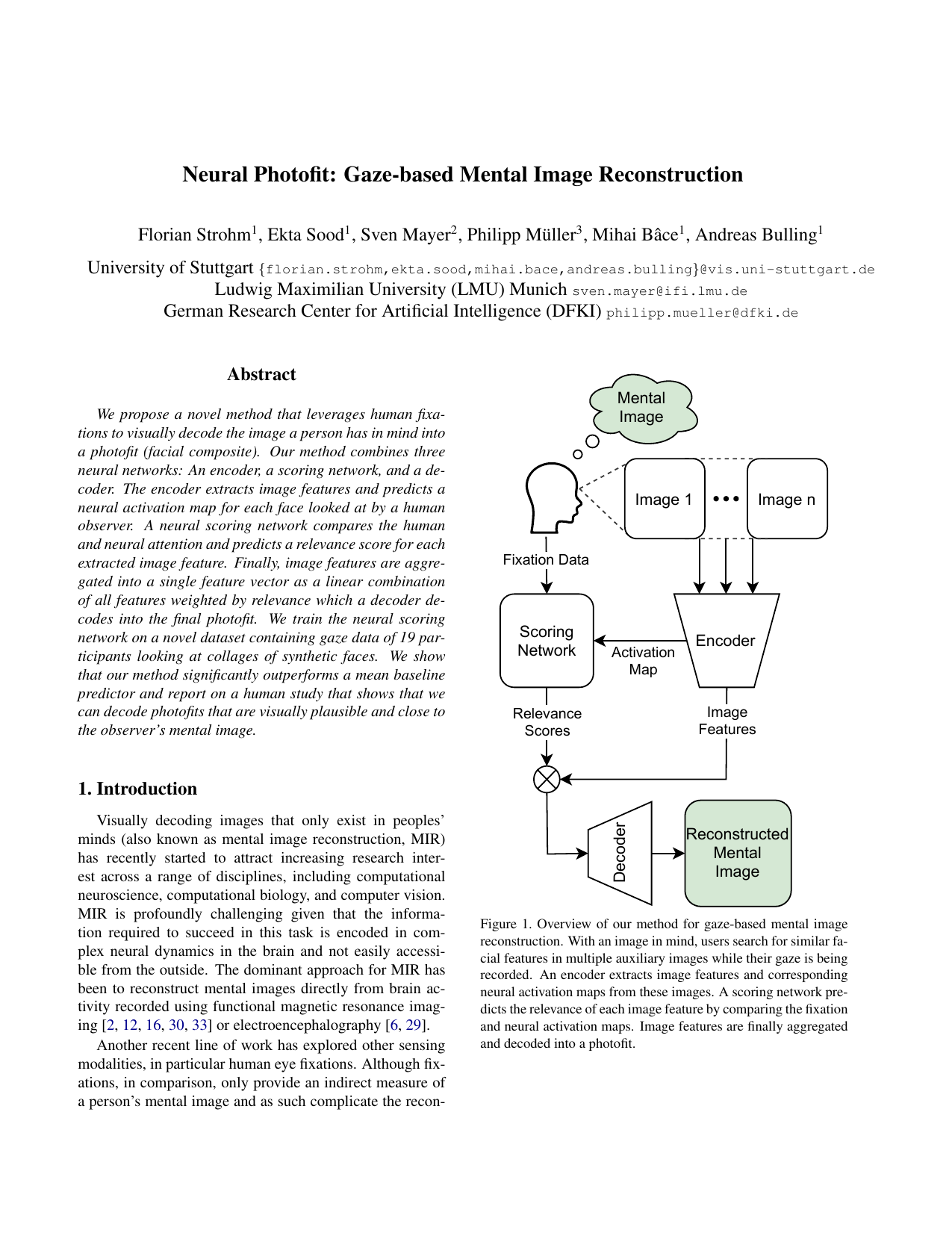

Neural Photofit: Gaze-based Mental Image Reconstruction

Proc. IEEE International Conference on Computer Vision (ICCV), pp. 245–254, 2021.

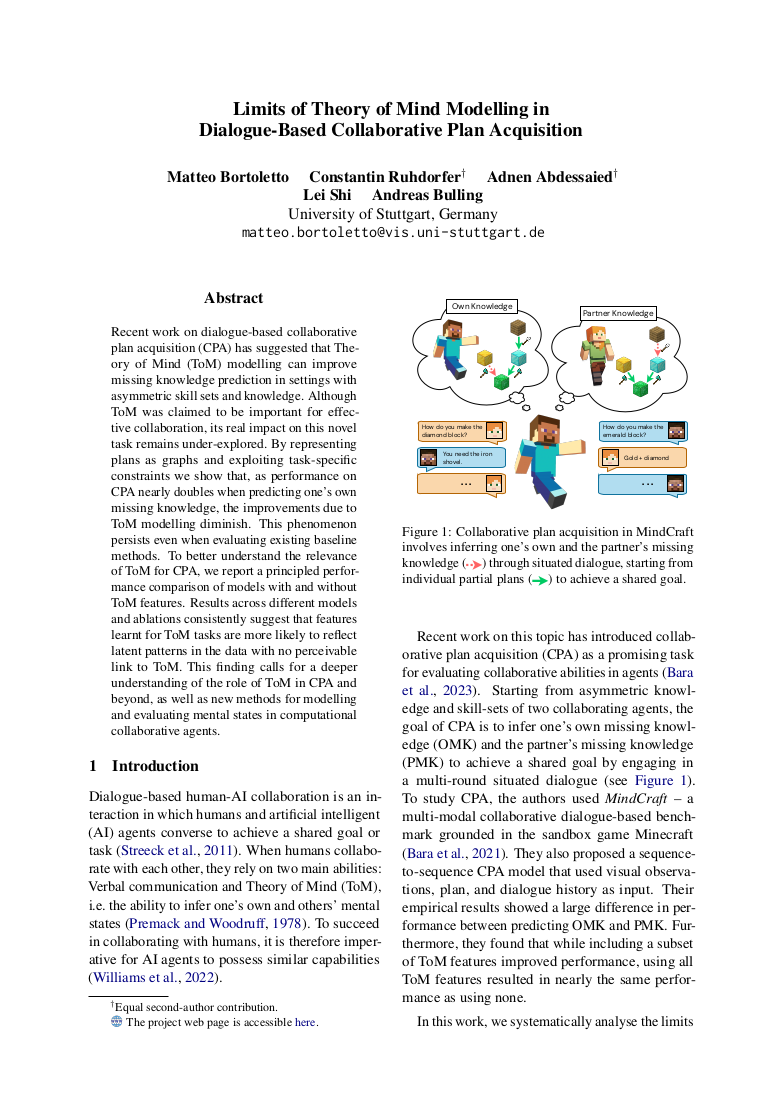

Mechanisms of Human-AI Collaboration

Collaboration is a complex, iterative process in which two or more interaction partners engage in a multi-round interactive dialogue, aiming to achieve a shared goal, and in which the outcomes typically go beyond what each partner could achieve on their own. Enabling machines to successfully participate in such interactions as equal partners requires understanding and computationally replicating the mechanisms of successful collaboration. This involves, for example, keeping track of the dialogue state by integrating multimodal information, or the ability to adapt and generalise to arbitrary interaction partners.

-

Limits of Theory of Mind Modelling in Dialogue-Based Collaborative Plan Acquisition

Proc. 62nd Annual Meeting of the Association for Computational Linguistics (ACL), pp. 1–16, 2024.

-

Explicit Modelling of Theory of Mind for Belief Prediction in Nonverbal Social Interactions

Proc. 27th European Conference on Artificial Intelligence (ECAI), pp. 866–873, 2024.

-

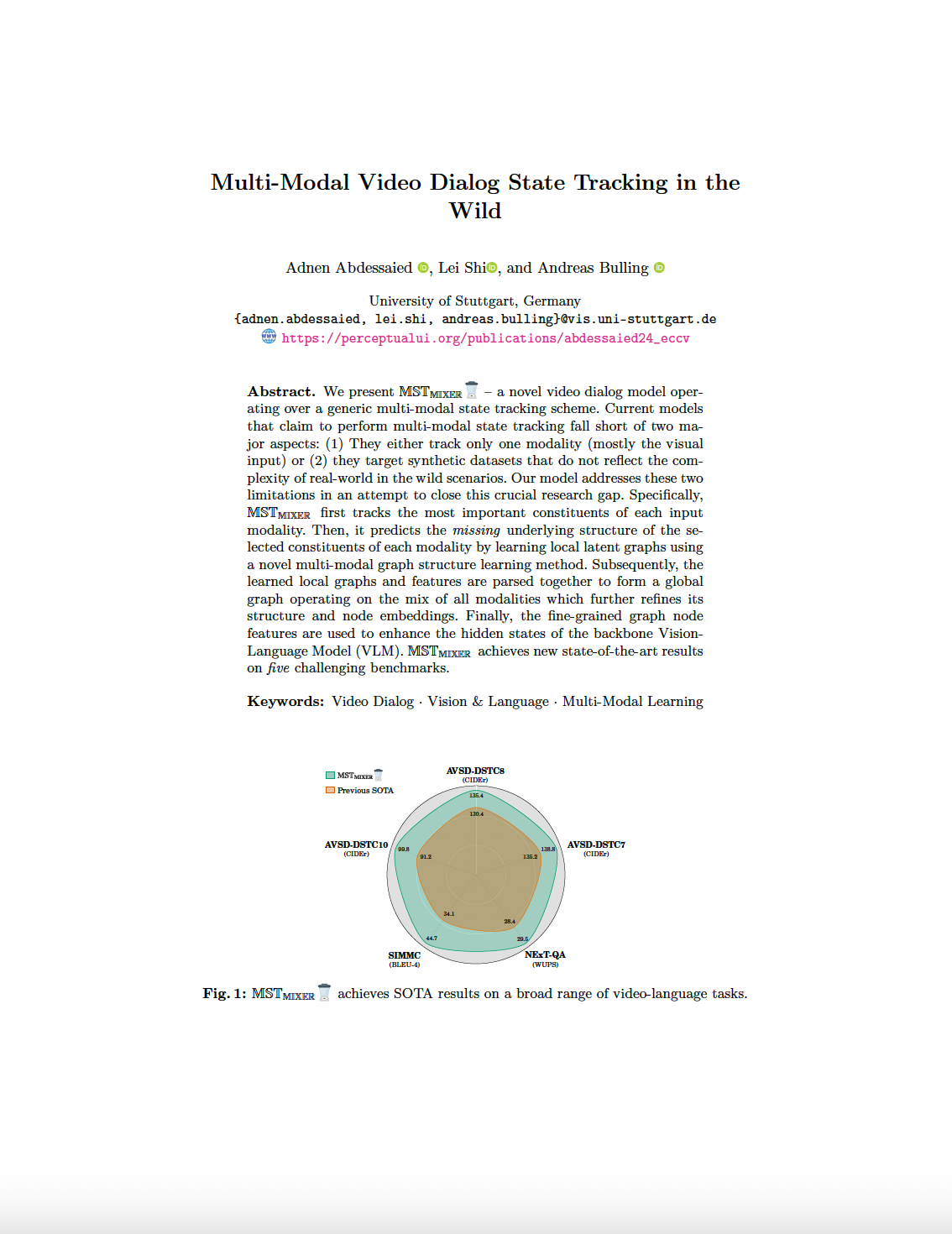

Multi-Modal Video Dialog State Tracking in the Wild

Proc. 18th European Conference on Computer Vision (ECCV), pp. 1–25, 2024.

-

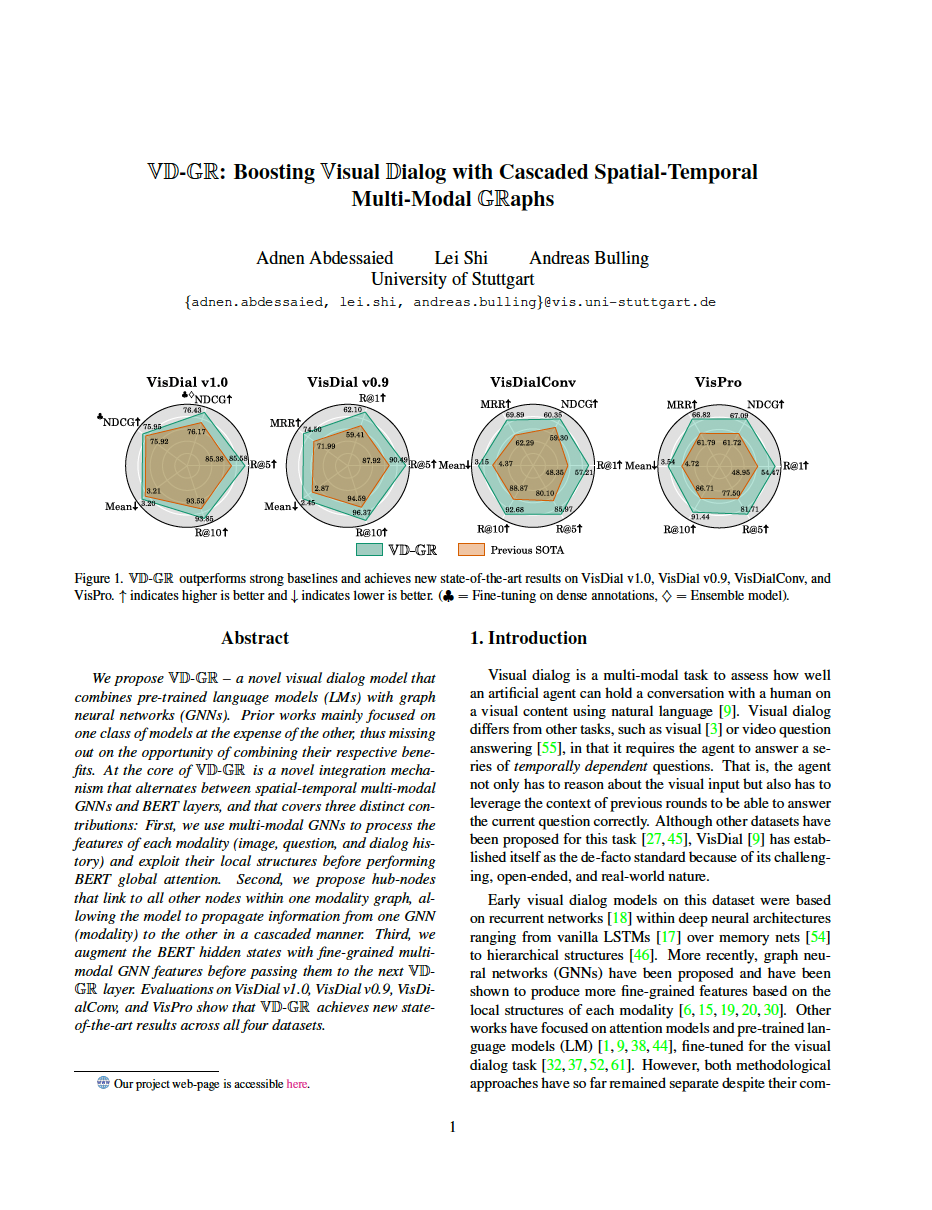

VD-GR: Boosting Visual Dialog with Cascaded Spatial-Temporal Multi-Modal GRaphs

Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pp. 5805–5814, 2024.

Social and Societal Aspects of Collaborative AI

As artificially intelligent systems become more deeply embedded and collaborate with us in an increasing number of everyday situations, the social and ethical implications of their actions, the explainability of their behaviour, and questions related to the privacy of the information they obtain in interactions will become crucial. Privacy-preserving methods are essential to protect users' sensitive information, particularly as collaboration may reveal or require sharing personal information. Ethical frameworks ensure that collaborative AI systems align with societal values, addressing biases, fairness, and accountability in decision-making.

-

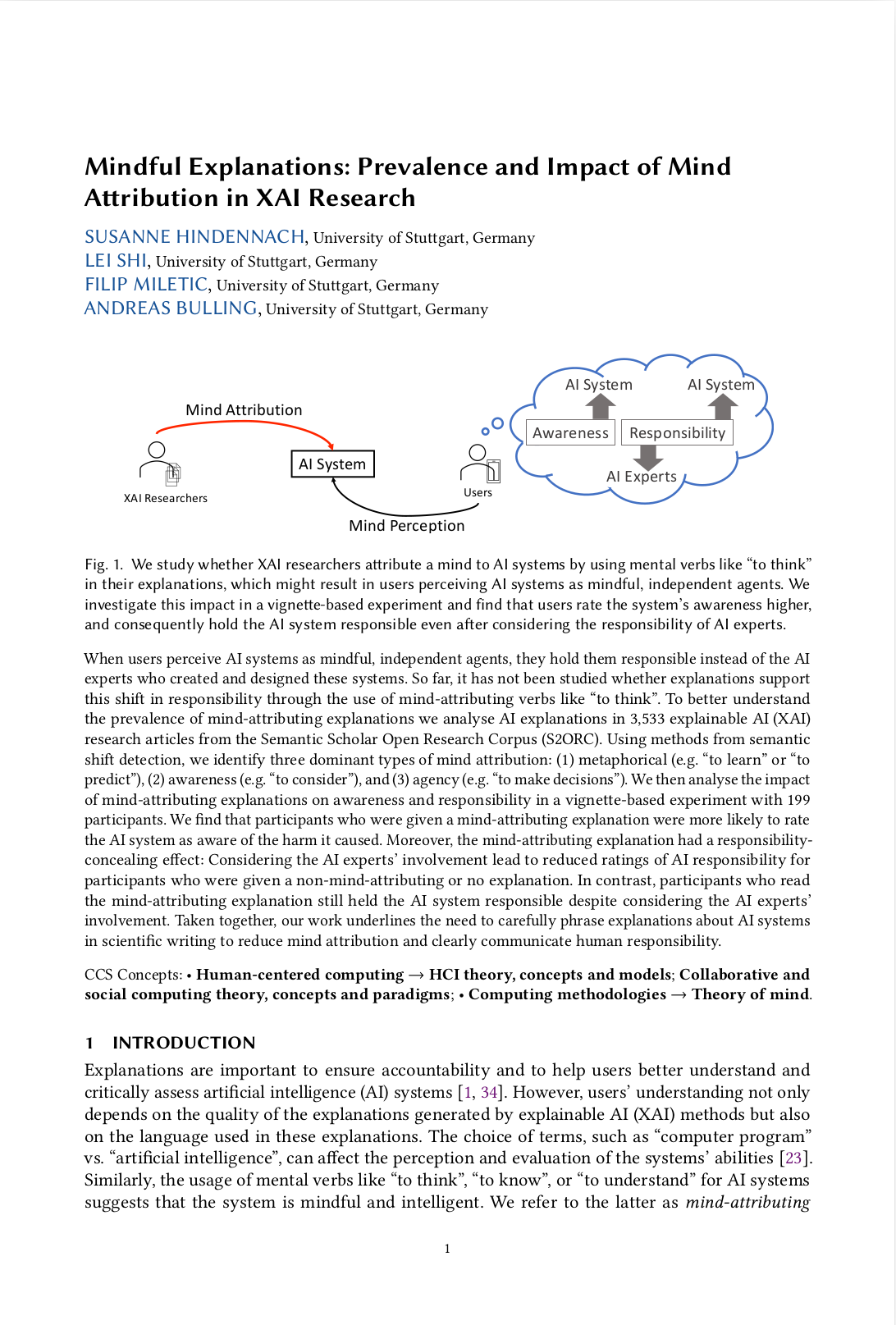

Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (CSCW), pp. 1–42, 2024.

-

PrivatEyes: Appearance-based Gaze Estimation Using Federated Secure Multi-Party Computation

Proc. ACM on Human-Computer Interaction (PACM HCI), 8 (ETRA), pp. 1–23, 2024.

-

Impact of Privacy Protection Methods of Lifelogs on Remembered Memories

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–10, 2023.

-

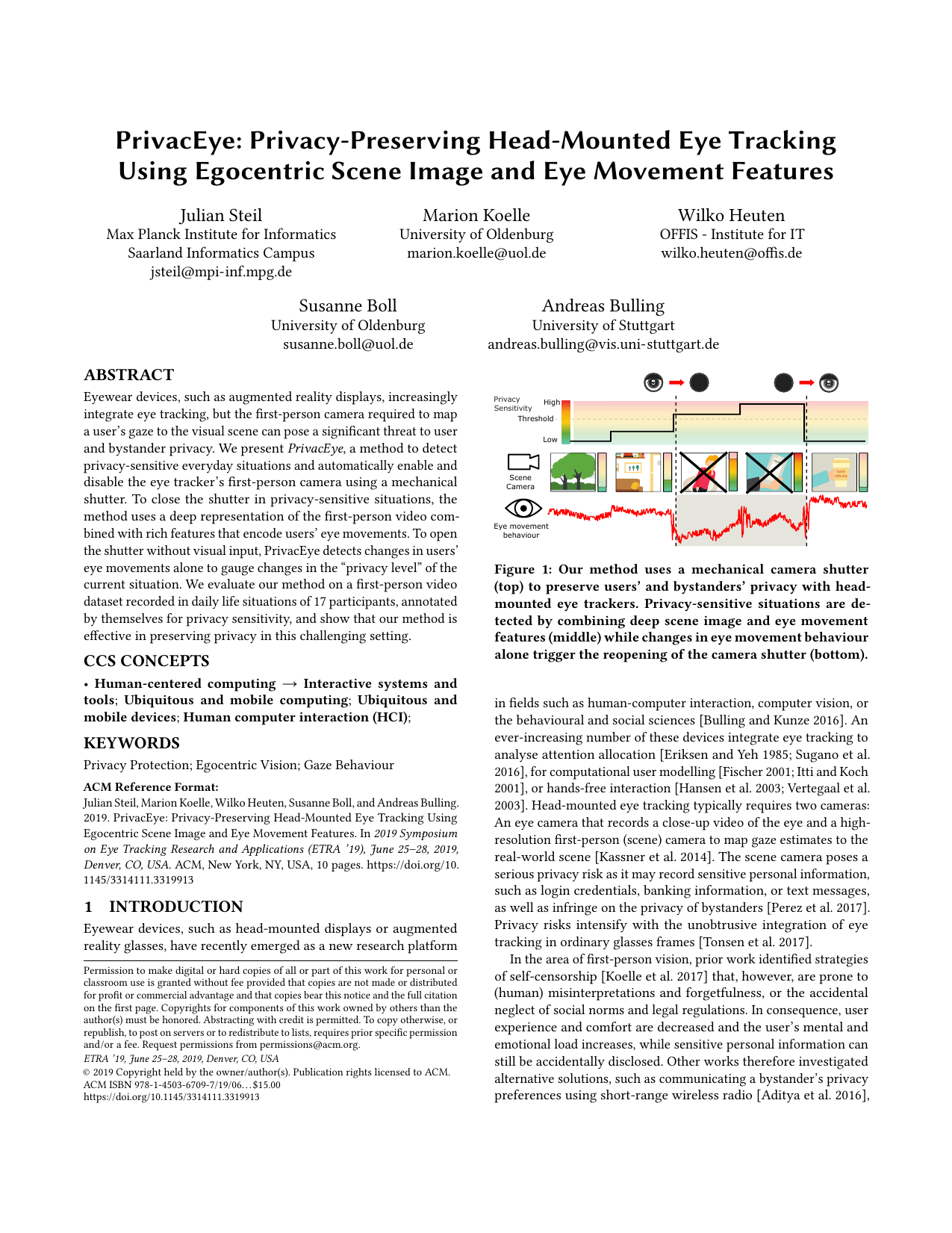

PrivacEye: Privacy-Preserving Head-Mounted Eye Tracking Using Egocentric Scene Image and Eye Movement Features

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2019.

-

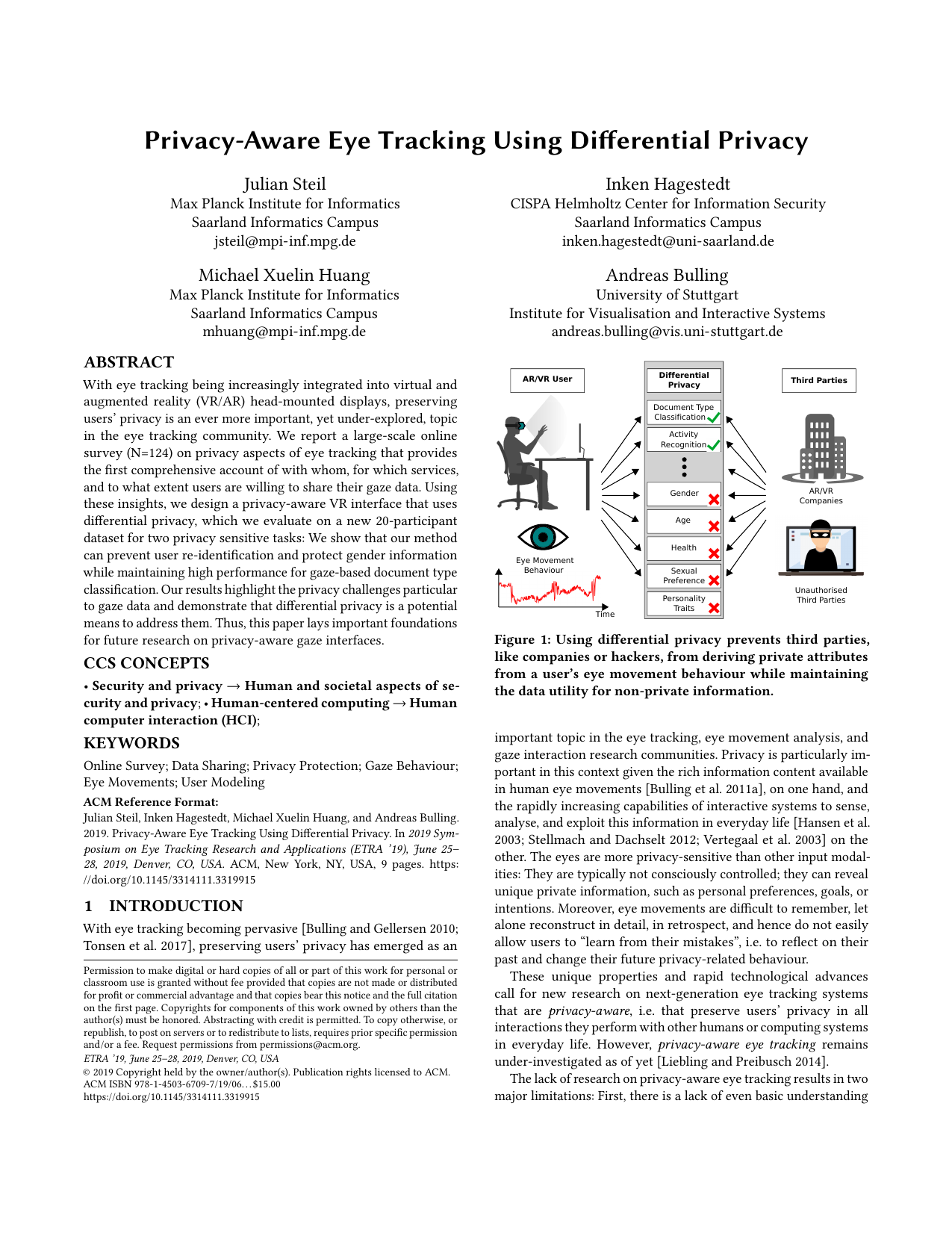

Privacy-Aware Eye Tracking Using Differential Privacy

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2019.