Explaining Disagreement in Visual Question Answering Using Eye Tracking

Susanne Hindennach, Lei Shi, Andreas Bulling

Proc. International Workshop on Pervasive Eye Tracking and Mobile Gaze-Based Interaction (PETMEI), pp. 1–7, 2024.

Abstract

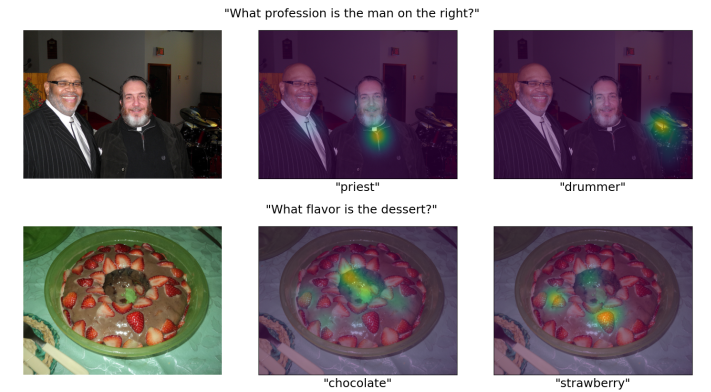

When presented with the same question about an image, human annotators often give valid but disagreeing answers, indicating that their reasoning was different. Such differences are lost in the single ground truth label used to train and evaluate visual question answering (VQA) methods. In this work, we explore whether visual attention maps, created using stationary eye tracking, provide insight into the reasoning underlying disagreement in VQA. We first manually inspect attention maps in the recent VQA-MHUG dataset and find cases in which attention differs consistently for disagreeing answers. We further evaluate the suitability of four different similarity metrics to detect attention differences matching the disagreement. We show that attention maps plausibly surface differences in reasoning underlying one type of disagreement, and that the metrics complementarily detect them. Taken together, our results represent an important first step toward leveraging eye tracking to explain disagreement in VQA.

Links

Paper: hindennach24_petmei.pdf

BibTeX

@inproceedings{hindennach24_petmei,
  title = {Explaining Disagreement in Visual Question Answering Using Eye Tracking},
  author = {Hindennach, Susanne and Shi, Lei and Bulling, Andreas},
  year = {2024},
  pages = {1--7},
  doi = {10.1145/3649902.3656356},
  booktitle = {Proc. International Workshop on Pervasive Eye Tracking and Mobile Gaze-Based Interaction (PETMEI)}
}