Probing Interactive Explainable AI

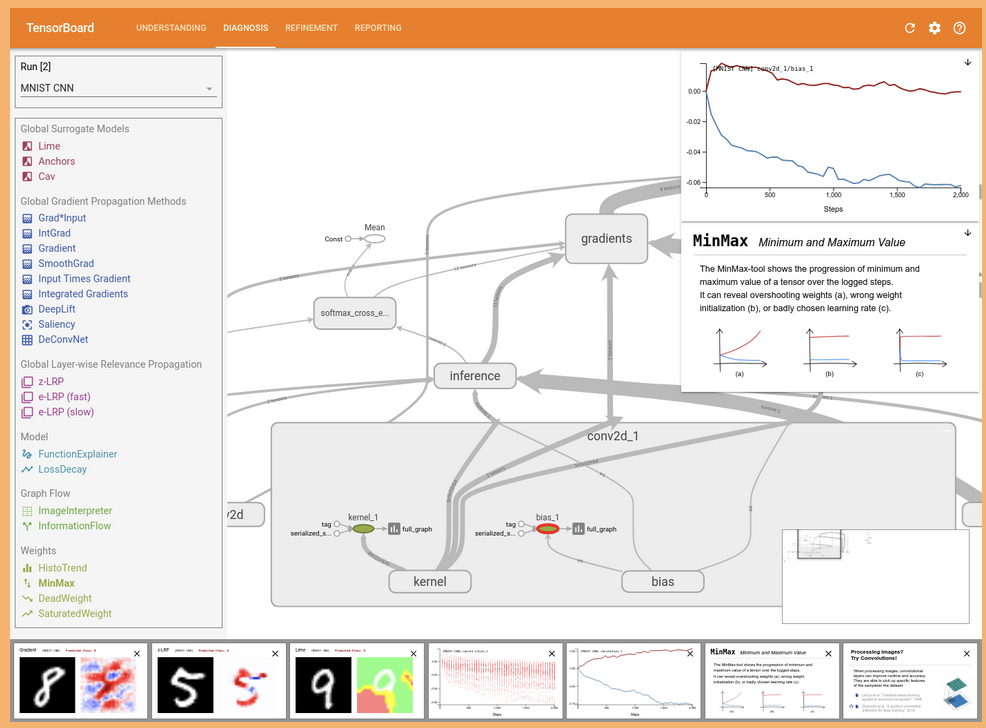

Description: Kaur et al. (2020) showed that data scientists often fail to detect common issues in AI systems when using existing explainable AI (XAI) methods. Several tools aim to make XAI methods easier to work with, such as explAIner (Spinner et al., 2020), BEAMES (Das et al., 2019), QUESTO (Das et al., 2020), and the What-If Tool.

The goal of this project is to compare interactive XAI tools and to investigate whether they help users find issues in AI systems better than existing, non-interactive XAI methods. To this end, the contextual inquiry used by Kaur et al. (2020) can be replicated with a selection of interactive XAI tools.

Supervisor: Susanne Hindennach

Distribution: 20% Literature, 20% Experiment design, 20% Implementation, 40% Data analysis

Requirements: Interest in XAI and (UX) study design

Literature:

Das, S., D. Cashman, R. Chang, and A. Endert. 2019. BEAMES: Interactive Multimodel Steering, Selection, and Inspection for Regression Tasks. IEEE Computer Graphics and Applications, 39(5), pp. 20–32.

Das, S., S. Xu, M. Gleicher, R. Chang, and A. Endert. 2020. QUESTO: Interactive Construction of Objective Functions for Classification Tasks. Computer Graphics Forum, 39(3), pp. 153–165.

Kaur, H., H. Nori, S. Jenkins, R. Caruana, H. Wallach, and J. Wortman Vaughan. 2020. Interpreting Interpretability: Understanding Data Scientists’ Use of Interpretability Tools for Machine Learning. Proceedings of the Conference on Human Factors in Computing Systems (CHI), pp. 1–14.

Spinner, T., U. Schlegel, H. Schäfer, and M. El-Assady. 2020. explAIner: A visual analytics framework for interactive and explainable machine learning. IEEE Transactions on Visualization and Computer Graphics, 26(1), pp. 1064–1074.

What-If Tool: https://github.com/pair-code/what-if-tool