MPIIEgoFixation: Fixation Detection for Head-Mounted Eye Tracking Based on Visual Similarity of Gaze Targets

Abstract

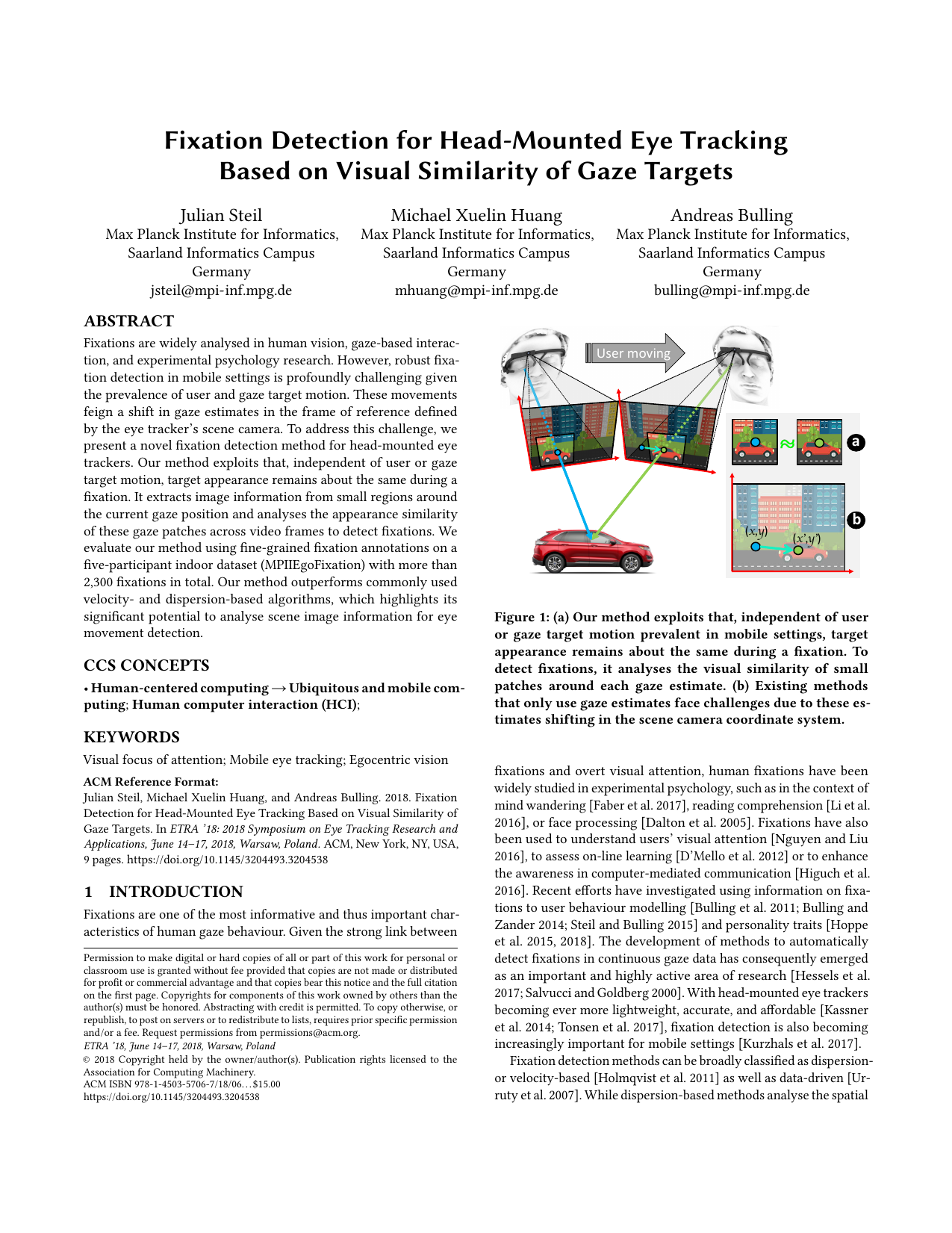

Fixations are widely analysed in human vision, gaze-based interaction, and experimental psychology research. However, robust fixation detection in mobile settings is profoundly challenging given the prevalence of user and gaze target motion. These movements cause apparent shifts in the gaze estimates in the frame of reference defined by the eye tracker’s scene camera, even when the user keeps fixating the same target. To address this challenge, we present a novel fixation detection method for head-mounted eye trackers. Our method exploits the fact that, independent of user or gaze target motion, target appearance remains about the same during a fixation. It extracts image information from small regions around the current gaze position and analyses the appearance similarity of these gaze patches across video frames to detect fixations. We evaluate our method using fine-grained fixation annotations on a five-participant indoor dataset (MPIIEgoFixation) with more than 2,300 fixations in total. Our method outperforms commonly used velocity- and dispersion-based algorithms, which highlights the potential of analysing scene image information for eye movement detection.

Download (3.2 Mb)

Contact: Andreas Bulling. Videos can be requested here.

The data is only to be used for non-commercial scientific purposes. If you use this dataset in a scientific publication, please cite the following paper:

- Fixation Detection for Head-Mounted Eye Tracking Based on Visual Similarity of Gaze Targets. Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2018.

Dataset

We evaluated our method on a recent mobile eye tracking dataset [Sugano and Bulling 2015]. This dataset is particularly suitable because participants walked around throughout the recording period. Walking leads to a large amount of head motion and scene dynamics, which makes the detection task both challenging and interesting. Since the dataset was not yet publicly available, we requested it directly from the authors. The eye tracking headset (Pupil [Kassner et al. 2014]) featured a 720p world camera as well as an infra-red eye camera mounted on an adjustable camera arm. Both cameras recorded at 30 Hz. Egocentric videos were recorded using the world camera and synchronised via hardware timestamps. Gaze estimates were provided with the dataset.

The dataset consists of 5 folders (Indoor-Recordings: P1 (1 recording), P2 (1 recording), P3 (2 recordings), P4 (1 recording), P5 (2 recordings)). Each folder contains a data file as well as a ground truth file that lists the fixation IDs together with the start and end frame of each fixation in the corresponding scene video. Both files are available in .npy and .csv format.
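As a minimal sketch, a ground truth file could be expanded into one label per scene frame as shown below. It assumes each row holds a fixation ID, a start frame, and an end frame in that order, that the end frame is inclusive, and that frames are 0-indexed. None of these details is confirmed by the description above, and the function name and the file name in the comment are hypothetical.

```python
import numpy as np


def fixations_to_frame_labels(ground_truth, n_frames, no_fixation=-1):
    """Expand (fixation_id, start_frame, end_frame) rows into one label per frame.

    Assumption: end_frame is inclusive and frames are 0-indexed.
    Frames that belong to no fixation receive the `no_fixation` label.
    """
    labels = np.full(n_frames, no_fixation, dtype=int)
    for fix_id, start, end in np.asarray(ground_truth, dtype=int):
        labels[start:end + 1] = fix_id
    return labels


# Hypothetical usage with a real file (the file name is an assumption):
# ground_truth = np.load("P1/ground_truth.npy")

# Synthetic example: two fixations in a 16-frame clip.
example = [[1, 0, 5], [2, 9, 14]]
labels = fixations_to_frame_labels(example, n_frames=16)
```

Per-frame labels in this form are convenient for comparing a detector's output with the annotation frame by frame.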

Data Annotation

Given the significant amount of work and cost of fine-grained fixation annotation, we used only a subset from five participants (four males, one female, aged 20–33). This subset contains five videos, each lasting five minutes (i.e. 9,000 frames each). We asked one annotator to annotate fixations frame-by-frame for all recordings using Advene [Aubert et al. 2012]. Each frame was assigned a fixation ID, so that frames belonging to the same fixation had the same ID. We instructed the annotator to start a new fixation segment after an observable gaze shift and a change of gaze target. Similarly, a fixation segment should end when the patch content changes noticeably, even if the gaze point remains at the same position in the scene video. In addition, if a fixation segment lasted for less than five consecutive frames (i.e. approximately 150 ms), it was to be discarded. During the annotation, the gaze patch as well as the scene video superimposed with gaze points were shown to the annotator. The annotator was allowed to scroll back and forth along the timeline to mark and correct the fixation annotation.
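The minimum-duration rule can be illustrated with a short sketch that works on a per-frame sequence of fixation IDs. The function name, the `-1` label for frames without a fixation, and the example sequence are assumptions made for illustration; the annotation itself was done manually in Advene, not with this code.

```python
import numpy as np

MIN_FRAMES = 5  # minimum fixation length in frames, following the rule above


def drop_short_fixations(frame_ids, min_frames=MIN_FRAMES, no_fixation=-1):
    """Relabel runs of identical fixation IDs shorter than min_frames."""
    ids = np.asarray(frame_ids, dtype=int).copy()
    if ids.size == 0:
        return ids
    # Indices at which a new run of identical IDs begins.
    starts = np.flatnonzero(np.r_[True, ids[1:] != ids[:-1]])
    # Exclusive end index of each run.
    ends = np.r_[starts[1:], ids.size]
    for s, e in zip(starts, ends):
        if ids[s] != no_fixation and e - s < min_frames:
            ids[s:e] = no_fixation
    return ids


# Synthetic example: fixation 2 spans only three frames and is discarded.
frame_ids = [1, 1, 1, 1, 1, 1, -1, 2, 2, 2, -1, 3, 3, 3, 3, 3]
cleaned = drop_short_fixations(frame_ids)
```

Runs are located from the positions where the ID changes, so the check depends only on the number of consecutive frames and not on the ID values.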

Annotation Scheme

| Scene Frame Number | Scene Frame Time | Eye Frame Number | Eye Frame Time | Normalised Pupil_X | Normalised Pupil_Y | Normalised Gaze_X | Normalised Gaze_Y | Eye Movement | Patch Similarity |