Predicting Next Actions and Latent Intents during Text Formatting

Guanhua Zhang, Susanne Hindennach, Jan Leusmann, Felix Bühler, Benedict Steuerlein, Sven Mayer, Mihai Bâce, Andreas Bulling

Proc. the CHI Workshop Computational Approaches for Understanding, Generating, and Adapting User Interfaces, pp. 1–6, 2022.

Abstract

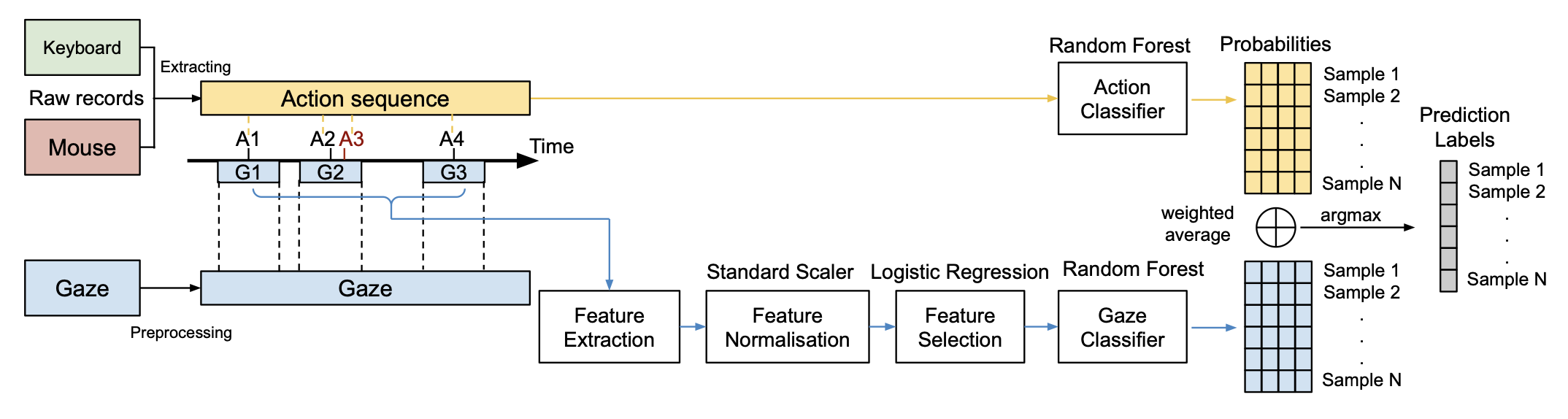

In this work we investigate the challenging task of predicting user intents from mouse and keyboard input as well as gaze behaviour. In contrast to prior work we study intent prediction at two different resolutions on the behavioural timeline: predicting future input actions as well as latent intents to achieve a high-level interaction goal. Results from a user study (N=15) on a sample text formatting task show that the sequence of prior actions is more informative for intent prediction than gaze. Only using the action sequence, we can predict the next action and the high-level intent with an accuracy of 66% and 96%, respectively. In contrast, accuracy when using features extracted from gaze behaviour was significantly lower, at 41% and 46%. This finding is important for the development of future anticipatory user interfaces that aim to proactively adapt to user intents and interaction goals.

Links

Paper: zhang22_caugaui.pdf

BibTeX

@inproceedings{zhang22_caugaui,
  author = {Zhang, Guanhua and Hindennach, Susanne and Leusmann, Jan and Bühler, Felix and Steuerlein, Benedict and Mayer, Sven and Bâce, Mihai and Bulling, Andreas},
  title = {Predicting Next Actions and Latent Intents during Text Formatting},
  booktitle = {Proc. the CHI Workshop Computational Approaches for Understanding, Generating, and Adapting User Interfaces},
  year = {2022},
  pages = {1--6}
}