Interpreting Attention-based Visual Question Answering Models with Multimodal Human Visual Attention

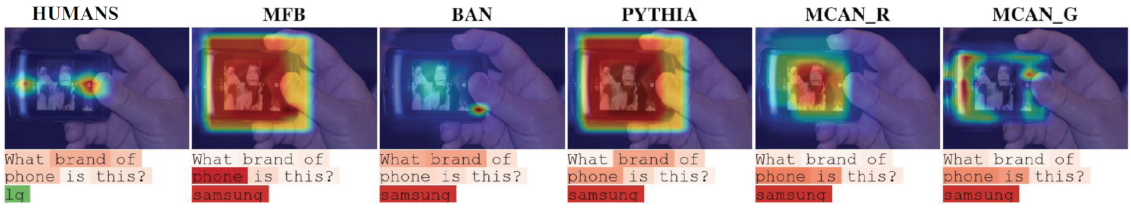

Description: In visual question answering (VQA), the task for the network is to answer a natural-language question about a given image (Agrawal et al. 2015). VQA is a machine comprehension task and, as such, allows researchers to test whether models can learn to reason across modalities. It attracts researchers from various backgrounds because it intertwines computer vision and natural language processing. Inspired by human visual attention, many recent models incorporate neural attention mechanisms. Attention gives the network the ability to focus on particular elements of its input, and attention-based networks consequently often yield better results. However, performance is no longer the only focus. In addition to building high-performing models, researchers (Das et al. 2016, Sood et al. 2021) are also interested in interpreting neural attention and bridging the gap between neural attention and human visual attention.

To that end, in this project the student will interpret the learned multimodal attention of off-the-shelf VQA models that achieve state-of-the-art (SOTA) results. The student will extend the work of Sood et al. 2021 by applying the same approach for evaluating human versus machine multimodal attention to additional SOTA attention-based VQA models, with a focus on current high-performing transformer-based networks.

Supervisor: Ekta Sood

Distribution: 20% Literature, 10% Data Collection, 30% Implementation, 40% Data Analysis and Evaluation.

Requirements: Interest in attention-based neural networks, cognitive science, and multimodal representation learning; experience with data analysis/statistics; familiarity with machine learning; and exposure to at least one of the following frameworks: TensorFlow, PyTorch, or Keras.

Literature: Aishwarya Agrawal, Stanislaw Antol, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, and Devi Parikh. 2015. VQA: Visual question answering. arXiv:1505.00468.

Abhishek Das, Harsh Agrawal, C. Lawrence Zitnick, Devi Parikh, and Dhruv Batra. 2016. Human attention in visual question answering: Do humans and deep networks look at the same regions? arXiv:1606.03556.

Ekta Sood, Fabian Kögel, Florian Strohm, Prajit Dhar, and Andreas Bulling. 2021. VQA-MHUG: A gaze dataset to study multimodal neural attention in VQA. Proceedings of the 2021 ACL SIGNLL Conference on Computational Natural Language Learning (CoNLL).