Attack and Defend: Gaze Data Poisoning

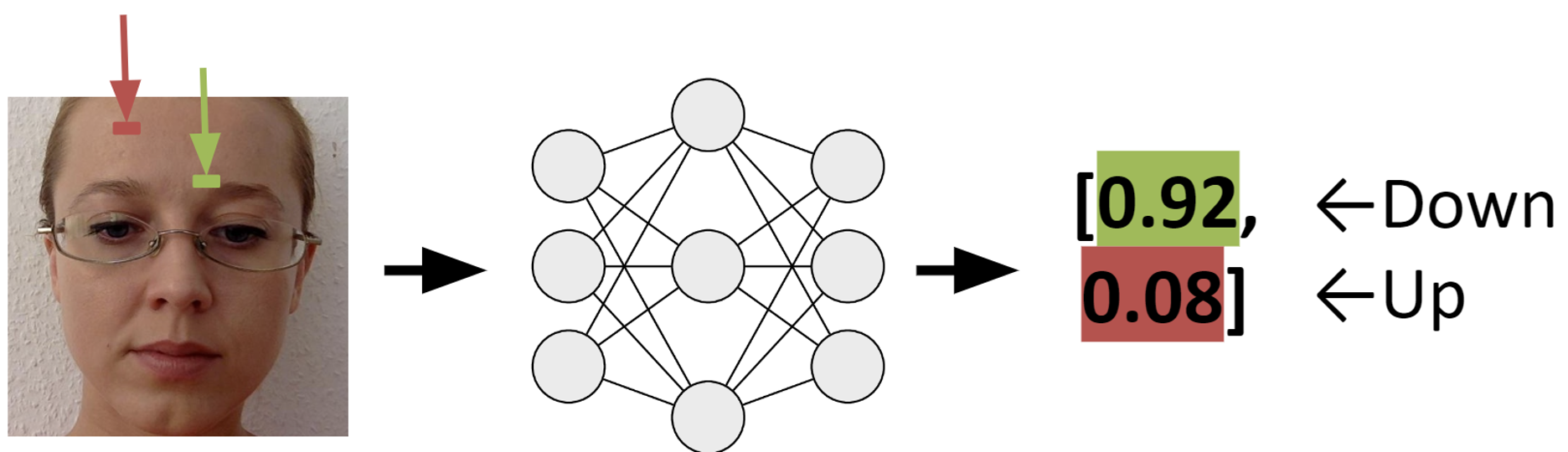

Description: Poisoning attacks on the training of computer vision models have been widely studied in the literature [1]. An adversary can degrade the performance of a gaze estimation model by manipulating the features or labels of its training data. As a defense mechanism, adversarial training [2] can be applied to generate more robust datasets and models.
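To make the two attack surfaces concrete, the following is a minimal sketch of label poisoning and feature poisoning. It runs on synthetic arrays rather than real gaze data. The function names `poison_labels` and `poison_features`, the array shapes, the poisoning fraction, and the perturbation bounds are all illustrative assumptions, not part of the project specification.

```python
import numpy as np


def poison_labels(labels, fraction, max_shift_rad, rng):
    """Shift a random fraction of gaze labels (pitch, yaw in radians)."""
    poisoned = labels.copy()
    n_poison = int(round(fraction * len(labels)))
    idx = rng.choice(len(labels), size=n_poison, replace=False)
    # Shift magnitude in [max_shift/2, max_shift] with a random sign,
    # so every selected label is actually changed.
    magnitude = rng.uniform(0.5 * max_shift_rad, max_shift_rad,
                            size=(n_poison, labels.shape[1]))
    sign = rng.choice([-1.0, 1.0], size=magnitude.shape)
    poisoned[idx] += sign * magnitude
    return poisoned, idx


def poison_features(images, fraction, epsilon, rng):
    """Add bounded uniform noise to a random fraction of images in [0, 1]."""
    poisoned = images.copy()
    n_poison = int(round(fraction * len(images)))
    idx = rng.choice(len(images), size=n_poison, replace=False)
    noise = rng.uniform(-epsilon, epsilon, size=poisoned[idx].shape)
    poisoned[idx] = np.clip(poisoned[idx] + noise, 0.0, 1.0)
    return poisoned, idx


rng = np.random.default_rng(0)
# Synthetic stand-ins (assumed shapes): grayscale patches and (pitch, yaw) labels.
images = rng.uniform(0.0, 1.0, size=(100, 36, 60))
labels = rng.uniform(-0.5, 0.5, size=(100, 2))

bad_labels, label_idx = poison_labels(labels, fraction=0.1, max_shift_rad=0.3, rng=rng)
bad_images, image_idx = poison_features(images, fraction=0.1, epsilon=0.05, rng=rng)
print("poisoned labels:", len(label_idx), "poisoned images:", len(image_idx))
```

Training one model on the clean arrays and another on the poisoned arrays, then comparing their angular error on a held-out clean set, is one way to quantify the impact of each attack.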

Goal: The goal of this project is to create adversarial samples for the MPIIGaze [3] dataset and train a robust model on the generated dataset.
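As a rough illustration of this goal, the sketch below generates adversarial samples with the one-step fast gradient sign method (FGSM) and trains on a mix of clean and adversarial data. FGSM is chosen here only for brevity; it is not the "free" adversarial training procedure of [2]. A linear regressor on synthetic data stands in for a real gaze network and for MPIIGaze, and all names and hyperparameters (`fgsm`, `adversarial_training`, `epsilon`, `lr`, `steps`) are assumptions.

```python
import numpy as np


def mse_per_sample(x, y, W, b):
    """Squared gaze error per sample for the linear model x @ W + b."""
    return np.sum((x @ W + b - y) ** 2, axis=1)


def fgsm(x, y, W, b, epsilon):
    """One-step FGSM: move each input by epsilon along the sign of the loss gradient."""
    grad_x = 2.0 * (x @ W + b - y) @ W.T   # d(loss)/d(x) for the linear model
    return np.clip(x + epsilon * np.sign(grad_x), 0.0, 1.0)


def adversarial_training(x, y, epsilon, lr=0.01, steps=200, seed=0):
    """Gradient descent on an equal mix of clean and FGSM-perturbed samples."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 0.01, size=(x.shape[1], y.shape[1]))
    b = np.zeros(y.shape[1])
    for _ in range(steps):
        # Regenerate the attack against the current model at every step.
        x_adv = fgsm(x, y, W, b, epsilon)
        x_mix = np.concatenate([x, x_adv])
        y_mix = np.concatenate([y, y])
        residual = x_mix @ W + b - y_mix
        W -= lr * 2.0 * x_mix.T @ residual / len(x_mix)
        b -= lr * 2.0 * residual.mean(axis=0)
    return W, b


rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=(256, 32))      # synthetic flattened "images"
y = x @ rng.normal(0.0, 0.1, size=(32, 2))     # synthetic (pitch, yaw) targets

W, b = adversarial_training(x, y, epsilon=0.02)
x_adv = fgsm(x, y, W, b, epsilon=0.02)
print("clean loss:", mse_per_sample(x, y, W, b).mean())
print("adversarial loss:", mse_per_sample(x_adv, y, W, b).mean())
```

In an actual implementation the gradient with respect to the input would come from the framework's automatic differentiation rather than the closed-form expression used for this linear model.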

Supervisor: Mayar Elfares

Distribution: 20% Literature, 60% Implementation, 20% Analysis

Requirements: Good Python skills (PyTorch or TensorFlow)

Literature:

[1] Xu, Mingjie, Haofei Wang, Yunfei Liu and Feng Lu. 2021. Vulnerability of Appearance-based Gaze Estimation. arXiv:2103.13134.

[2] Shafahi, Ali, Mahyar Najibi, Amin Ghiasi, Zheng Xu, John Dickerson, Christoph Studer, Larry S. Davis, Gavin Taylor and Tom Goldstein. 2019. Adversarial Training for Free! Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS).

[3] Zhang, Xucong, Yusuke Sugano, Mario Fritz and Andreas Bulling. 2017. It's Written All Over Your Face: Full-Face Appearance-Based Gaze Estimation. Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW).