Consciousness in Encoded States

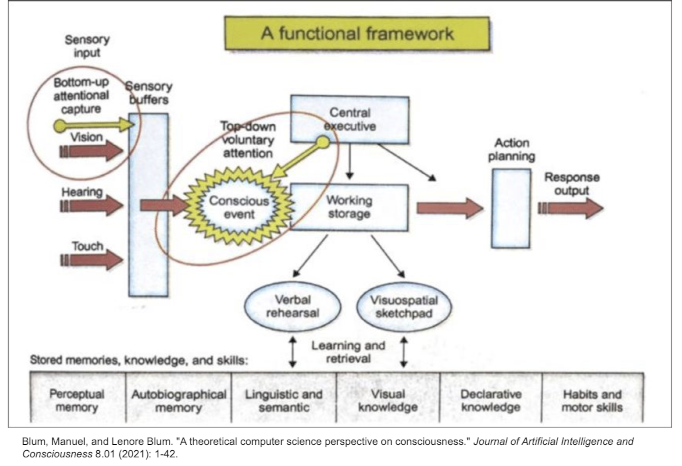

Description: Bengio (2017) characterizes consciousness as awareness of (or attention to) a small number of high-level, abstract features that are deemed relevant to the task at hand. The research question is: can using encoded states instead of fine-tuning, or in combination with it, generalize better across tasks? If so, we might be able to argue that this models some form of consciousness or, more cautiously, that it is inspired by Bengio (2017), in which the author postulates that such a mechanism would be a form of consciousness.

The aim is to better understand the encoded state of pre-trained language models. Bengio (2017) suggests that a form of consciousness can be seen as “the sense of awareness or attention rather than qualia”. In this project, the student will implement the pre-trained BERT model (encoder) for three NLP tasks: QA, paraphrasing, and summarization. To do this, the student will have to implement a data augmentation method to obtain parallel corpora for the tasks. These fine-tuned models are the baselines.

The student will then swap the encoded state of the models between the different tasks without fine-tuning, that is, without continuing to train for the alternative task. At this point, the student will analyze whether the model still performs well when the encoded state is swapped, whether the attention maps look different, and how the loss landscape visualization compares to that of the baseline models. Next, the student will implement a method to regularize the encoded states between tasks, in place of the fine-tuning used for the baselines, then evaluate model performance when swapping the encoded state and repeat the qualitative analysis described above. Lastly, the student will both regularize the encoded state and fine-tune, and evaluate the resulting model against the previous experimental results.
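The project does not prescribe how the encoded states should be regularized between tasks, so the following is only a minimal sketch of one possible reading. It assumes that each task's encoded state is a sequence of token vectors, that sequences are mean-pooled to a fixed size, and that the penalty is the mean squared distance between the pooled vectors. The function names (`mean_pool`, `encoded_state_penalty`, `regularized_loss`) and the `weight` parameter are hypothetical and chosen for illustration. Plain Python lists stand in for framework tensors.

```python
from math import fsum


def mean_pool(token_states):
    """Average a sequence of token vectors into one fixed-size vector."""
    n = len(token_states)
    dim = len(token_states[0])
    return [fsum(tok[d] for tok in token_states) / n for d in range(dim)]


def encoded_state_penalty(states_a, states_b):
    """Mean squared distance between the pooled encoded states of two tasks.

    Assumption: both inputs use the same hidden dimension; sequence
    lengths may differ because each sequence is pooled first.
    """
    pooled_a = mean_pool(states_a)
    pooled_b = mean_pool(states_b)
    return fsum((a - b) ** 2 for a, b in zip(pooled_a, pooled_b)) / len(pooled_a)


def regularized_loss(task_loss, states_a, states_b, weight=0.1):
    """Task loss plus a weighted penalty pulling the two encoded states together."""
    return task_loss + weight * encoded_state_penalty(states_a, states_b)


# Toy encoded states for two tasks (hidden size 2, different lengths).
qa_states = [[1.0, 0.0], [3.0, 2.0]]
paraphrase_states = [[2.0, 1.0], [2.0, 3.0], [2.0, 2.0]]

penalty = encoded_state_penalty(qa_states, paraphrase_states)
total = regularized_loss(1.0, qa_states, paraphrase_states)
```

In a real experiment the same penalty would be computed on the encoder outputs inside the training loop of the chosen framework. The weight controls how strongly the encoded states of different tasks are pulled toward each other relative to the task loss.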

Supervisor: Ekta Sood

Distribution: 20% Literature, 20% Data Collection, 30% Implementation, 30% Analysis

Requirements: Interest in NLP, cognitive modeling, neural interpretability, and generalizability. Familiarity with data processing and data augmentation methods and some machine learning experience. Exposure to one of the following frameworks is helpful: TensorFlow, PyTorch, or Keras.

Literature: Yoshua Bengio. 2017. The consciousness prior. arXiv:1709.08568.