Reasoning about Intermodal Correspondences — Aligned Representation Learning with Human Attention

Description: Vision and language are two central modalities through which humans acquire knowledge and conceptualize the world. Recent machine learning approaches have combined these modalities in a variety of tasks, aiming to leverage the human ability to learn from a rich perceptual environment, that is, multimodal knowledge acquisition from the environment we interact with.

Izadinia et al. (2015) combine these two modalities through their proposed "Segment-Phrase Table" (SPT), applied to semantic segmentation. The SPT is a large collection of one-to-one correspondences between the elements of two sets, image segments and textual phrases; in other words, the authors build bijective associations between the segments of an image and the phrases describing those segments. For example, if an image depicts a horse jumping over a log, textual phrases are generated (semi-supervised) for segments of that image, and these segments are organized hierarchically, somewhat like a parse tree, so that the hierarchy of features across segments is taken into account. The goal of their work was to cluster, localize and segment instances by reasoning about commonalities (Izadinia et al., 2015). Ultimately, the authors reported state-of-the-art results on benchmark datasets for semantic segmentation and, perhaps more importantly, gave their model a richer semantic understanding, with the phrases acting as a catalyst for enhanced contextual reasoning.
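To make the idea of bijective segment-phrase associations concrete, the toy sketch below encodes only the one-to-one constraint: each segment pairs with at most one phrase and each phrase with at most one segment. It does not reproduce how Izadinia et al. (2015) actually construct the SPT; the class `SegmentPhraseTable`, its methods, and the string segment identifiers (standing in for real segmentation masks) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SegmentPhraseTable:
    """Hypothetical toy container: a bijection between segment ids and phrases.

    Real SPT entries pair phrases with segmentation masks; plain string ids
    are used here purely for illustration.
    """
    seg_to_phrase: dict = field(default_factory=dict)
    phrase_to_seg: dict = field(default_factory=dict)

    def add(self, segment_id: str, phrase: str) -> None:
        # Reject any pair that would give a segment or a phrase two partners,
        # since that would break the one-to-one (bijective) property.
        if self.seg_to_phrase.get(segment_id, phrase) != phrase:
            raise ValueError(f"segment {segment_id!r} is already paired")
        if self.phrase_to_seg.get(phrase, segment_id) != segment_id:
            raise ValueError(f"phrase {phrase!r} is already paired")
        # Store both directions so lookups work either way.
        self.seg_to_phrase[segment_id] = phrase
        self.phrase_to_seg[phrase] = segment_id

    def phrase_for(self, segment_id: str) -> str:
        return self.seg_to_phrase[segment_id]

    def segment_for(self, phrase: str) -> str:
        return self.phrase_to_seg[phrase]


# Toy entries for the "horse jumping over a log" example.
spt = SegmentPhraseTable()
spt.add("img1/seg3", "horse jumping")
spt.add("img1/seg7", "log")
print(spt.segment_for("log"))  # img1/seg7
```

Keeping both directions in separate dictionaries makes the inverse lookup (phrase to segment) as cheap as the forward one, which is what a bijection promises.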

Along similar lines, Aytar et al. (2017) leveraged aligned visual, textual and audio corpora to train a neural network that learns aligned representations across all three modalities. Specifically, they trained on pairs of either images and sentences or images and audio (extracted from videos); nevertheless, their network also learned aligned representations between corresponding text and audio, even though it was never trained on text-audio pairs. These aligned representations were evaluated with a cross-modal retrieval task: given a query from only one of the three modalities (for example, an image), they measured how accurately the corresponding item from another modality (for example, the matching audio) was retrieved. The results indicate that the aligned cross-modal representations improved performance on retrieval and classification tasks. Moreover, because the network learned to relate audio and text without being explicitly trained on such pairs, the results support the notion that images may bridge the gap between the other two modalities.
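The cross-modal retrieval evaluation described above can be sketched as follows. The sketch assumes both modalities are already embedded in a shared vector space, that the i-th query and the i-th candidate form the aligned pair, and that similarity is measured with cosine similarity. Recall@K is used here as one common retrieval metric and is not necessarily the metric reported by Aytar et al. (2017); the embeddings are invented toy vectors, not model outputs.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def recall_at_k(queries, candidates, k=1):
    """Fraction of queries whose aligned partner (same index) ranks in the top k.

    queries[i] and candidates[i] are assumed to embed the same underlying item
    in two different modalities (e.g. an image and its sound).
    """
    hits = 0
    for i, q in enumerate(queries):
        scores = [cosine(q, c) for c in candidates]
        # Candidate indices sorted by descending similarity to the query.
        ranking = sorted(range(len(candidates)), key=lambda j: scores[j], reverse=True)
        if i in ranking[:k]:
            hits += 1
    return hits / len(queries)


# Toy 2-D embeddings (invented for illustration).
image_emb = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
audio_emb = [[0.9, 0.1], [0.1, 0.9], [0.6, 0.8]]
print(recall_at_k(image_emb, audio_emb, k=1))  # 1.0
```

The same function evaluates any modality pair, including one the model was never trained on (such as text-audio), simply by passing embeddings from those two modalities.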

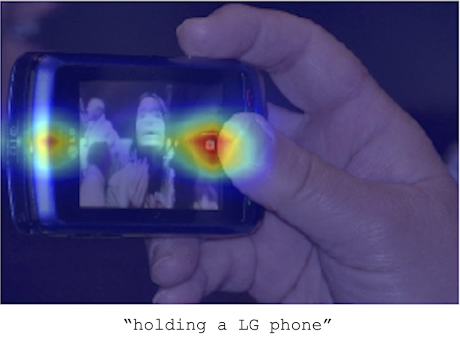

Building on this previous work, this project aims to further implement and reason about these intermodal correspondences. As a starting point, I propose implementing an existing state-of-the-art semantic segmentation model (from a paper or a GitHub repository) that incorporates the SPT proposed by Izadinia et al. (2015); the choice of task is not a hard constraint. In addition to the alignment between text phrases and image segments, the network would be extended with, and trained on, a third modality: gaze data (gaze data corresponding to images is available for various benchmark datasets, such as MS COCO and SUN). The objective is to create a composition of three bijective functions, aligning the three modalities for a high-level task.

The central research question is whether information can be translated or bridged across these three modalities. Relatedly, how does gaze information enhance a task such as semantic segmentation, and does it provide the network with additional semantic context? For example, the SPT can be used to evaluate cross-modal semantic similarity. Finally, following the work of Aytar et al. (2017), although this network would never be trained on text-gaze pairs, the aim is to investigate whether it can learn representations linking text and gaze by using the images to bridge the gap.
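One possible way to express the proposed training setup is a contrastive objective over the two kinds of pairs that are actually available. The sketch below is an assumption rather than a fixed design: it uses a generic InfoNCE-style loss with cosine similarity (not necessarily the loss used by Aytar et al., 2017), and the functions `info_nce` and `trimodal_loss`, the temperature value, and the toy embeddings are hypothetical. Gaze is assumed to be already encoded as a vector, for example by some encoder applied to a fixation map. The key design choice is that the loss contains image-text and image-gaze terms but no text-gaze term.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE-style loss: anchors[i] should be closer to positives[i] than to
    any other positives[j] in the batch. Returns the mean negative log softmax
    probability assigned to the correct partner."""
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]
    return total / len(anchors)


def trimodal_loss(images, texts, gazes, temperature=0.1):
    """Hypothetical objective: image-text plus image-gaze terms only.

    There is deliberately no text-gaze term, so any text-gaze alignment
    would have to emerge through the shared image embeddings.
    """
    return info_nce(images, texts, temperature) + info_nce(images, gazes, temperature)


# Hypothetical 2-D embeddings for a batch of two images and their partners.
images = [[1.0, 0.0], [0.0, 1.0]]
texts = [[0.9, 0.1], [0.1, 0.9]]
gazes = [[0.8, 0.2], [0.2, 0.8]]

aligned = trimodal_loss(images, texts, gazes)
mismatched = trimodal_loss(images, texts[::-1], gazes[::-1])  # swap the partners
print(aligned < mismatched)  # True: correctly paired batches get a lower loss
```

Because text and gaze embeddings are only ever pulled toward the same image embeddings, a text-gaze alignment measured after training would have to arise through the image anchor, which is the effect the proposed text-gaze evaluation would test.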

Supervisor: Ekta Sood

Distribution: 20% Literature, 20% Data Collection, 40% Implementation, 20% Data Analysis and Evaluation.

Requirements: Interest in multimodal machine learning, familiarity with data processing and analysis/statistics, experience with TensorFlow/PyTorch/Keras.

Literature: Yusuf Aytar, Carl Vondrick, and Antonio Torralba. 2017. See, hear, and read: Deep aligned representations. arXiv:1706.00932.

Hamid Izadinia, Fereshteh Sadeghi, Santosh Kumar Divvala, Yejin Choi, and Ali Farhadi. 2015. Segment-phrase table for semantic segmentation, visual entailment and paraphrasing. Proceedings of the 2015 International Conference on Computer Vision (ICCV).