MPIIDPEye: Privacy-Aware Eye Tracking Using Differential Privacy

Abstract

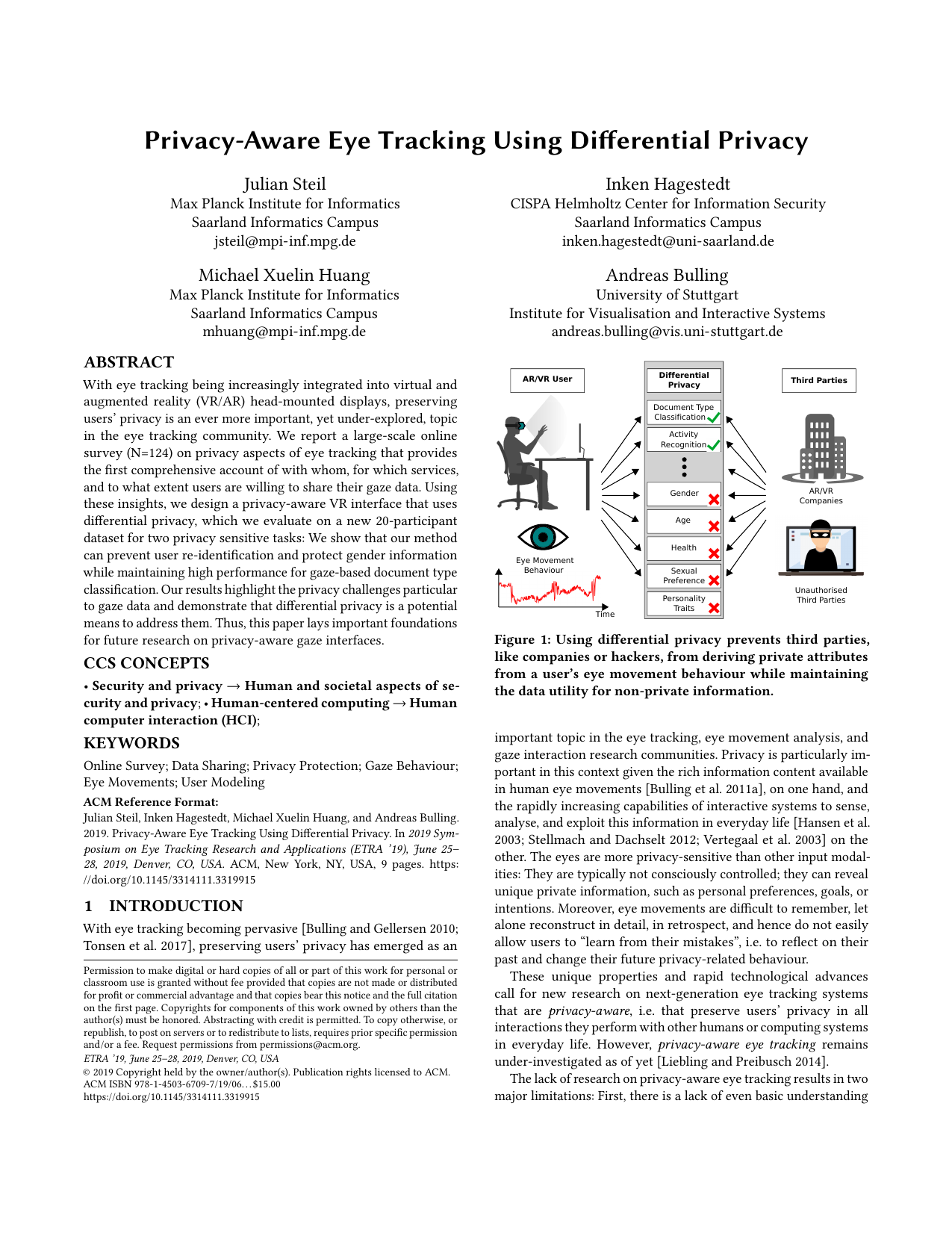

With eye tracking being increasingly integrated into virtual and augmented reality (VR/AR) head-mounted displays, preserving users’ privacy is an ever more important, yet under-explored, topic in the eye tracking community. We report a large-scale online survey (N=124) on privacy aspects of eye tracking that provides the first comprehensive account of with whom, for which services, and to which extent users are willing to share their gaze data. Using these insights, we design a privacy-aware VR interface that uses differential privacy, which we evaluate on a new 20-participant dataset for two privacy sensitive tasks: We show that our method can prevent user re-identification and protect gender information while maintaining high performance for gaze-based document type classification. Our results highlight the privacy challenges particular to gaze data and demonstrate that differential privacy is a potential means to address them. Thus, this paper lays important foundations for future research on privacy-aware gaze interfaces.

Download (64 MB)

The data is only to be used for non-commercial scientific purposes. If you use this dataset in a scientific publication, please cite the following paper:

-

Privacy-Aware Eye Tracking Using Differential Privacy

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2019.

Privacy Concerns in Eye Tracking

We conducted a large-scale online survey to shed light on users' privacy concerns related to eye tracking technology and the information that can be inferred from eye movement data. We recruited our participants via social platforms (Facebook, WeChat) and student mailing lists. The survey opened with general questions about eye tracking and VR technologies, continued with questions about future use and applications, data sharing and privacy (especially with whom users are willing to share their data), and concluded with questions about the participants' willingness to share different eye movement representations. Participants answered each question on a 7-point Likert scale (1: Strongly disagree to 7: Strongly agree). For simplicity of result visualisation, we merged the score 1 to 3 to ''Disagree'' and 5 to 7 to ''Agree''. At the end we asked for demographic information and offered a raffle.

The survey took about 20 minutes to complete, was set up as a Google Form, and was split into the parts described above. Our design ensured that participants without pre-knowledge of eye tracking and VR technology could participate as well: We provided a slide show containing information about eye tracking in general, and in VR devices specifically, and introduced the di erent forms of data representation, showing example images or explanatory texts. In our survey, 124 people (81 male, 39 female, 4 did not tick the gender box) participated, aged 21 to 66 (mean = 28.07, std = 5.89). The participants where vastly spread over the world coming from 29 different countries (Germany: 39%, India: 12%, Pakistan: 6%, Italy: 6%, China: 5%, USA: 3%). Sixty-seven percent of them had a graduate university degree (Master’s or PhD), and 22% had an un- dergraduate university degree (Bachelor’s). Fifty-one percent were students from a variety of subjects (law, language science, computer science, psychology, etc.), 34% were scientists and researchers, IT professionals (7%), or had business administration jobs (2%). Given the breadth of results, we highlight key insights most relevant for the current paper. We found nearly all answers for the provided questions in Figures 2, 3, and 4 to be signi cantly different from equal distribution tested with Pearson’s chi-squared test (p < 0.001, dof = 6). Detailed numbers, plots, and significance and skewness test results can be found in the supplementary material.

The raw survey data can be found here.

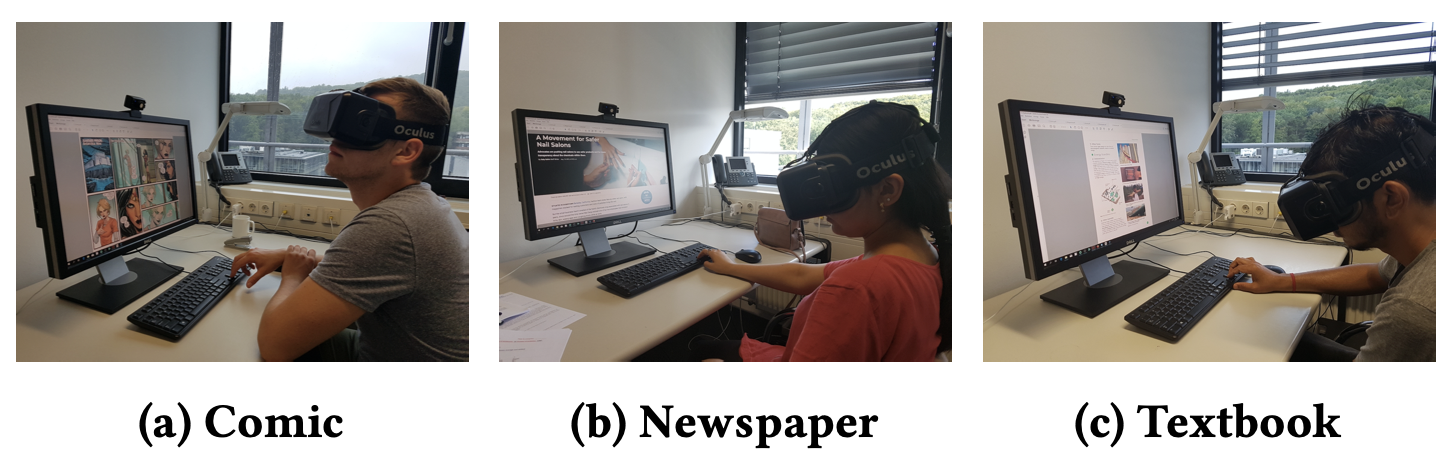

Data Collection

Given the lack of a suitable dataset for evaluating privacy- preserving eye tracking using differential privacy, we recorded our own dataset. As a utility task, we opted to detect different document types the users’ read, similar to a reading assistant [Kunze et al. 2013b]. Instead of printed documents, participants read in VR, wearing a corresponding headset. The recording of a single participant consists of three separate recording sessions, in which a participant reads one out of three different documents: comic, online newspaper, and textbook. All documents include a varying rate of text and images. Each of these documents was about a 10 minute read, depending on a user’s reading skill (about 30 minutes in total).

Participants. We recruited 20 participants (10 male, 10 female) aged 21 to 45 years through university mailing lists and adverts in different university buildings on campus. Most participants were BSc and MSc students from a large range of subjects (e.g. language science, psychology, business administration, computer science) and different countries (e.g. India, Pakistan, Germany, Italy). All participants had no, or only minor experience, with eye tracking studies and had normal or corrected-to-normal vision (contact lenses).

Apparatus. The recording system consisted of a desktop computer running Windows 10, a 24" computer screen, and an Oculus DK2 virtual reality headset connected to the computer via USB. We fitted the headset with a Pupil eye tracking add-on [Kassner et al. 2014] that provides state-of-the-art eye tracking capabilities. To have more flexibility in the applications used by the participants in the study, we opted for the Oculus ''Virtual Desktop'' that shows arbitrary application windows in the virtual environment. To record a user’s eye movement data, we used the capture software provided by Pupil. We recorded a separate video from each eye and each document. Participants used the mouse to start and stop the document interaction and were free to read the documents in arbitrary order. We encouraged participants to read at their usual speed and did not tell them what exactly we were measuring.

Recording Procedure. After arriving at the lab, participants were given time to familiarise themselves with the VR system. We showed each participant how to behave in the VR environment, given that most of them had never worn a VR headset before. We did not calibrate the eye tracker but only analysed users’ eye movements from the eye videos post hoc. This was to not make participants feel observed and to be able to record natural eye movement behaviour. Before starting the actual recording, we asked participants to sign a consent form. Participants then started to interact with the VR interface in which they were asked to read three documents floating in front of them. After finishing reading a document, the experimental assistant stopped and saved the recording and asked participants questions on their current level of fatigue, whether they liked and understood the document, and whether they found the document difficult using a 5-point Likert scale (1: Strongly disagree to 5: Strongly agree). Participants were further asked five questions about each document to measure their text understanding. The VR headset was kept on throughout the recording. After the recording, we asked participants to complete a questionnaire on demographics and any vision impairments. We also assessed their Big Five personality traits [John and Srivastava 1999] using established questionnaires from psychology. In this work we only use the given ground truth information of a user’s gender from all collected (private) information, the document type, and IDs we assigned to each participant, respectively.

The dataset consists of a .zip file with two folders (Eye_Tracking_Data and Eye_Movement_Features), a .csv file with the ground truth annotation (Ground_Truth.csv) and a Readme.txt file. In each folder there are two files for participant (P) for each recording (R = document class). These two files contain the recorded eye tracking data and the corresponding eye movement features. The data is saved as a .npy and .csv file. The data scheme of the eye tracking data and eye movement features is given in this Readme.txt file .