3DGazeSim: 3D Gaze Estimation from 2D Pupil Positions on Monocular Head-Mounted Eye Trackers

Abstract

3D gaze information is important for scene-centric attention analysis, but accurate estimation and analysis of 3D gaze in real-world environments remains challenging. We present a novel 3D gaze estimation method for monocular head-mounted eye trackers. In contrast to previous work, our method does not aim to infer 3D eyeball poses, but directly maps 2D pupil positions to 3D gaze directions in scene camera coordinate space. We first provide a detailed discussion of the 3D gaze estimation task and summarize different methods, including our own. We then evaluate the performance of different 3D gaze estimation approaches using both simulated and real data. Through experimental validation, we demonstrate the effectiveness of our method in reducing parallax error, and we identify research challenges for the design of 3D calibration procedures.

Download (82 Mb)

The data is only to be used for non-commercial scientific purposes. If you use this dataset in a scientific publication, please cite the following paper:

3D Gaze Estimation from 2D Pupil Positions on Monocular Head-Mounted Eye Trackers

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 197-200, 2016.

Simulation Environment

You can access the simulation environment via git:

git clone https://git.hcics.simtech.uni-stuttgart.de/public-projects/gazesim

Our simulation environment enables users to calibrate and test the mapping approaches discussed in the paper on different formations of points. Parameters used in the simulation environment, such as the distance between points, the camera intrinsics, and the calibration and test depths, are selected in such a way that they match our measurements in the real-world study. While it is possible to visualize the objects in the environment, you can also simply run the tests with no visualization. The framework is written in Python and uses VPython for visualization. More information about this environment and how to use it can be found on the project's GitLab page.

Data Collection

We evaluated our approach on both real-world and simulation data. Using the simulation framework, we repeated the experiments from the real-world study to investigate the limitations of each approach and to see how far the results matched what we would expect. Calibration and test data were collected from 14 participants at 5 different depths (1 m, 1.25 m, 1.5 m, 1.75 m and 2 m) from a 121.5 cm × 68.7 cm public display. We used a 5x5 grid pattern to display 25 calibration points and an inner 4x4 grid to display 16 test points. This was done by moving a target point across these grid positions and capturing eye and scene images from the eye and scene cameras at 30 Hz. We further performed marker detection on the target points using the ArUco library [1] to compute their 3D coordinates w.r.t. the scene camera. In addition, we were given the 2D position of the pupil center in each frame of the eye camera by a PUPIL head-mounted eye tracker [2]. For more information and details, please refer to section 3 in the paper.
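The grid layout described above can be sketched in a few lines of Python. This is only an illustration: the display size and grid dimensions come from the text, but the exact point placement is an assumption. Here the calibration grid is assumed to span the full display, and the test points are assumed to sit at the centers of the cells formed by the calibration grid. The helper `grid_points` is a hypothetical name and not part of the released framework.

```python
import numpy as np

# Display size stated in the text, in centimeters.
DISPLAY_W_CM, DISPLAY_H_CM = 121.5, 68.7


def grid_points(n_cols, n_rows, x_min, x_max, y_min, y_max):
    """Return an (n_rows * n_cols, 2) array of evenly spaced grid points."""
    xs = np.linspace(x_min, x_max, n_cols)
    ys = np.linspace(y_min, y_max, n_rows)
    gx, gy = np.meshgrid(xs, ys)
    return np.column_stack([gx.ravel(), gy.ravel()])


# Assumption: the 5x5 calibration grid spans the whole display.
calibration = grid_points(5, 5, 0.0, DISPLAY_W_CM, 0.0, DISPLAY_H_CM)

# Assumption: the inner 4x4 test grid lies at the centers of the
# cells formed by neighboring calibration points.
dx, dy = DISPLAY_W_CM / 4, DISPLAY_H_CM / 4
test = grid_points(4, 4, dx / 2, DISPLAY_W_CM - dx / 2,
                   dy / 2, DISPLAY_H_CM - dy / 2)

print(calibration.shape, test.shape)
```

With this placement no test point coincides with a calibration point, so the test set measures how well a mapping interpolates between calibration positions.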

The data was collected in an indoor setting and adds up to over 7 hours of eye tracking. The current dataset includes per-frame ArUco marker tracking results for every recording, along with per-frame pupil tracking results from the PUPIL eye tracker for every eye video. We have also included the camera intrinsic parameters for both the eye camera and the scene camera, along with some post-processed results such as the frames corresponding to gaze intervals for every grid point. For more information on the data format and how to use it, please refer to the README file inside the dataset. If you want to access the raw videos from the scene and eye cameras, please contact the authors.

Scene Camera Images: Images have a resolution of 1280x720 pixels. The marker pattern contains a red cross in the middle to attract the user's attention. The location of the center of this cross is used as the ground-truth target position.

Eye Camera Images: Images have a resolution of 640x360 pixels.

For every user, we recorded data at each depth once for calibration points and once for test points, i.e. there are 10 recordings per user in total. Due to the high resolution of the recordings, our dataset including the raw images adds up to nearly 30 GB. However, the data obtained after marker tracking and pupil detection is available from the "Download" section. More information about the format of the dataset can be found in the README file inside the dataset zip file.

Method

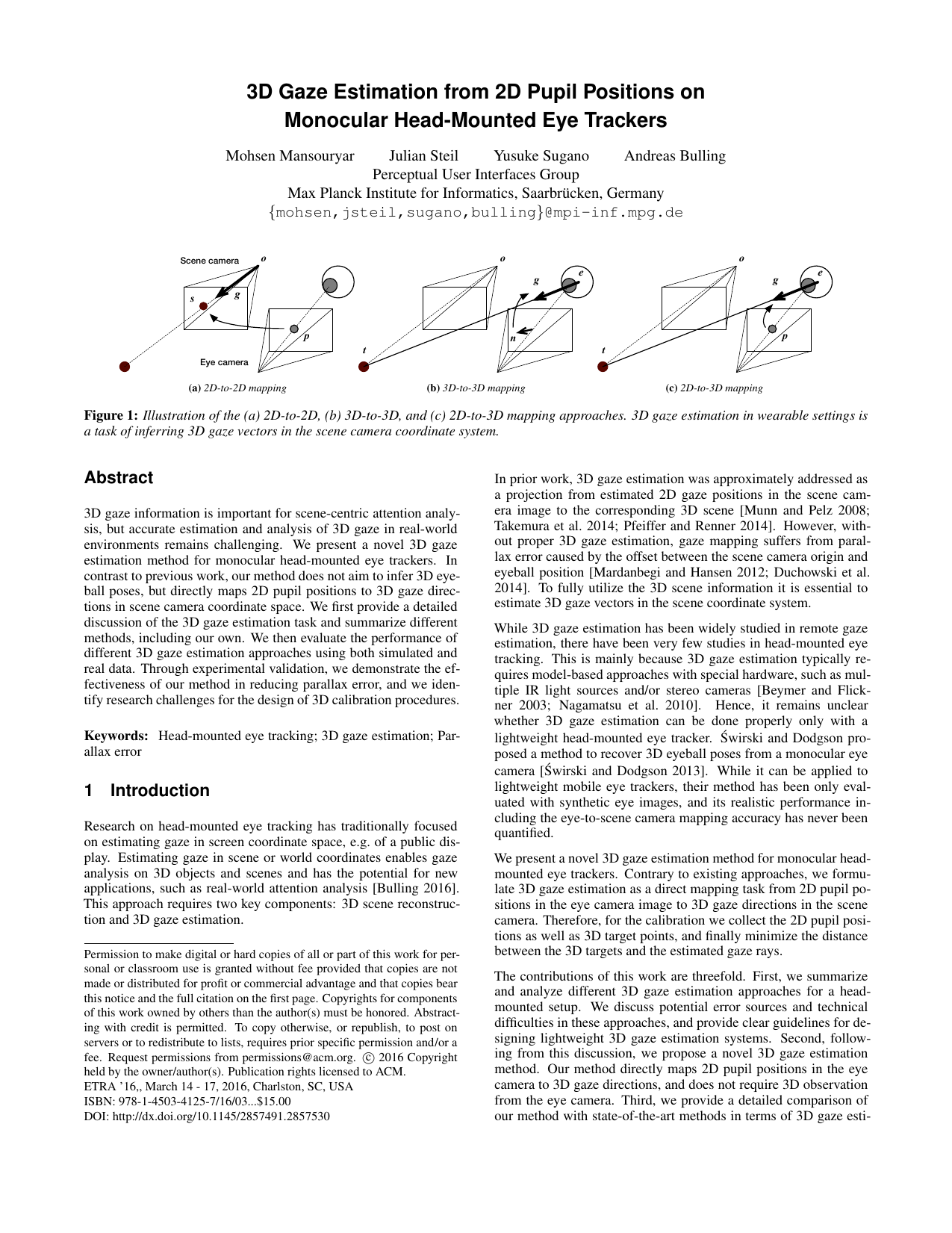

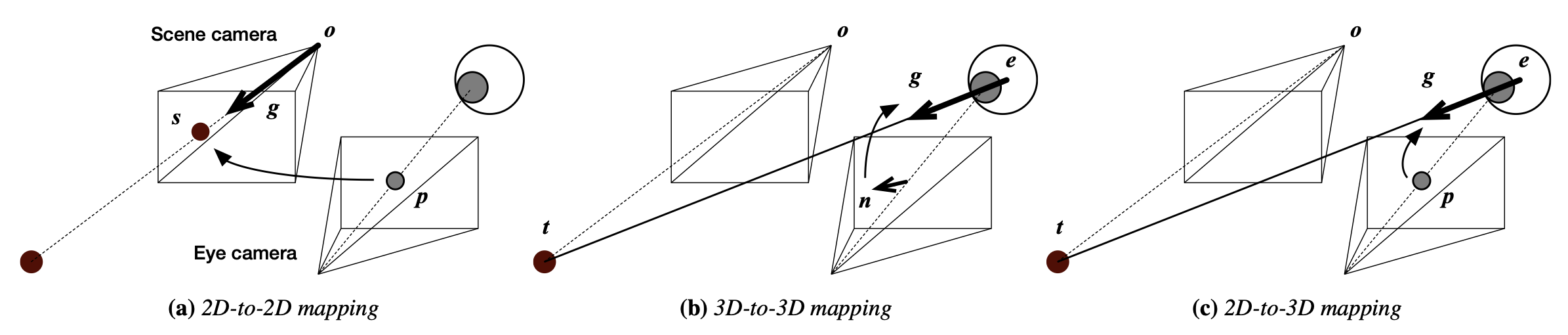

Given the pupil center in eye images and the target points in scene camera images, we can map one to the other using polynomial regression. This 2D-to-2D approach, however, suffers from parallax error [3]. In this work, we investigate two alternative mappings that can potentially give better gaze estimates, and we examine how well they cope with parallax error. In 2D-to-3D mapping, the target points in the scene also carry depth information; it can be thought of as back-projecting the 2D target points into the scene. In 3D-to-3D mapping, we additionally have the 3D pose of the eyeball in eye camera coordinates; this can be thought of as keeping the 3D information about the pupil that is lost during perspective projection. For more information on these mapping approaches, please refer to section 2 in the paper. Interested readers are advised to refer to the Appendix of our arXiv paper for a deeper understanding of the details.
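As a rough illustration of the regression idea, the following sketch fits a polynomial mapping from 2D pupil positions to targets with ordinary least squares. It is not the implementation used in the paper or in the simulation environment: the second-order feature set, the function names `poly_features`, `fit_mapping` and `predict_directions`, and the synthetic data are all assumptions made for this example. Passing 2D targets gives a 2D-to-2D mapping; passing 3D target points in scene camera coordinates gives a 2D-to-3D mapping whose output is normalized to a gaze direction.

```python
import numpy as np


def poly_features(pupil_xy):
    """Second-order polynomial features of 2D pupil positions, shape (N, 6).

    Assumption: the polynomial order is chosen for illustration only.
    """
    x, y = pupil_xy[:, 0], pupil_xy[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])


def fit_mapping(pupil_xy, targets):
    """Least-squares fit from pupil features to (N, 2) or (N, 3) targets."""
    coeffs, *_ = np.linalg.lstsq(poly_features(pupil_xy), targets, rcond=None)
    return coeffs


def predict_directions(coeffs, pupil_xy):
    """Map pupil positions to unit-length 3D gaze directions."""
    pred = poly_features(pupil_xy) @ coeffs
    return pred / np.linalg.norm(pred, axis=1, keepdims=True)


# Synthetic example (hypothetical data, not taken from the dataset).
rng = np.random.default_rng(0)
pupil_xy = rng.uniform(0.0, 1.0, size=(25, 2))   # 25 calibration samples
true_coeffs = rng.normal(size=(6, 3))            # made-up ground truth
targets_3d = poly_features(pupil_xy) @ true_coeffs

coeffs = fit_mapping(pupil_xy, targets_3d)
directions = predict_directions(coeffs, pupil_xy)
print(np.allclose(coeffs, true_coeffs), directions.shape)
```

Fitting against 3D targets from several depths, rather than 2D image points, is what lets such a mapping output a direction in scene camera space instead of a single image location.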

Evaluation and Results

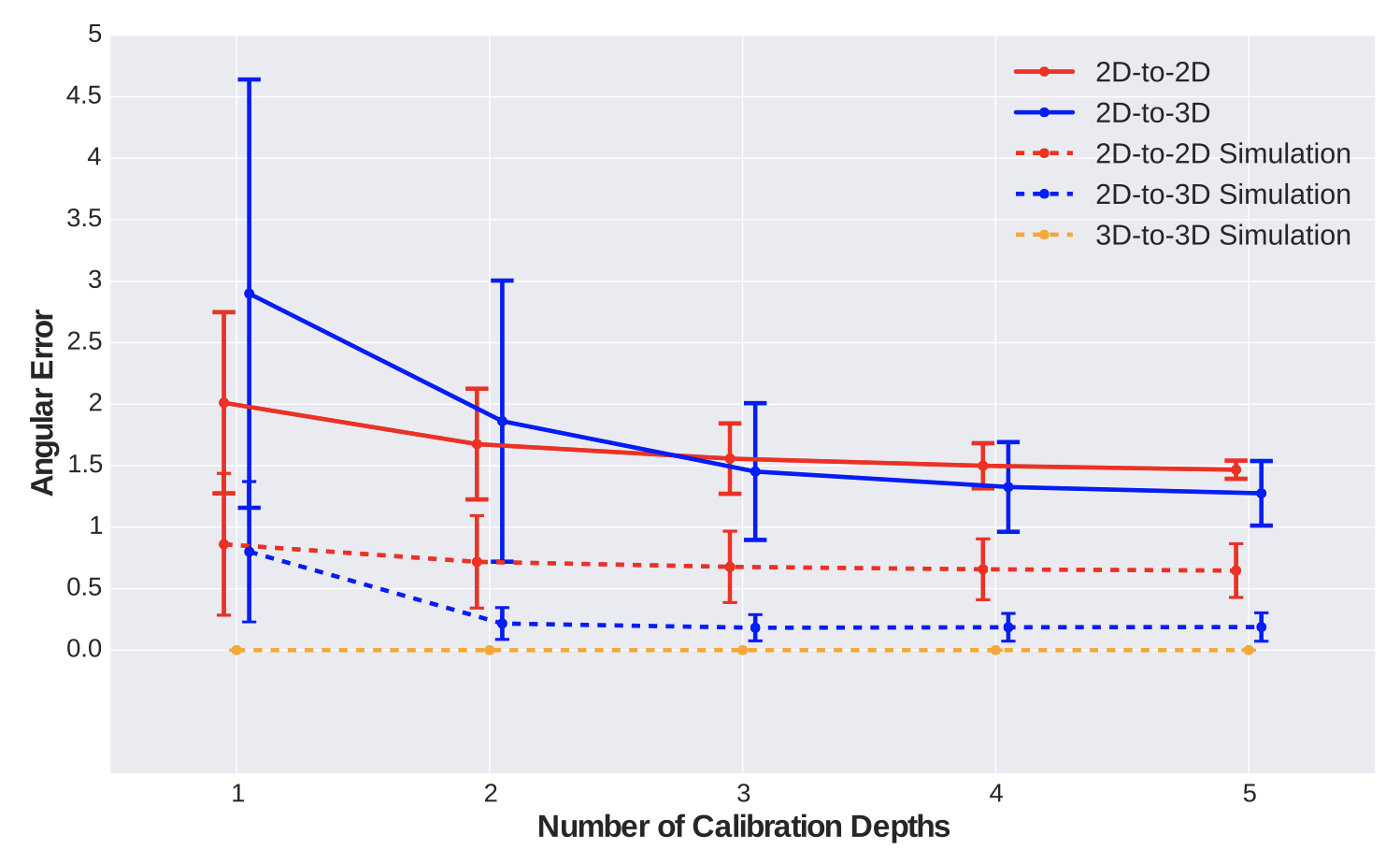

Our results show that, compared to the baseline 2D-to-2D approach, 2D-to-3D mapping can give better gaze estimation accuracy when calibration data from multiple depths are used. This observation is supported by both simulation and real-world data. Our simulation results also suggest that, given the accurate pose of the eyeball in the eye coordinate system, 3D-to-3D mapping does not suffer from parallax error. However, we find it still challenging to obtain the same results in the real world. For more information on how we evaluate the different mapping approaches, please refer to "Error Measurement" in section 3 of the paper.
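A common way to compare such mappings is the angle between the estimated and the ground-truth gaze direction. The sketch below assumes that kind of angular error measure; the exact definition used in the paper is given in its "Error Measurement" section and may differ. The function name `angular_error_deg` is hypothetical.

```python
import numpy as np


def angular_error_deg(pred, truth):
    """Angle in degrees between predicted and ground-truth 3D directions.

    Both inputs may be single vectors of shape (3,) or batches of shape
    (N, 3); they do not need to be normalized.
    """
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    pred = pred / np.linalg.norm(pred, axis=-1, keepdims=True)
    truth = truth / np.linalg.norm(truth, axis=-1, keepdims=True)
    # Clip to guard against rounding slightly outside [-1, 1].
    cos = np.clip(np.sum(pred * truth, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


# Perpendicular directions are 90 degrees apart.
print(angular_error_deg([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))
```

Because the inputs are normalized first, the measure depends only on direction, so a target at 1 m and the same target direction at 2 m yield the same error.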

References

[1] Garrido-Jurado, S., et al. "Automatic generation and detection of highly reliable fiducial markers under occlusion." Pattern Recognition 47.6 (2014): 2280-2292.

[2] Kassner, Moritz, William Patera, and Andreas Bulling. "Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction." Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. ACM, 2014.

[3] Mardanbegi, Diako, and Dan Witzner Hansen. "Parallax error in the monocular head-mounted eye trackers." Proceedings of the 2012 ACM Conference on Ubiquitous Computing. ACM, 2012.