Eye tracking for public displays in the wild

Yanxia Zhang, Ming Ki Chong, Jörg Müller, Andreas Bulling, Hans Gellersen

Springer Personal and Ubiquitous Computing, 19(5), pp. 967-981, 2015.

Abstract

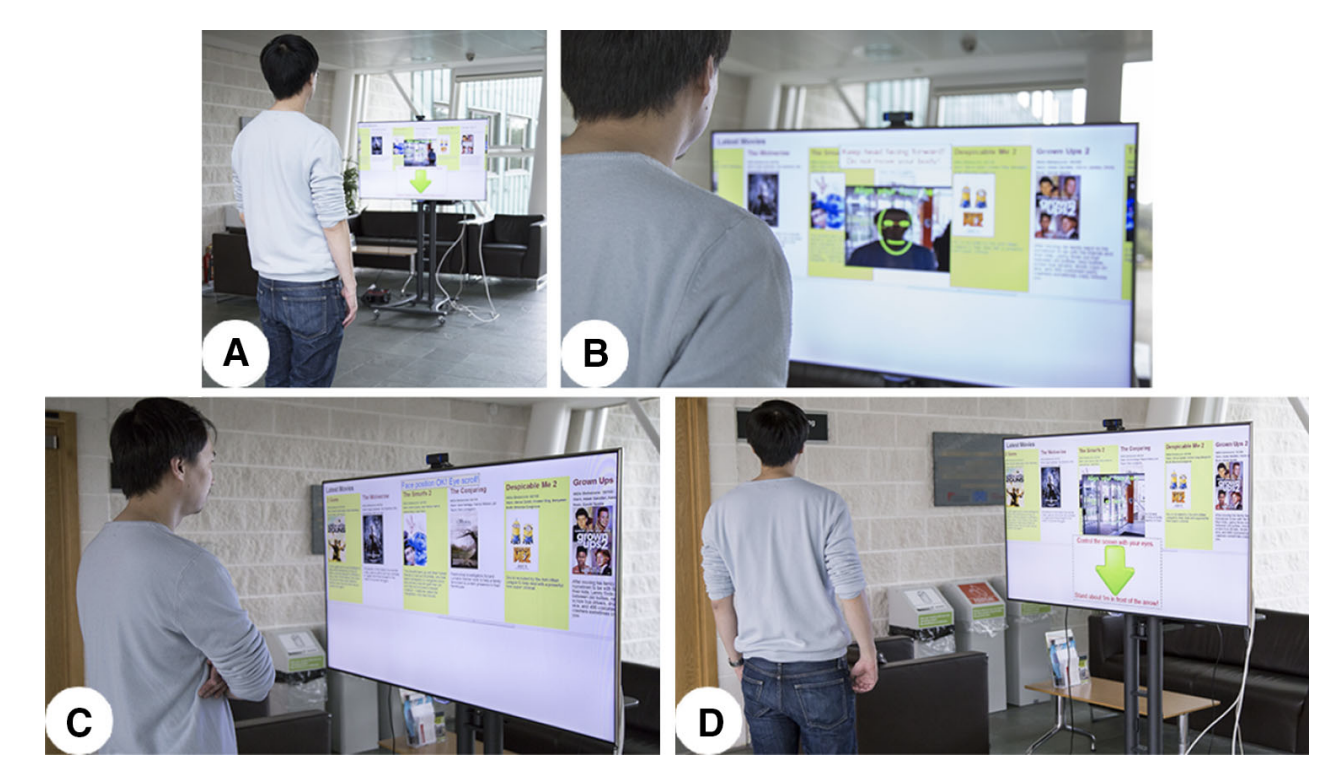

In public display contexts, interactions are spontaneous and have to work without preparation. We propose gaze as a modality for such contexts, as gaze is always at the ready, and a natural indicator of the user’s interest. We present GazeHorizon, a system that demonstrates spontaneous gaze interaction, enabling users to walk up to a display and navigate content using their eyes only. GazeHorizon is extemporaneous and optimised for instantaneous usability by any user without prior configuration, calibration or training. The system provides interactive assistance to bootstrap gaze interaction with unaware users, employs a single off-the-shelf web camera and computer vision for person-independent tracking of the horizontal gaze direction, and maps this input to rate-controlled navigation of horizontally arranged content. We have evaluated GazeHorizon through a series of field studies, culminating in a four-day deployment in a public environment during which over a hundred passers-by interacted with it, unprompted and unassisted. We realised that since eye movements are subtle, users cannot learn gaze interaction from only observing others, and as a result guidance is required.

Links

doi: 10.1007/s00779-015-0866-8

Paper: zhang15_puc.pdf

BibTeX

@article{zhang15_puc,
  title = {Eye tracking for public displays in the wild},
  author = {Zhang, Yanxia and Chong, Ming Ki and M\"uller, J\"org and Bulling, Andreas and Gellersen, Hans},
  year = {2015},
  doi = {10.1007/s00779-015-0866-8},
  pages = {967-981},
  volume = {19},
  number = {5},
  journal = {Springer Personal and Ubiquitous Computing},
  keywords = {Eye tracking; Gaze interaction; Public displays; Scrolling; Calibration-free; In-the-wild study; Deployment}
}