Eye Drop: An Interaction Concept for Gaze-Supported Point-to-Point Content Transfer

Jayson Turner, Andreas Bulling, Jason Alexander, Hans Gellersen

Proc. International Conference on Mobile and Ubiquitous Multimedia (MUM), pp. 1–4, 2013.

Abstract

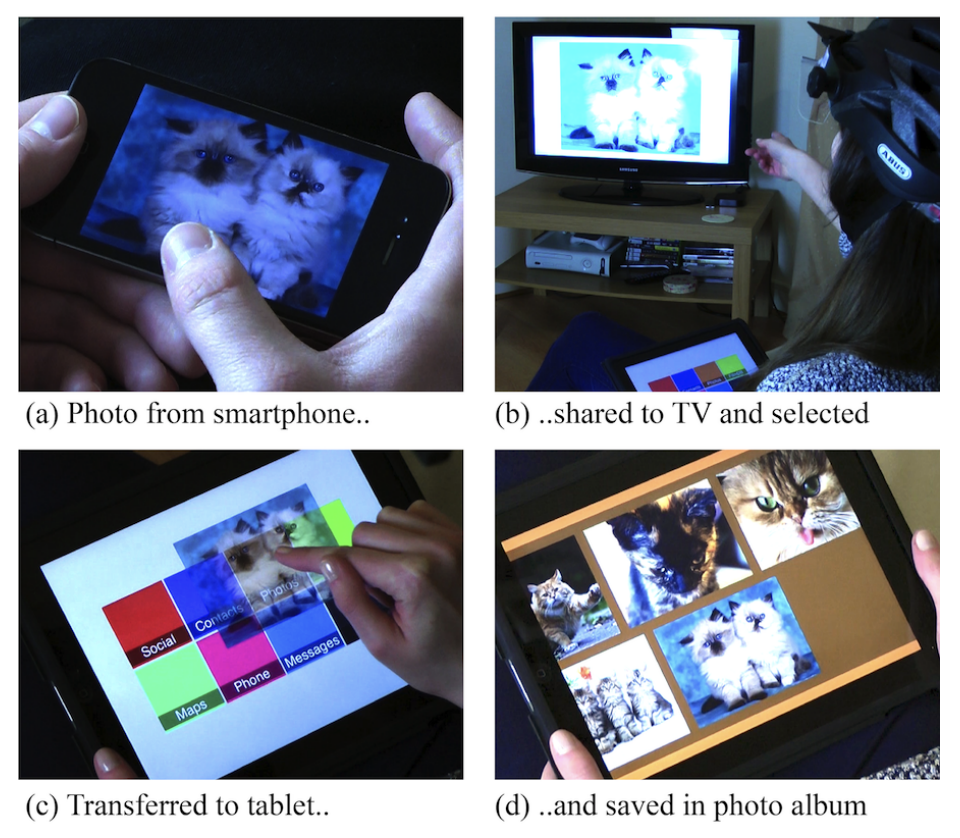

The shared displays in our environment contain content that we desire. Furthermore, we often acquire content for a specific purpose, i.e., the acquisition of a phone number to place a call. We have developed a content transfer concept, Eye Drop. Eye Drop provides techniques that allow fluid content acquisition, transfer from shared displays, and local positioning on personal devices using gaze combined with manual input. The eyes naturally focus on content we desire. Our techniques use gaze to point remotely, removing the need for explicit pointing on the user’s part. A manual trigger from a personal device confirms selection. Transfer is performed using gaze or manual input to smoothly transition content to a specific location on a personal device. This work demonstrates how techniques can be applied to acquire and apply actions to content through a natural sequence of interaction. We demonstrate a proof of concept prototype through five implemented application scenarios.

Links

Paper: turner13_mum.pdf

BibTeX

@inproceedings{turner13_mum,
  author = {Turner, Jayson and Bulling, Andreas and Alexander, Jason and Gellersen, Hans},
  title = {Eye Drop: An Interaction Concept for Gaze-Supported Point-to-Point Content Transfer},
  booktitle = {Proc. International Conference on Mobile and Ubiquitous Multimedia (MUM)},
  year = {2013},
  pages = {1--4},
  doi = {10.1145/2541831.2541868}
}