Recognition of Hearing Needs From Body and Eye Movements to Improve Hearing Instruments

Bernd Tessendorf, Andreas Bulling, Daniel Roggen, Thomas Stiefmeier, Manuela Feilner, Peter Derleth, Gerhard Tröster

Proc. International Conference on Pervasive Computing (Pervasive), pp. 314-331, 2011.

Abstract

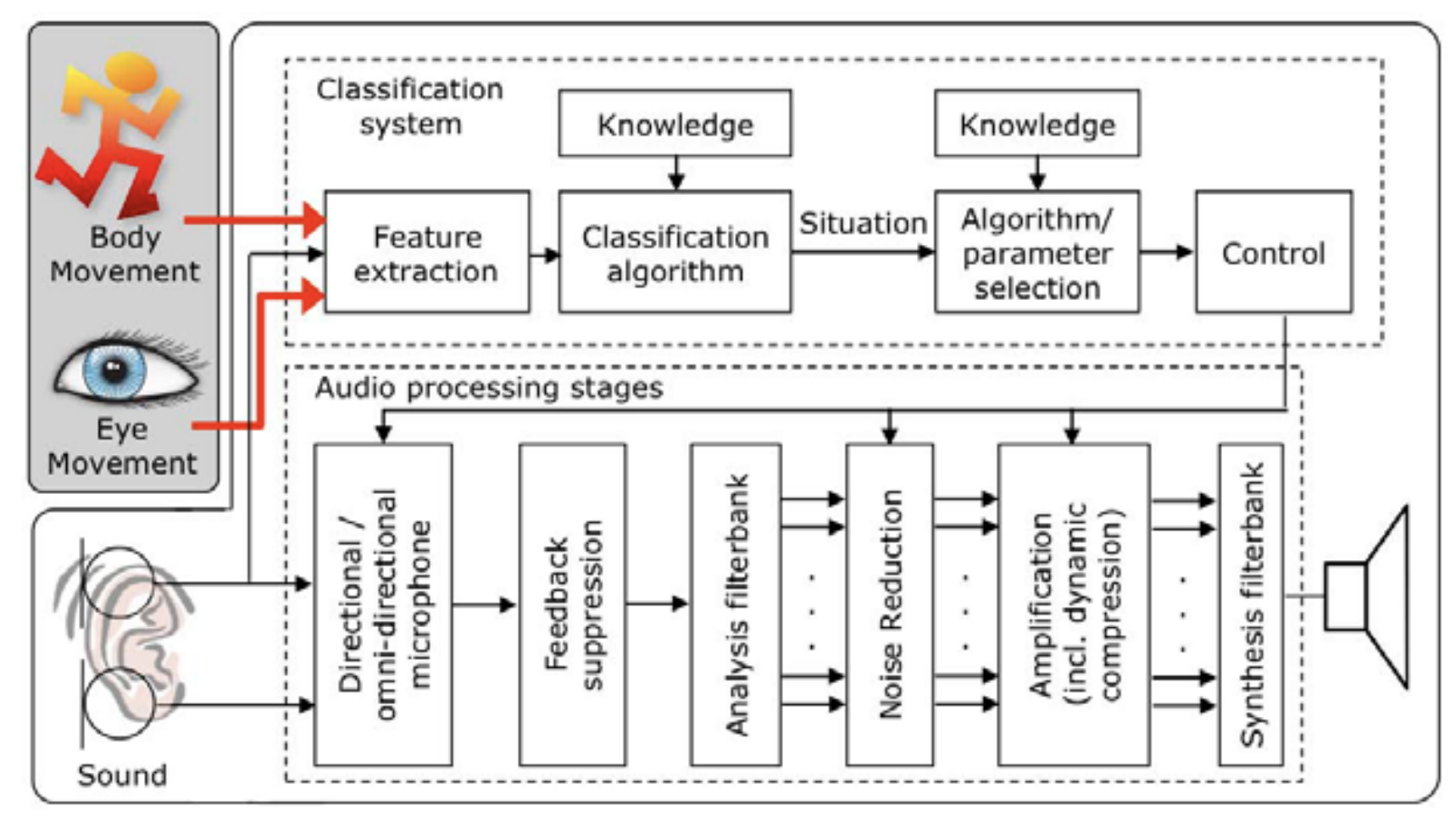

Hearing instruments (HIs) have emerged as true pervasive computers, as they continuously adapt the hearing program to the user’s context. However, current HIs cannot distinguish between different hearing needs in the same acoustic environment. In this work, we explore how information derived from body and eye movements can improve the recognition of such hearing needs. We conduct an experiment that creates an acoustic environment in which different hearing needs arise: active conversation, and working while colleagues hold a conversation, both in a noisy office. For eleven participants, we record body movements at nine body locations, eye movements using electrooculography (EOG), and sound using commercial HIs. Using a support vector machine (SVM) classifier with person-independent training, we improve recognition accuracy from 77% using sound to 92% using body movements. With a view to a future implementation in an HI, we then perform a detailed analysis of the sensors attached to the head. Here, eye movements achieve the best accuracy of 86%, compared to 84% for head movements. Our work demonstrates the potential of additional sensor modalities for future HIs and motivates investigating the wider applicability of this approach to further hearing situations and needs.
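
The evaluation protocol named in the abstract, an SVM classifier with person-independent training, can be illustrated with a minimal sketch. It assumes that person-independent training means leave-one-participant-out cross-validation (train on all participants but one, test on the held-out one). The classifier here is a plain linear SVM trained with a Pegasos-style subgradient method, which is a swapped-in stand-in and not necessarily the SVM implementation, kernel, or features used by the authors. The labels (+1 for active conversation, -1 for working) and the two-dimensional synthetic features produced by the hypothetical `make_toy_data` helper are illustrative only and say nothing about the paper's results; `lam` and `epochs` are arbitrary illustrative values.

```python
import random
from collections import defaultdict


def _augment(x):
    # Append a constant 1 so the last weight acts as a (regularised) bias term.
    return list(x) + [1.0]


def predict(w, x):
    # Sign of the linear decision function: +1 (conversation) or -1 (working).
    score = sum(wj * xj for wj, xj in zip(w, _augment(x)))
    return 1 if score >= 0 else -1


def train_linear_svm(samples, labels, lam=0.01, epochs=20, seed=0):
    """Pegasos-style subgradient descent on hinge loss + L2 penalty.

    Stand-in for whatever SVM the study used; labels must be +1 / -1.
    """
    rng = random.Random(seed)
    data = [_augment(x) for x in samples]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            x, y = data[i], labels[i]
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            # Regularisation step: shrink all weights.
            w = [(1.0 - eta * lam) * wj for wj in w]
            if margin < 1.0:
                # Hinge loss active: move towards the low-margin sample.
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def leave_one_participant_out(samples, labels, participants, **svm_kwargs):
    """Person-independent evaluation: one fold per held-out participant."""
    by_person = defaultdict(list)
    for i, p in enumerate(participants):
        by_person[p].append(i)
    fold_acc = {}
    for held_out, test_idx in sorted(by_person.items()):
        test_set = set(test_idx)
        train_idx = [i for i in range(len(samples)) if i not in test_set]
        w = train_linear_svm([samples[i] for i in train_idx],
                             [labels[i] for i in train_idx], **svm_kwargs)
        correct = sum(predict(w, samples[i]) == labels[i] for i in test_idx)
        fold_acc[held_out] = correct / len(test_idx)
    return fold_acc


def make_toy_data(n_participants=6, per_class=10, seed=1):
    """Hypothetical synthetic features; NOT data from the study."""
    rng = random.Random(seed)
    X, y, pid = [], [], []
    for p in range(n_participants):
        offset = rng.uniform(-0.5, 0.5)  # per-person shift (inter-subject variability)
        for label, centre in ((1, 2.0), (-1, -2.0)):
            for _ in range(per_class):
                X.append([centre + offset + rng.gauss(0, 0.5),
                          centre + offset + rng.gauss(0, 0.5)])
                y.append(label)
                pid.append(p)
    return X, y, pid


if __name__ == "__main__":
    X, y, pid = make_toy_data()
    acc = leave_one_participant_out(X, y, pid)
    print(acc)
    print("mean accuracy:", sum(acc.values()) / len(acc))
```

Holding out whole participants, rather than random samples, ensures that no data from the test person is seen during training, which is what makes the reported accuracy person-independent.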

BibTeX

@inproceedings{tessendorf11_pervasive,
  author = {Tessendorf, Bernd and Bulling, Andreas and Roggen, Daniel and Stiefmeier, Thomas and Feilner, Manuela and Derleth, Peter and Tr{\"{o}}ster, Gerhard},
  title = {Recognition of Hearing Needs From Body and Eye Movements to Improve Hearing Instruments},
  booktitle = {Proc. International Conference on Pervasive Computing (Pervasive)},
  year = {2011},
  pages = {314--331},
  doi = {10.1007/978-3-642-21726-5_20}
}