Predicting the Category and Attributes of Mental Pictures Using Deep Gaze Pooling

Hosnieh Sattar, Andreas Bulling, Mario Fritz

arXiv:1611.10162, pp. 1–14, 2016.

Abstract

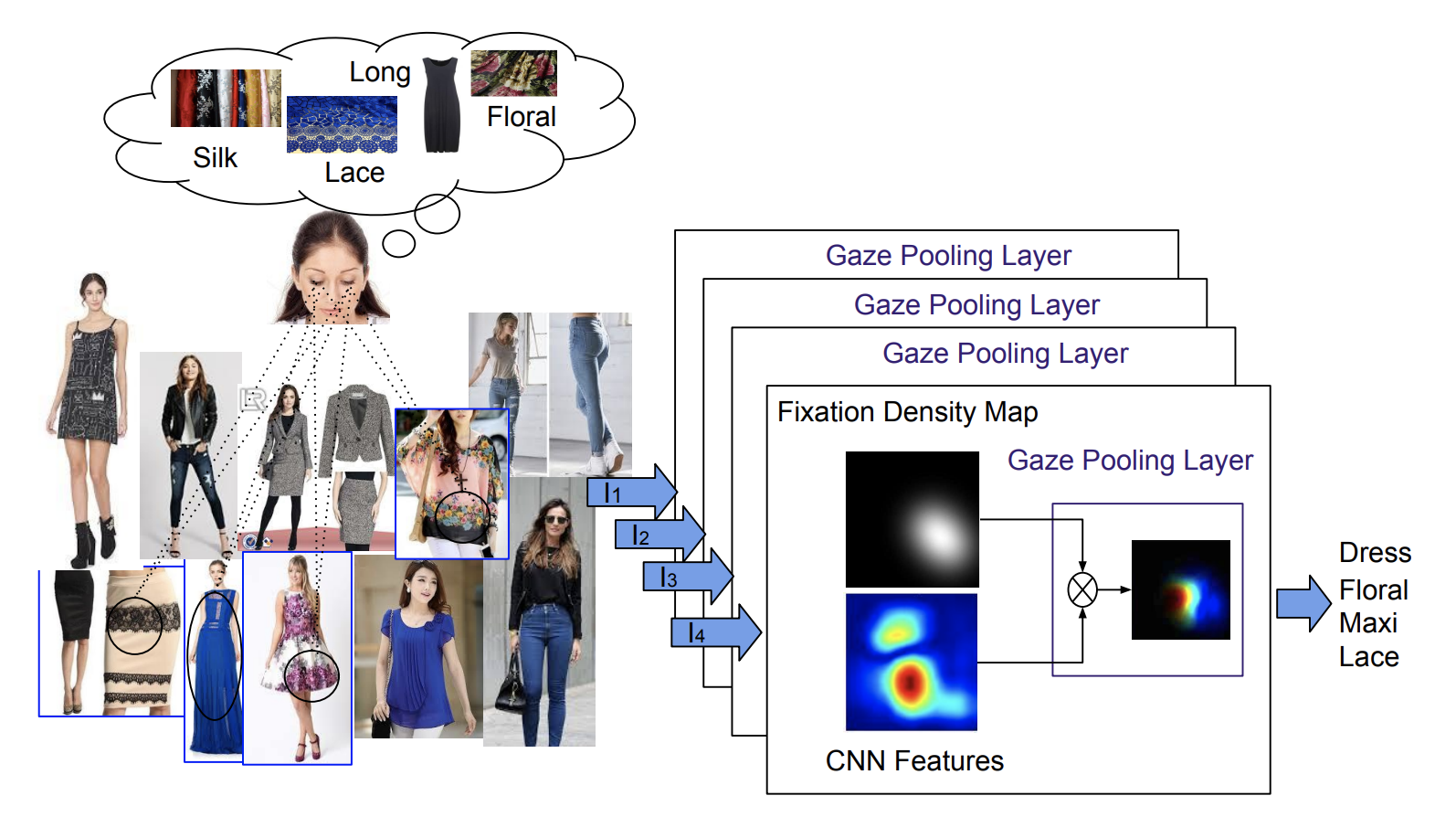

Predicting the target of visual search from eye fixation (gaze) data is a challenging problem with many applications in human-computer interaction. In contrast to previous work that has focused on individual instances as a search target, we propose the first approach to predict categories and attributes of search targets based on gaze data. However, state-of-the-art models for categorical recognition, in general, require large amounts of training data, which is prohibitive for gaze data. To address this challenge, we propose a novel Gaze Pooling Layer that integrates gaze information into CNN-based architectures as an attention mechanism, incorporating both spatial and temporal aspects of human gaze behavior. We show that our approach is effective even when the gaze pooling layer is added to an already trained CNN, thus eliminating the need for expensive joint data collection of visual and gaze data. We propose an experimental setup and data set and demonstrate the effectiveness of our method for search target prediction based on gaze behavior. We further study how to integrate temporal and spatial gaze information most effectively, and indicate directions for future research in the gaze-based prediction of mental states.

Links

Paper: sattar16_arxiv.pdf

Paper Access: https://arxiv.org/abs/1611.10162

BibTeX

@techreport{sattar16_arxiv,
  title = {Predicting the Category and Attributes of Mental Pictures Using Deep Gaze Pooling},
  author = {Sattar, Hosnieh and Bulling, Andreas and Fritz, Mario},
  year = {2016},
  pages = {1--14},
  url = {https://arxiv.org/abs/1611.10162}
}