Concept for Using Eye Tracking in a Head-mounted Display to Adapt Rendering to the User’s Current Visual Field

Daniel Pohl, Xucong Zhang, Andreas Bulling, Oliver Grau

Proc. of the 22nd ACM Conference on Virtual Reality Software and Technology (VRST), pp. 323-324, 2016.

Abstract

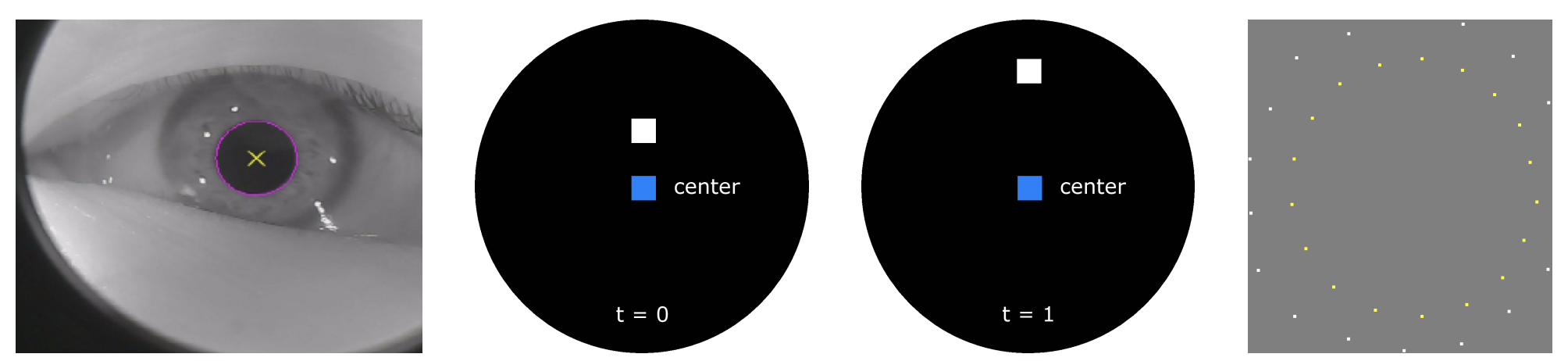

With increasing spatial and temporal resolution in head-mounted displays (HMDs), using eye trackers to adapt rendering to the user is becoming important for handling the rendering workload. Besides methods like foveated rendering, we propose to render according to the user's current visual field, which depends on the eye gaze. We exploit two effects for performance optimization. First, we noticed a lens defect in HMDs: depending on the distance of the eye gaze from the center, certain parts of the screen towards the edges are no longer visible. Second, if the user looks up, the lower parts of the screen are no longer visible. For these invisible areas, we propose to skip rendering and to reuse the pixel colors from the previous frame. We provide a calibration routine to measure these two effects. Applying the current visual field to a renderer yields speed-ups of up to 2x.
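A minimal Python sketch of the per-pixel decision described above. The visibility model is an assumption for illustration only: the abstract does not give the shape of the visible region, so `VisualFieldModel`, its parameters (`base_radius`, `radius_falloff`, `vertical_reach`), and the linear formulas in `is_visible` are hypothetical stand-ins for what the calibration routine would measure. Pixels judged invisible for the current gaze are not shaded and keep their color from the previous frame.

```python
from dataclasses import dataclass
from math import hypot
from typing import Callable, List, Tuple

Color = Tuple[int, int, int]
Frame = List[List[Color]]


@dataclass
class VisualFieldModel:
    """Hypothetical per-user parameters, standing in for calibration results."""
    base_radius: float = 1.5     # visible radius (normalized units) at central gaze
    radius_falloff: float = 0.5  # radius lost per unit of gaze eccentricity
    vertical_reach: float = 1.2  # how far below the gaze point is still visible


def is_visible(model: VisualFieldModel, px: float, py: float,
               gx: float, gy: float) -> bool:
    """Decide whether screen point (px, py) is visible for gaze (gx, gy).

    Coordinates are normalized to [-1, 1], with y = +1 at the top.
    """
    # Effect 1 (assumed model): the visible disc around the screen center
    # shrinks as the gaze moves away from the center.
    radius = model.base_radius - model.radius_falloff * hypot(gx, gy)
    if hypot(px, py) > radius:
        return False
    # Effect 2 (assumed model): looking up hides the lowest part of the screen.
    return py >= gy - model.vertical_reach


def render_frame(prev: Frame, gaze: Tuple[float, float],
                 model: VisualFieldModel,
                 shade: Callable[[int, int], Color]) -> Tuple[Frame, int]:
    """Shade only visible pixels; reuse previous-frame colors elsewhere.

    Returns the new frame and the number of pixels that were shaded.
    """
    height, width = len(prev), len(prev[0])
    gx, gy = gaze
    frame = [row[:] for row in prev]  # start from a copy of the previous frame
    shaded = 0
    for y in range(height):
        py = 1.0 - 2.0 * (y + 0.5) / height  # row 0 is the top of the screen
        for x in range(width):
            px = 2.0 * (x + 0.5) / width - 1.0
            if is_visible(model, px, py, gx, gy):
                frame[y][x] = shade(x, y)
                shaded += 1
    return frame, shaded


if __name__ == "__main__":
    previous = [[(0, 0, 0)] * 8 for _ in range(8)]
    _, count = render_frame(previous, (0.0, 0.8), VisualFieldModel(),
                            lambda x, y: (255, 255, 255))
    print(f"shaded {count} of 64 pixels")  # shaded 46 of 64 pixels
```

With the gaze at the center, every pixel of the 8x8 example is shaded; looking up shrinks the shaded set, and the skipped pixels simply carry over from the previous frame. How much work this saves in a real renderer depends on the calibrated visual field and on the cost of shading.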

Paper: pohl16_vrst.pdf

BibTeX

@inproceedings{pohl16_vrst,
  title = {Concept for Using Eye Tracking in a Head-mounted Display to Adapt Rendering to the User's Current Visual Field},
  author = {Pohl, Daniel and Zhang, Xucong and Bulling, Andreas and Grau, Oliver},
  doi = {10.1145/2993369.2996300},
  year = {2016},
  booktitle = {Proc. of the 22nd ACM Conference on Virtual Reality Software and Technology (VRST)},
  pages = {323--324}
}