Reducing Calibration Drift in Mobile Eye Trackers by Exploiting Mobile Phone Usage

Philipp Müller, Daniel Buschek, Michael Xuelin Huang, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2019.

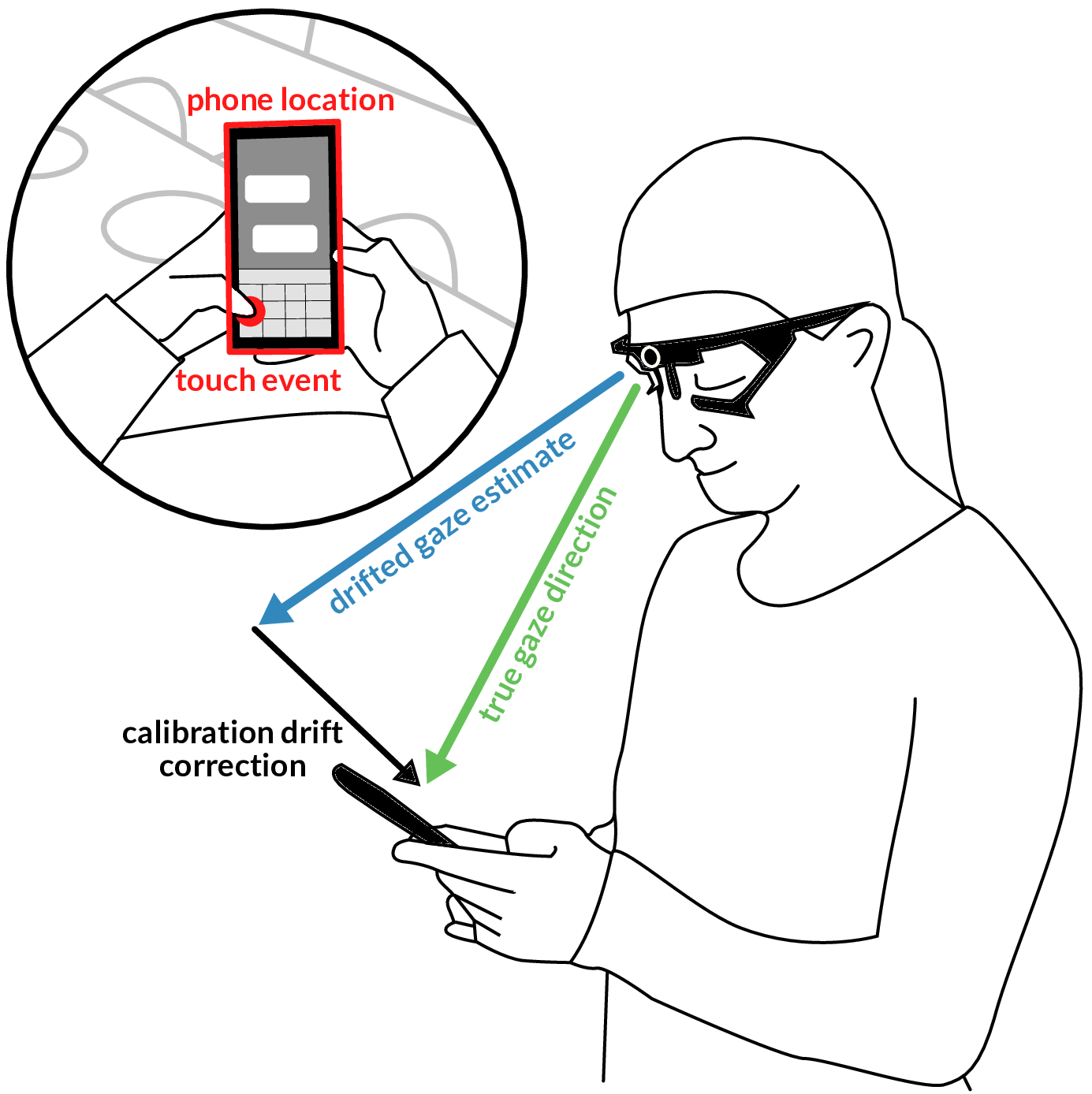

Mobile eye trackers suffer from calibration drift and inaccurate gaze estimates (blue arrow), for example caused by headset slippage. Our two novel automatic recalibration methods correct for calibration drift (black arrow) by either using the phone’s location or users’ touch events (red) to infer their true gaze direction (green arrow).
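Both methods share one core step: they pair drifted gaze estimates with a reference location the user is probably looking at (the phone, or the phone at the moment of a touch) and correct the difference. The sketch below illustrates that step under stated assumptions. It is not the authors' implementation. It assumes a translation-only drift model in scene-camera pixel coordinates. It assumes the phone's bounding box comes from some external detector. It uses a Gaussian centred on the phone as a stand-in for a phone-based saliency map. The function names `phone_saliency_map`, `estimate_drift` and `recalibrate` and the parameter `sigma_scale` are hypothetical.

```python
import numpy as np


def phone_saliency_map(height, width, phone_box, sigma_scale=0.5):
    """Hypothetical phone-based saliency map (illustrative assumption).

    Places a 2D Gaussian on the centre of the phone's bounding box
    (x0, y0, x1, y1) in scene-camera pixels and normalises it to sum to 1.
    """
    x0, y0, x1, y1 = phone_box
    cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
    # Spread scales with the box size; clamp to avoid division by zero.
    sx = max((x1 - x0) * sigma_scale, 1.0)
    sy = max((y1 - y0) * sigma_scale, 1.0)
    ys, xs = np.mgrid[0:height, 0:width]
    sal = np.exp(-(((xs - cx) / sx) ** 2 + ((ys - cy) / sy) ** 2) / 2.0)
    return sal / sal.sum()


def estimate_drift(gaze_points, target_points):
    """Translation-only drift estimate (assumed model, not the paper's).

    Returns the per-axis median of (target - gaze) over samples where the
    user is assumed to look at the phone, e.g. during touch events.
    """
    gaze = np.asarray(gaze_points, dtype=float)
    target = np.asarray(target_points, dtype=float)
    return np.median(target - gaze, axis=0)


def recalibrate(gaze_points, drift):
    """Apply the estimated (dx, dy) offset to raw gaze estimates."""
    return np.asarray(gaze_points, dtype=float) + drift
```

For the saliency variant, a per-frame reference point can be taken as the map's peak. `row, col = np.unravel_index(np.argmax(sal), sal.shape)` gives `(x, y) = (col, row)`. For the touch variant, the phone position at each touch event serves as the reference. The sketch uses the median rather than the mean so that a few samples where the user glances away from the phone do not dominate the offset. This is a design assumption of the sketch.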

Abstract

Automatic saliency-based recalibration is promising for addressing calibration drift in mobile eye trackers, but existing bottom-up saliency methods neglect users’ goal-directed visual attention in natural behaviour. By inspecting real-life recordings of egocentric eye tracker cameras, we reveal that users are likely to look at their phones once these appear in view. We propose two novel automatic recalibration methods that exploit mobile phone usage. The first builds saliency maps using the phone location in the egocentric view to identify likely gaze locations. The second uses the occurrence of touch events to recalibrate the eye tracker, thereby enabling privacy-preserving recalibration. Through in-depth evaluations on a recent mobile eye tracking dataset (N=17, 65 hours), we show that our approaches outperform a state-of-the-art saliency approach for the automatic recalibration task. As such, our approaches improve mobile eye tracking and gaze-based interaction, particularly for long-term use.

Links

Paper: mueller19_etra.pdf

BibTeX

@inproceedings{mueller19_etra,
  title = {Reducing Calibration Drift in Mobile Eye Trackers by Exploiting Mobile Phone Usage},
  author = {M{\"{u}}ller, Philipp and Buschek, Daniel and Huang, Michael Xuelin and Bulling, Andreas},
  year = {2019},
  booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)},
  doi = {10.1145/3314111.3319918},
  pages = {1--9}
}