Emotion recognition from embedded bodily expressions and speech during dyadic interactions

Philipp Müller, Sikandar Amin, Prateek Verma, Mykhaylo Andriluka, Andreas Bulling

Proc. International Conference on Affective Computing and Intelligent Interaction (ACII), pp. 663-669, 2015.

Abstract

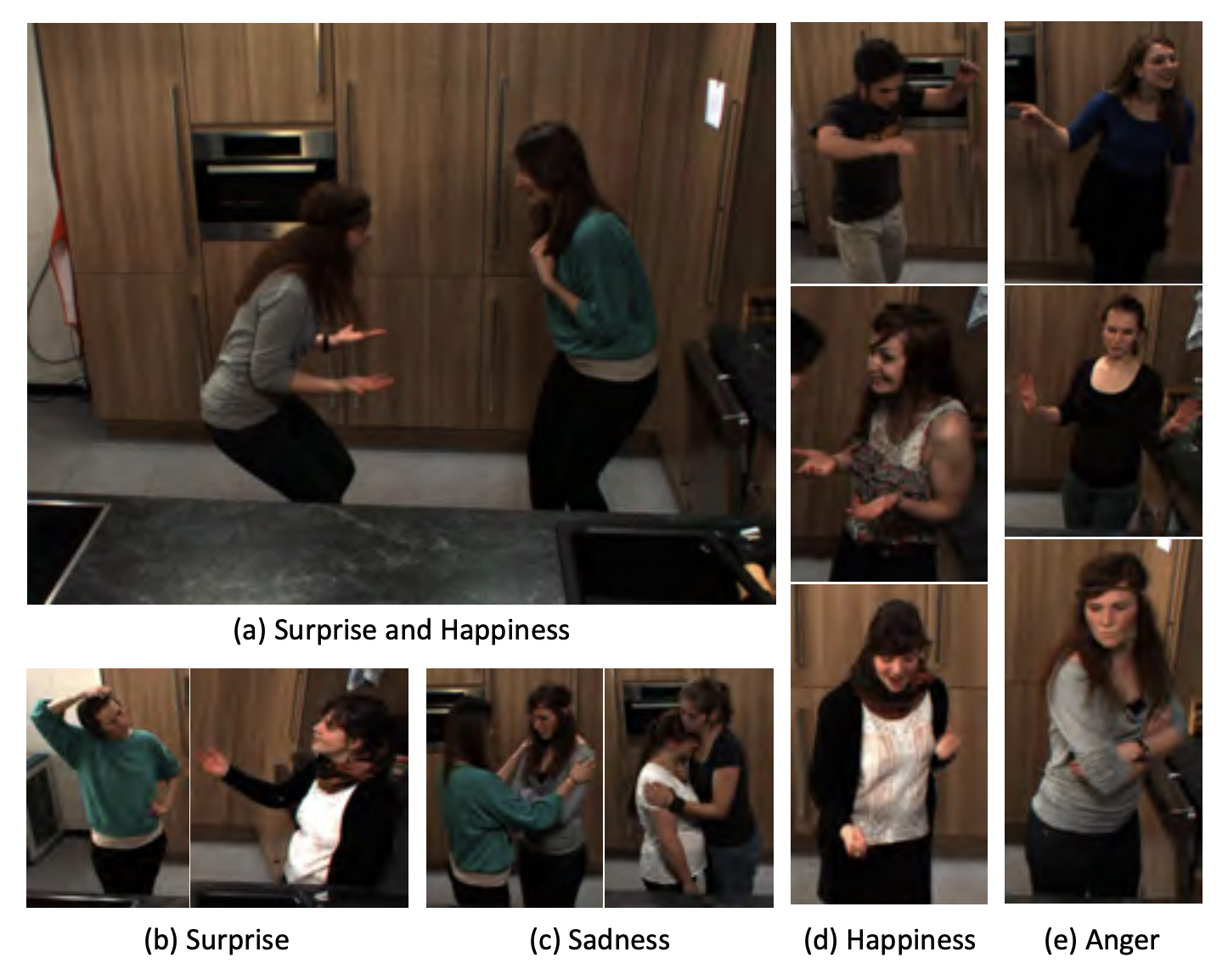

Previous work on emotion recognition from bodily expressions focused on analysing such expressions in isolation, of individuals or in controlled settings, from a single camera view, or required intrusive motion tracking equipment. We study the problem of emotion recognition from bodily expressions and speech during dyadic (person-person) interactions in a real kitchen instrumented with ambient cameras and microphones. We specifically focus on bodily expressions that are embedded in regular interactions and background activities and recorded without human augmentation to increase naturalness of the expressions. We present a human-validated dataset that contains 224 high-resolution, multi-view video clips and audio recordings of emotionally charged interactions between eight couples of actors. The dataset is fully annotated with categorical labels for four basic emotions (anger, happiness, sadness, and surprise) and continuous labels for valence, activation, power, and anticipation provided by five annotators for each actor. We evaluate vision and audio-based emotion recognition using dense trajectories and a standard audio pipeline and provide insights into the importance of different body parts and audio features for emotion recognition.

Links

doi: 10.1109/ACII.2015.7344640

Paper: mueller15_acii.pdf

BibTeX

@inproceedings{mueller15_acii,
  title = {Emotion recognition from embedded bodily expressions and speech during dyadic interactions},
  author = {M{\"{u}}ller, Philipp and Amin, Sikandar and Verma, Prateek and Andriluka, Mykhaylo and Bulling, Andreas},
  year = {2015},
  pages = {663-669},
  doi = {10.1109/ACII.2015.7344640},
  booktitle = {Proc. International Conference on Affective Computing and Intelligent Interaction (ACII)}
}