GazeDrone: Mobile Eye-Based Interaction in Public Space Without Augmenting the User

Mohamed Khamis, Anna Kienle, Florian Alt, Andreas Bulling

Proc. ACM Workshop on Micro Aerial Vehicle Networks, Systems, and Applications (DroNet), pp. 66-71, 2018.

Abstract

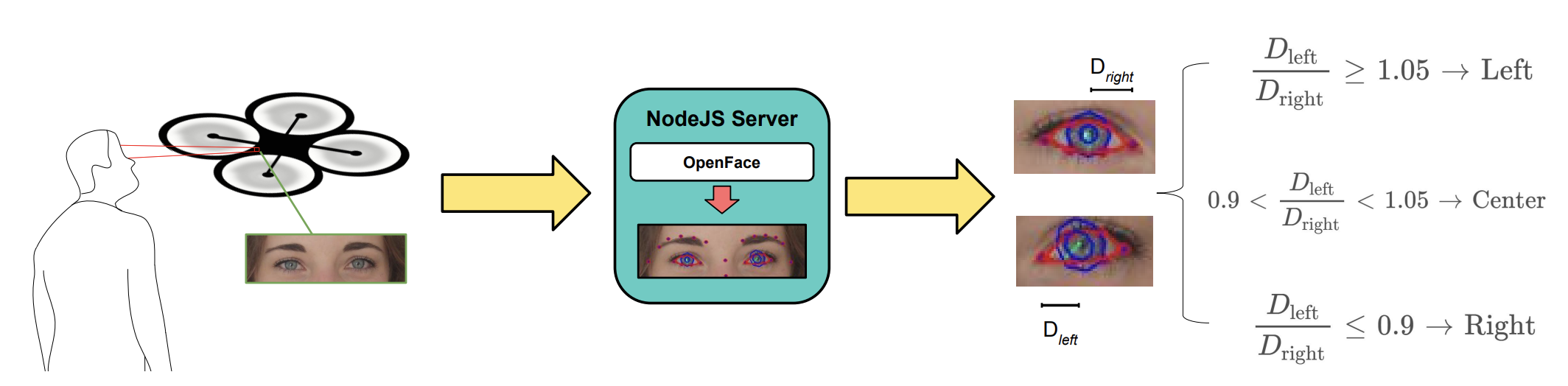

Gaze interaction holds a lot of promise for seamless human-computer interaction. At the same time, current wearable mobile eye trackers require user augmentation that negatively impacts natural user behavior while remote trackers require users to position themselves within a confined tracking range. We present GazeDrone, the first system that combines a camera-equipped aerial drone with a computational method to detect sidelong glances for spontaneous (calibration-free) gaze-based interaction with surrounding pervasive systems (e.g., public displays). GazeDrone does not require augmenting each user with on-body sensors and allows interaction from arbitrary positions, even while moving. We demonstrate that drone-supported gaze interaction is feasible and accurate for certain movement types. It is well-perceived by users, in particular while interacting from a fixed position as well as while moving orthogonally or diagonally to a display. We present design implications and discuss opportunities and challenges for drone-supported gaze interaction in public.

Links

Paper: khamis18_dronet.pdf

BibTeX

@inproceedings{khamis18_dronet,

title = {GazeDrone: Mobile Eye-Based Interaction in Public Space Without Augmenting the User},

author = {Khamis, Mohamed and Kienle, Anna and Alt, Florian and Bulling, Andreas},

doi = {10.1145/3213526.3213539},

year = {2018},

booktitle = {Proc. ACM Workshop on Micro Aerial Vehicle Networks, Systems, and Applications (DroNet)},

pages = {66-71}

}