Adversarial Attacks on Classifiers for Eye-based User Modelling

Inken Hagestedt, Michael Backes, Andreas Bulling

arXiv:2006.00860, pp. 1–9, 2020.

Abstract

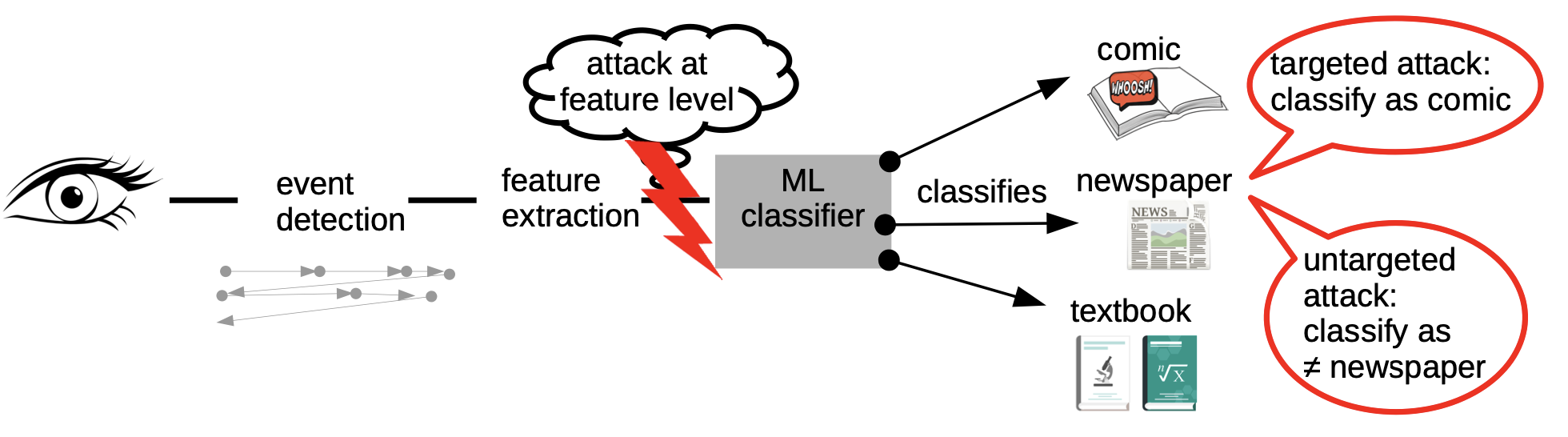

An ever-growing body of work has demonstrated the rich information content available in eye movements for user modelling, e.g. for predicting users’ activities, cognitive processes, or even personality traits. We show that state-of-the-art classifiers for eye-based user modelling are highly vulnerable to adversarial examples: small artificial perturbations in gaze input that can dramatically change a classifier’s predictions. We generate these adversarial examples using the Fast Gradient Sign Method (FGSM), which linearises the classifier’s loss around the input to find suitable perturbations. On the sample task of eye-based document type recognition, we study the success of different adversarial attack scenarios: with and without knowledge of the classifier’s gradients (white-box vs. black-box), and with and without targeting the attack to a specific class. In addition, we demonstrate the feasibility of defending against adversarial attacks by adding adversarial examples to a classifier’s training data.

Links

Paper: hagestedt20_arxiv.pdf

Paper Access: https://arxiv.org/abs/2006.00860

BibTeX

@techreport{hagestedt20_arxiv,
  title = {Adversarial Attacks on Classifiers for Eye-based User Modelling},
  author = {Hagestedt, Inken and Backes, Michael and Bulling, Andreas},
  year = {2020},
  pages = {1--9},
  url = {https://arxiv.org/abs/2006.00860}
}
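The abstract above names FGSM without spelling out the update rule. The following is a rough illustration, not the authors' implementation. It applies FGSM to a hypothetical softmax (multinomial logistic regression) classifier over a small, made-up gaze-feature vector. The untargeted attack moves every feature by eps in the direction that increases the loss of the true class y, x' = x + eps · sign(∇x L(x, y)). The targeted variant instead decreases the loss of a chosen class t, x' = x − eps · sign(∇x L(x, t)). The helper `augment_with_fgsm` mirrors the defence of adding adversarial examples to the training data. All function names, weights, feature values, and eps are assumptions made for this sketch.

```python
import math

# Minimal FGSM sketch against a softmax (multinomial logistic regression)
# classifier. The model, features, and eps are hypothetical illustrations,
# not the eye-based classifiers studied in the paper.

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict_proba(W, b, x):
    """Class probabilities softmax(W x + b) for one feature vector x."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)

def predict(W, b, x):
    """Index of the most probable class."""
    p = predict_proba(W, b, x)
    return max(range(len(p)), key=p.__getitem__)

def input_gradient(W, b, x, label):
    """Gradient of the cross-entropy loss with respect to the input x.

    For softmax(W x + b) this is W^T (p - onehot(label)).
    """
    p = predict_proba(W, b, x)
    delta = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    return [sum(delta[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(W, b, x, label, eps, targeted=False):
    """One FGSM step with an L-infinity budget eps.

    Untargeted: label is the true class, step up its loss.
    Targeted: label is the desired class, step down its loss.
    """
    g = input_gradient(W, b, x, label)
    step = -eps if targeted else eps
    return [xj + step * sign(gj) for xj, gj in zip(x, g)]

def augment_with_fgsm(W, b, X, Y, eps):
    """Adversarial-training style augmentation: append one FGSM example
    per training sample, keeping the original (true) label."""
    X_adv = [fgsm(W, b, x, y, eps) for x, y in zip(X, Y)]
    return X + X_adv, Y + Y

# Hypothetical 3-class model over 4 gaze features.
W = [[1.0, -1.0, 0.5, 0.0],
     [-0.5, 1.0, 0.0, 0.5],
     [0.0, 0.0, -1.0, 1.0]]
b = [0.0, 0.0, 0.0]
x = [0.5, 0.2, 0.3, 0.1]

x_adv = fgsm(W, b, x, label=predict(W, b, x), eps=0.3)
x_tgt = fgsm(W, b, x, label=1, eps=0.3, targeted=True)
print(predict(W, b, x), predict(W, b, x_adv), predict(W, b, x_tgt))  # 0 1 1
```

Because the attack reads the model's weights directly, this corresponds to the white-box setting. In a black-box setting, one common approach is to compute the gradient on a surrogate model that the attacker trains and then transfer the perturbed input, although the abstract does not say how the paper's black-box attacks are set up. With real gaze data, the perturbed features would usually also be clipped to a physically valid range after the step.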