Contextual Media Retrieval Using Natural Language Queries

Sreyasi Nag Chowdhury, Mateusz Malinowski, Andreas Bulling, Mario Fritz

arXiv:1602.04983, pp. 1–8, 2016.

Abstract

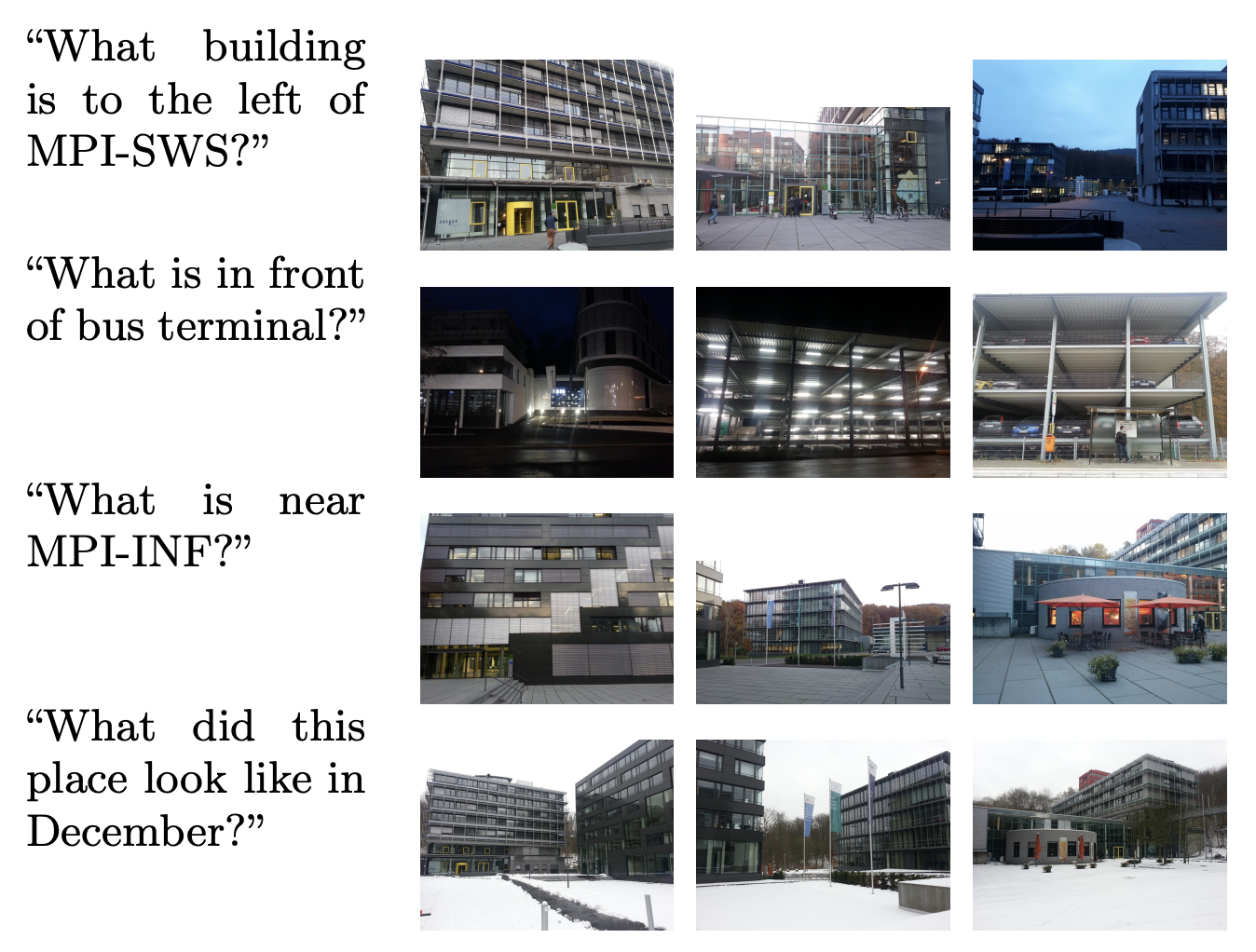

The widespread integration of cameras in hand-held and head-worn devices as well as the ability to share content online enables a large and diverse visual capture of the world that millions of users build up collectively every day. We envision these images as well as associated meta information, such as GPS coordinates and timestamps, to form a collective visual memory that can be queried while automatically taking the ever-changing context of mobile users into account. As a first step towards this vision, in this work we present Xplore-M-Ego: a novel media retrieval system that allows users to query a dynamic database of images and videos using spatio-temporal natural language queries. We evaluate our system using a new dataset of real user queries as well as through a usability study. One key finding is that there is a considerable amount of inter-user variability, for example in the resolution of spatial relations in natural language utterances. We show that our retrieval system can cope with this variability using personalisation through an online learning-based retrieval formulation.
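The abstract states that users resolve spatial relations differently and that personalisation through online learning absorbs this variability. The abstract does not specify the learner, so the following is only a minimal sketch under stated assumptions, not the Xplore-M-Ego implementation: the natural language query is assumed to be already parsed into an anchor location, a time window and the relation "near"; each user's notion of "near" is assumed to be a single radius in metres; and the user is assumed to give relevance feedback on results. The names `MediaItem`, `haversine_m`, `NearModel` and `retrieve`, and the multiplicative mistake-driven update, are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class MediaItem:
    """Hypothetical record: an image or video with its GPS position and capture time."""
    name: str
    lat: float
    lon: float
    taken_at: datetime


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class NearModel:
    """Hypothetical per-user model of "near": one radius in metres, updated online."""

    def __init__(self, radius_m: float = 200.0, step: float = 1.25) -> None:
        self.radius_m = radius_m
        self.step = step  # multiplicative factor applied on each mistake

    def is_near(self, dist_m: float) -> bool:
        return dist_m <= self.radius_m

    def update(self, dist_m: float, relevant: bool) -> None:
        """Mistake-driven update from one item of (assumed) relevance feedback."""
        predicted = self.is_near(dist_m)
        if relevant and not predicted:
            self.radius_m *= self.step  # missed a relevant item: widen "near"
        elif predicted and not relevant:
            self.radius_m /= self.step  # returned an irrelevant item: narrow "near"


def retrieve(items, anchor, now, hours, near):
    """Names of items captured in the last `hours` and "near" `anchor`, closest first."""
    lat0, lon0 = anchor
    start = now - timedelta(hours=hours)
    hits = []
    for item in items:
        if not (start <= item.taken_at <= now):
            continue  # outside the temporal window
        d = haversine_m(lat0, lon0, item.lat, item.lon)
        if near.is_near(d):
            hits.append((d, item.name))
    return [name for _, name in sorted(hits)]


# Usage with synthetic data (0.001 degrees of latitude is roughly 111 m).
now = datetime(2016, 2, 16, 18, 0)
anchor = (0.0, 0.0)
items = [
    MediaItem("cafe.jpg", 0.001, 0.0, now - timedelta(hours=2)),    # ~111 m
    MediaItem("bridge.mp4", 0.003, 0.0, now - timedelta(hours=5)),  # ~334 m
    MediaItem("museum.jpg", 0.010, 0.0, now - timedelta(hours=1)),  # ~1.1 km
    MediaItem("old.jpg", 0.0005, 0.0, now - timedelta(hours=48)),   # outside 24 h
]
near = NearModel(radius_m=200.0, step=1.25)
print(retrieve(items, anchor, now, 24, near))  # ['cafe.jpg']

# This user considers bridge.mp4 "near": feed that back until the model agrees.
d_bridge = haversine_m(0.0, 0.0, 0.003, 0.0)
while not near.is_near(d_bridge):
    near.update(d_bridge, relevant=True)
print(near.radius_m)  # 390.625
print(retrieve(items, anchor, now, 24, near))  # ['cafe.jpg', 'bridge.mp4']
```

The update is multiplicative so that the same rule behaves sensibly whether a given user's "near" means tens of metres or several kilometres. A second user who gave different feedback would end up with a different radius, which is one simple way to model the inter-user variability described above.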

Paper: chowdhury16_arxiv.pdf

Paper Access: https://arxiv.org/abs/1602.04983

BibTeX

@techreport{chowdhury16_arxiv,
  title = {Contextual Media Retrieval Using Natural Language Queries},
  author = {Chowdhury, Sreyasi Nag and Malinowski, Mateusz and Bulling, Andreas and Fritz, Mario},
  year = {2016},
  pages = {1--8},
  url = {https://arxiv.org/abs/1602.04983}
}